What Is Quantum Computing?

This informal paper is designed to provide a brief but meaningful introduction to quantum computing, the process and significance of using quantum computers to address compute-intensive application problems, without getting bogged down in detailed technical jargon. It should be suitable for technical and non-technical audiences alike, including managers and executives, as well as technical staff who are not yet familiar with quantum computing.

This is intended to be a light, high-level view of the overall process of using quantum computers, rather than a tutorial or hands-on guide on how to design algorithms or develop applications which use quantum computers. It stops short of teaching you about all of the programming model details, software, tools, and techniques needed to engage in quantum computing. But it does offer a reasonably complete panoramic view of what quantum computing can do for you, when it might and might not be an appropriate choice for solving your application problems, and what types of issues might arise and need to be addressed during the process.

The good news is that this paper is written almost exclusively in simple, plain language:

- No math. No equations or formulas.

- No physics. Okay, a little, but just a very little, and only in plain language.

- No math symbols.

- No Greek letters or symbols.

- No complex, complicated, or confusing diagrams, tables, or charts. Just plain text, albeit with lots of bullet points and numbered lists.

- No pretty but distracting and relatively useless photos, images, or graphics.

- No jargon. Okay, a little, but minimal.

- No PhD required. Not even a STEM degree is required.

It doesn’t describe what a quantum computer is in any great detail, since that was covered in my preceding paper, What Is a Quantum Computer?

Caveat: My comments here focus only on general-purpose quantum computers. Some may also apply to special-purpose quantum computing devices, but that is beyond the scope of this informal paper. For more on this caveat, see my informal paper:

- What Is a General-Purpose Quantum Computer?

- https://jackkrupansky.medium.com/what-is-a-general-purpose-quantum-computer-9da348c89e71

This is a very long paper. For a briefer view of quantum computing, check out these two sections:

- The elevator pitch on quantum computing

- Quantum computing in a nutshell

Topics discussed in this paper:

- The target of quantum computing: production-scale practical real-world problems

- The goal of quantum computing: production deployment of production-scale practical real-world quantum applications

- The elevator pitch on quantum computing

- What is a quantum computer?

- What is a practical quantum computer?

- The essence of quantum computing: exploiting the inherent parallelism of a quantum computer

- What is practical quantum computing?

- The quantum computing sector

- Scope

- Quantum computing in a nutshell

- The grand challenge of quantum computing

- The twin goals of quantum computing: achieve dramatic quantum advantage and deliver extraordinary business value

- Achieve dramatic quantum advantage

- Deliver extraordinary business value

- Quantum computing is the process of utilizing quantum computers to address production-scale practical real-world problems

- The components of quantum computing

- Quantum computer, quantum computation, and quantum computing

- Quantum computing is the use of a quantum computer to perform quantum computation

- Quantum algorithms and quantum applications

- Approaches to quantum computing

- Three stages for development of quantum algorithms and quantum applications — prototyping, pilot projects, and production projects

- Prototyping, experimentation, and evaluation

- Proof of concept experiments

- Prototyping vs. pilot project

- Production projects and production deployment

- Production deployment of a production-scale practical real-world quantum application

- Quantum effects and how they enable quantum computing

- Quantum information

- Quantum information science (QIS) as the umbrella field over quantum computing

- Quantum information science and technology (QIST) — the science and engineering of quantum systems

- Quantum mechanics — can be ignored at this stage

- Quantum physics — can also be ignored at this stage

- Quantum state

- What is a qubit? Don’t worry about it at this stage!

- No, a qubit isn’t comparable to a classical bit

- A qubit is a hardware device comparable to a classical flip flop

- Superposition, entanglement, and product states enable quantum parallelism

- Quantum system — in physics

- Quantum system — a quantum computer

- Computational leverage

- k qubits enable a solution space of 2^k quantum states

- Product states are the quantum states of quantum parallelism

- Product states are the quantum states of entangled qubits

- k qubits enable a solution space of 2^k product states

- Qubit fidelity

- Nines of qubit fidelity

- Qubit connectivity

- Any-to-any qubit connectivity is best

- Full qubit connectivity

- SWAP networks to achieve full qubit connectivity — works, but slower and lower fidelity

- May not be able to use all of the qubits in a quantum computer

- Quantum Volume (QV) measures how many of the qubits you can use in a single quantum computation

- Why is Quantum Volume (QV) valid only up to about 50 qubits?

- Programming model — the essence of programming a quantum computer

- Ideal quantum computer programming model not yet discovered

- Future programming model evolution

- Algorithmic building blocks, design patterns, and application frameworks

- Algorithmic building blocks

- Design patterns

- Application frameworks

- Quantum applications and quantum algorithms

- Quantum applications are a hybrid of quantum computing and classical computing

- Basic model for a quantum application

- Post-processing of the results from a quantum algorithm

- Quantum algorithm vs. quantum circuit

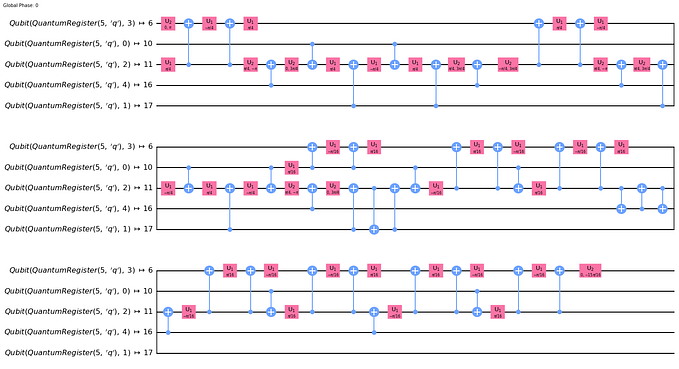

- Quantum circuits and quantum logic gates — the code for a quantum computer

- Generative coding of quantum circuits rather than hand-coding of circuits

- Algorithmic building blocks, design patterns, and application frameworks are critical to successful use of a quantum computer

- Quantum Fourier transform (QFT) and quantum phase estimation (QPE) are critical to successful use of a quantum computer

- Measurement — getting classical results from a quantum computer

- Measurement — collapse of the wave function

- Extracting useful results from a massively parallel quantum computation

- Components of a quantum computer

- Access to a quantum computer

- Quantum service providers

- Having your own in-house quantum computer is not a viable option at this stage

- Job scheduling and management

- Local runtime for tighter integration of classical and quantum processing

- Where are we at with quantum computing?

- Current state of quantum computing

- Getting to commercialization — pre-commercialization, premature commercialization, and finally commercialization — research, prototyping, and experimentation

- Quantum computing is still in the pre-commercialization phase

- More suited for the lunatic fringe who will use anything than for normal, average technical staff

- Still a mere laboratory curiosity

- Much research is still needed

- How much more research is required?

- No 40-qubit algorithms to speak of

- Where are all of the 40-qubit algorithms?

- Beware of premature commercialization

- Doubling down on pre-commercialization is the best path forwards

- The ENIAC Moment — proof that quantum computer hardware is finally up to the task of real-world use

- The time to start is not now unless you’re the elite, the lunatic fringe

- At least another two to three years before quantum computing is ready to begin commercialization

- Little of current quantum computing technology will end up as the foundation for practical quantum computers and practical quantum computing

- Current quantum computing technology is the precursor for practical quantum computers and practical quantum computing

- Little data with a big solution space

- Very good at searching for needles in haystacks

- Quantum parallelism

- The secret sauce of quantum computing is quantum parallelism

- Combinatorial explosion — moderate number of parameters but very many combinations

- But not good for Big Data

- What production-scale practical real-world problems can a quantum computer solve — and deliver so-called quantum advantage, and deliver real business value?

- Heuristics for applicability of quantum computing to a particular application problem

- What applications are suitable for a quantum computer?

- Quantum machine learning (QML)

- Quantum AI

- Quantum AGI

- What can’t a quantum computer compute?

- Not all applications will benefit from quantum computing

- Quantum-resistant problems and applications

- Post-quantum cryptography (PQC)

- Post-quantum cryptography (PQC) is likely unnecessary since Shor’s factoring algorithm likely won’t work for large numbers the size of encryption keys

- How do you send input data to a quantum computer? You don’t…

- Any input data must be encoded in the quantum circuit for a quantum algorithm

- Classical solution vs. quantum solution

- Quantum advantage

- Dramatic quantum advantage is the real goal

- Quantum advantage — make the impossible possible, make the impractical practical

- Fractional quantum advantage

- Three levels of quantum advantage — minimal, substantial or significant, and dramatic quantum advantage

- Net quantum advantage — discount by repetition needed to get accuracy

- What is the quantum advantage of your quantum algorithm or application?

- Be careful not to compare the work of a great quantum team to the work of a mediocre classical team

- To be clear, quantum parallelism and quantum advantage are a function of the algorithm

- Quantum supremacy

- Random number-based quantum algorithms and quantum applications are actually commercially viable today

- Quantum supremacy now: Generation of true random numbers

- Need to summarize capability requirements for quantum algorithms and applications

- Matching the capability requirements for quantum algorithms and applications to the capabilities of particular quantum computers

- A variety of quantum computer types and technologies

- Quantum computer types

- General-purpose quantum computers

- Universal general-purpose gate-based quantum computer

- Vendors of general-purpose quantum computers

- Special-purpose quantum computing devices

- Special-purpose quantum computers or special-purpose quantum computing devices?

- General-purpose vs. special-purpose quantum computers

- Qubit technologies

- Qubit modalities

- Quantum computer technologies

- Different types and technologies of quantum computers may require distinctive programming models

- Don’t get confused by special-purpose quantum computing devices that promise much more than they actually can deliver

- Coherence, decoherence, and coherence time

- Gate execution time — determines how many gates can be executed within the coherence time

- Maximum quantum circuit size — limits size of quantum algorithms

- Another quantum computer technology: the simulator for a quantum computer

- Simulators for quantum computers

- Classical quantum simulator

- Quantum computers — real and simulated are both needed

- Two types of simulator and simulation

- Classical quantum simulator — simulate a quantum computer on a classical computer

- Simulation — simulating the execution of a quantum algorithm using a classical computer

- Context may dictate that simulation implies simulation of science

- Focus on using simulators rather than real quantum computers until much better hardware becomes available

- Capabilities, limitations, and issues for quantum computing

- Quantum computers are inherently probabilistic rather than absolutely deterministic

- Quantum computers are a good choice when approximate answers are acceptable

- Statistical processing can approximate determinism, to some degree, even when results are probabilistic

- As challenging as quantum computer hardware is, quantum algorithms are just as big a challenge

- The process of designing quantum algorithms is extremely difficult and challenging

- Algorithmic complexity, computational complexity, and Big-O notation

- Quantum speedup

- Key trick: Reduction in computational complexity

- We need real quantum algorithms on real machines (or real simulators) — not hypothetical or idealized

- Might a Quantum Winter be coming?

- Risk of a Quantum IP Winter

- No, Grover’s search algorithm can’t search or query a database

- No, Shor’s factoring algorithm probably can’t crack a large encryption key

- No, variational methods don’t show any promise of delivering any dramatic quantum advantage

- Quantum computer vs. quantum computing vs. quantum computation

- Quantum computer vs. quantum computer system vs. quantum processor vs. quantum processing unit vs. QPU

- Quantum computer as a coprocessor rather than a full-function computer

- Quantum computers cannot fully replace classical computers

- Quantum applications are mostly classical code with only selected portions which run on a quantum computer

- No quantum operating system

- Noise, errors, error mitigation, error correction, logical qubits, and fault tolerant quantum computing

- Perfect logical qubits

- NISQ — Noisy Intermediate-Scale Quantum computers

- Near-perfect qubits as a stepping stone to fault-tolerant quantum computing

- Quantum error correction (QEC) remains a distant promise, but not critical if we have near-perfect qubits

- Circuit repetitions as a poor man’s approximation of quantum error correction

- Beyond NISQ — not so noisy or not intermediate-scale

- When will the NISQ era end and when will the post-NISQ era begin?

- Three stages of adoption for quantum computing — The ENIAC Moment, Configurable packaged quantum solutions, and The FORTRAN Moment

- Configurable packaged quantum solutions are the greatest opportunity for widespread adoption of quantum computing

- The FORTRAN Moment — It is finally easy for most organizations to develop their own quantum applications

- Quantum networking and quantum Internet — research topics, not a near-term reality

- Distributed quantum computing — longer-term research topic

- Distributed quantum applications

- Distributed quantum algorithms — longer-term research topic

- Quantum application approaches

- Quantum network services vs. quantum applications

- What could you do with 1,000 qubits?

- 48 fully-connected near-perfect qubits may be the sweet spot for achieving a practical quantum computer

- 48 fully-connected near-perfect qubits may be the minimum configuration for achieving a practical quantum computer

- 48 fully-connected near-perfect qubits may be the limit for practical quantum computers

- The basic flow for quantum computing

- Quantum programming

- Quantum software development kits (SDK)

- Platforms

- Quantum computing software development platforms

- Quantum workflow orchestration

- Programming languages for quantum applications

- Programming languages for quantum algorithms

- Quantum-native high-level programming language

- Python for quantum programming

- Python and Jupyter Notebooks

- Support software and tools

- Audiences for support software and tools

- Tools

- Developer tools

- Audiences for developer tools

- Compilers, transpilers, and quantum circuit optimization

- Interactive and online tools

- Beware of tools to mask severe underlying technical deficiencies or difficulty of use

- Agile vs. structured development methodology

- Need for an Association for Quantum Computing Machinery — dedicated to the advancement of practical quantum computing

- Need to advance quantum information theory

- Need to advance quantum computer engineering

- Need to advance quantum computer science

- Need to advance quantum software engineering

- Need to advance quantum algorithms and applications

- Need to advance quantum infrastructure and support software

- Need to advance quantum application engineering

- Need for support for research

- Need for quantum computing education and training

- Need for quantum certification

- Need for quantum computing standards

- Need for quantum publications

- Need for quantum computing community

- Quantum computing community

- Need for quantum computing ecosystem

- Quantum computing ecosystem

- Distinction between the quantum computing community and the quantum computing ecosystem

- Call for Intel to focus on components for others to easily build their own quantum computers

- Intel could single-handedly do for the quantum computing ecosystem what IBM, Intel, and Microsoft did for the PC ecosystem

- Need to assist students

- Need for recognition and awards

- Need for code of ethics and professional conduct

- Quantum computing as a profession

- Benchmarking

- Education

- Training

- What is the best training for quantum computing?

- Getting started with IBM Qiskit Textbook

- Workforce development

- How to get started with quantum computing

- Investors and venture capital for quantum computing

- Commitment to a service level agreement (SLA)

- Diversity of sourcing

- Dedicated access vs. shared access

- Deployment and production

- Shipment, release, and deployment criteria

- How much might a quantum computer system cost?

- Pricing for service — leasing and usage

- Pricing for software, tools, algorithms, applications, and services

- Open source is essential

- Intellectual property (IP) — boon or bane?

- Shared knowledge — opportunities and obstacles

- Secret projects — sometimes they can’t be avoided

- Secret government projects — they’re the worst

- Transparency is essential — secrecy sucks!

- An open source quantum computer would be of great value

- Computational diversity

- Quantum-inspired algorithms and quantum-inspired computing

- Collaboration — strategic alliances, partnerships, joint ventures, and programs

- Classical computing still has a lot of runway ahead of it and quantum computing still has a long way to go to catch up

- Science fiction

- Universal quantum computer is an ambiguous term

- Both hardware and algorithms are limiting quantum computing

- A practical quantum computer would be ready for production deployment to address production-scale practical real-world problems

- Practical quantum computing isn’t really near, like within one, two, or three years

- Why aren’t quantum computers able to address production-scale practical real-world problems today?

- Limitations of quantum computing

- Quantum computation must be relatively modest

- No, you can’t easily migrate your classical algorithms to run on a quantum computer

- Lack of fine granularity for phase angles may dramatically limit quantum computing

- Limitations of current quantum computers

- Two most urgent needs in quantum computing: higher qubit fidelity and full qubit connectivity

- Premature commercialization is a really bad idea

- Minimum viable product (MVP)

- Initial commercialization stage — C1.0

- Subsequent commercialization stages

- But commercialization is not imminent — still years away from even commencing

- Quantum Ready

- Quantum Ready — but for who and when?

- Quantum Aware — what quantum computing can do, not how it does it

- The technical elite and lunatic fringe will always be ready, for anything

- But even with the technical elite and lunatic fringe, be prepared for the potential for several false starts

- Quantum Ready — on your own terms and on your own timeline

- Timing is everything — when will it be time for your organization to dive deep on quantum computing?

- Your organizational posture towards technological advances

- Each organization must create its own quantum roadmap

- Role of academia and the private sector

- Role of national governments

- When will quantum computing finally be practical?

- Names of the common traditional quantum algorithms

- Quantum teleportation — not relevant to quantum computing

- IBM model for quantum computing

- Circuit knitting

- Dynamic circuits

- Future prospects of quantum computing

- Post-quantum computing — beyond the future

- Should we speak of quantum computing as an industry, a field, a discipline, a sector, a domain, a realm, or what? My choice: sector (of the overall computing industry)

- Why am I still unable to write a brief introduction to quantum computing?

- List of my papers on quantum computing

- Definitions from my Quantum Computing Glossary

- Jargon and acronyms — most of it can be ignored unless you really need it

- My glossary of quantum computing terms

- arXiv — the definitive source for preprints of research papers

- Free online books

- Resources for quantum computing

- Personas, use cases, and access patterns for quantum computing

- Beware of oddball, contrived computer science experiments

- Beware of research projects masquerading as commercial companies

- Technology transfer

- Research spinoffs

- Don’t confuse passion for pragmatism

- Quantum hype — enough said

- Should this paper be turned into a book?

- My original proposal for this topic

- Summary and conclusions

The target of quantum computing: production-scale practical real-world problems

Much of the published material on quantum computing addresses hypothetical, theoretical, contrived, or rather small problems. Much of it is simply disconnected from real-world concerns. So, the central focus needs to be:

- Real-world problems

And since much of that material approaches topics from overly abstract, hypothetical, and theoretical perspectives, the focus needs to be on the practical:

- Practical real-world problems

Many existing efforts at using quantum computing have been very limited, at toy scale, or, to be charitable, prototypes or proof of concept experiments. The great promise of quantum computing is supposed to be impressive performance, so the emphasis needs to be on problems at production scale. That is the essential target of quantum computing:

- Production-scale practical real-world problems

So, applications for quantum computers need to be:

- Production-scale practical real-world quantum applications

The goal of quantum computing: production deployment of production-scale practical real-world quantum applications

That’s the target of quantum computing: addressing production-scale practical real-world problems.

And the goal is to produce production-scale practical real-world quantum applications.

Actually, the goal is not simply to produce such quantum applications, but to deploy them in a production environment so that they can begin fulfilling their promise. So the goal of quantum computing is actually:

- Production deployment of production-scale practical real-world quantum applications

That is the promised land that we seek to journey to. Anything less than that won’t be worth our attention, resources, and effort.

The elevator pitch on quantum computing

What is quantum computing?

Unlike classical computing, in which alternatives are evaluated one at a time, quantum computing can evaluate a vast number of alternatives all at the same time, in a single computation rather than a vast number of computations. This parallel evaluation of a large number of alternatives is known as quantum parallelism.

A quantum computer is a specialized electronic device which exploits the seemingly magical qualities of quantum effects governed by the principles of quantum mechanics to enable it to perform a fairly small calculation on a very large number of possible solution values all at once, producing a solution value in a very short amount of time. This parallel computation on a large number of values is the quantum parallelism mentioned above.
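For technically inclined readers, here is a minimal sketch of what "a very large number of possible solution values all at once" means. It is ordinary Python that simulates a quantum computer on a classical computer. It is not code for a real quantum computer, and the function name `uniform_superposition` is hypothetical. Start with k qubits that are all 0, then apply a Hadamard gate (a standard quantum logic gate) to each one. The result is a state that holds 2^k values, all with equal weight:

```python
import math


def uniform_superposition(k):
    """Hypothetical helper: classically simulate k qubits, all starting at 0,
    after a Hadamard gate is applied to each one. Returns the 2**k amplitudes."""
    state = [0.0] * (2 ** k)
    state[0] = 1.0  # every qubit starts in the 0 state
    h = 1 / math.sqrt(2)
    for q in range(k):
        bit = 1 << q  # position of qubit q within a state index
        new_state = [0.0] * len(state)
        for i, amp in enumerate(state):
            if amp == 0.0:
                continue
            zero, one = i & ~bit, i | bit  # indices with qubit q set to 0 and to 1
            # Hadamard: 0 becomes (0 + 1)/sqrt(2), 1 becomes (0 - 1)/sqrt(2)
            sign = -1.0 if i & bit else 1.0
            new_state[zero] += h * amp
            new_state[one] += sign * h * amp
        state = new_state
    return state
```

Calling `uniform_superposition(3)` returns 8 equal amplitudes of about 0.354. Each added qubit doubles the length of the list. Measuring such a state yields only one of those 2^k values, chosen at random. That is why it is so difficult to design quantum algorithms that extract a useful answer.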

A quantum computer is not a full-blown computer capable of the full range of computation of a classical computer, but is more of a coprocessor which can perform carefully chosen and crafted calculations at a rate far exceeding the performance of even the best classical supercomputers.

Quantum applications are a hybrid of classical code and quantum algorithms. Most of the application, including any complex logic and handling of data, is classical, while small but critical and time-consuming calculations can be extracted and transformed into quantum algorithms which can be executed in a much more efficient manner than is possible with even the most powerful classical supercomputers.
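The hybrid structure can be sketched in a few lines of Python. This is only an illustration, and its assumptions should be stated plainly. `hypothetical_quantum_kernel` is a made-up stand-in that imitates a probabilistic quantum result. No real quantum computer or quantum SDK is involved. The hidden answer, the success probability, and the shot count are arbitrary. In the sketch, classical code sets up the problem, the quantum step runs many times, and classical post-processing picks the most frequent result:

```python
import random
from collections import Counter


def hypothetical_quantum_kernel(answer, k, p_correct, rng):
    """Hypothetical stand-in for one run ("shot") of a quantum algorithm.
    Returns the correct k-bit answer with probability p_correct, otherwise a
    random k-bit string, to mimic a probabilistic, noisy quantum result."""
    if rng.random() < p_correct:
        return answer
    return format(rng.randrange(2 ** k), f"0{k}b")


def hybrid_application(shots=200, p_correct=0.6, seed=1):
    rng = random.Random(seed)
    # Classical code: set up the problem (here, an arbitrary hidden 4-bit answer).
    k, answer = 4, "1011"
    # Quantum step: run the small quantum kernel many times (repeated shots).
    results = [hypothetical_quantum_kernel(answer, k, p_correct, rng)
               for _ in range(shots)]
    # Classical post-processing: take the most frequent result as the answer.
    best, count = Counter(results).most_common(1)[0]
    return best, count / shots
```

With the defaults, `hybrid_application()` should report "1011" as the answer. Running the quantum step repeatedly and keeping the most common result is one simple form of statistical processing. It compensates for results that are probabilistic rather than deterministic.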

Quantum computers are still in their infancy. They demonstrate only rudimentary capabilities and are not even close to being able to address production-scale practical real-world problems, nor to being ready for production deployment. They are, however, available in limited capacities for prototyping and experimentation, even as significant research continues.

It may be three to five, seven, or even ten years before practical quantum computers are generally available that support production-scale practical real-world quantum applications, achieve dramatic quantum advantage over classical computing, and deliver extraordinary business value well beyond the reach of classical computing.

What is a quantum computer?

A quantum computer is a specialized electronic device which exploits the seemingly magical qualities of quantum effects governed by the principles of quantum mechanics to enable it to perform a fairly small calculation on a very large number of possible solution values all at once using a process known as quantum parallelism, producing a solution value in a very short amount of time.

This paper focuses on quantum computing: the use of quantum computers and their application to real-world application problems.

For more detail on quantum computers themselves, see my previous paper, What Is a Quantum Computer?

What is a practical quantum computer?

As already mentioned, quantum computers are still in their infancy and not ready for practical use. So, what is a practical quantum computer? By definition:

- A practical quantum computer supports production-scale practical real-world quantum applications and achieves dramatic quantum advantage over classical computing and delivers extraordinary business value which is well beyond the reach of classical computing.

The essence of quantum computing: exploiting the inherent parallelism of a quantum computer

At its essence, quantum computing enables a quantum application to exploit the inherent parallelism of a quantum computer to evaluate a very large number of potential solutions to a relatively small computation all at once, delivering a result in a very short amount of time.

What is practical quantum computing?

Practical quantum computing is what quantum computing becomes once practical quantum computers themselves come to fruition, coupled with all of the software, algorithms, and other components of quantum computing.

Practical quantum computers are the hardware.

Practical quantum computing is the hardware plus all of the software.

The software includes quantum algorithms, quantum applications, support software, and tools, especially developer tools.

Also included are:

- Education.

- Training.

- Ongoing research.

- Consulting.

- Conferences.

- Publications.

- Community.

- Ecosystem.

The quantum computing sector

Some may consider quantum computing to be an industry or field, but for the purposes of this informal paper I consider quantum computing to be a sector of the overall computing industry — the quantum computing sector.

See the more detailed discussion later in this paper.

Scope

This paper endeavors to provide a light, high-level view of what quantum computing is, what it has to offer, its general benefits, and what issues might arise and need to be addressed.

A previous paper, What Is a Quantum Computer?, covered the nature of the quantum computer itself.

The details of how to program or directly use a quantum computer are also beyond the scope of this paper.

Some of the details of quantum computing that are not included in this paper:

- Math. In general.

- Physics. In general.

- Linear algebra.

- Quantum mechanics.

- Quantum physics.

- Unitary transformation matrices.

- Density matrices.

- Details of particular quantum algorithms.

Many details found in typical, traditional introductions to quantum computing are omitted here, simply because the focus is a lighter, higher-level view of quantum computing rather than all of the detail normally hidden under the hood.

Quite a few details are mentioned in passing in this paper, though not in fine detail. In general, citations will be given when details can be found elsewhere.

And as mentioned in the caveat in the introduction, the focus of this paper is general-purpose quantum computers, not special-purpose quantum computing devices.

Quantum computing in a nutshell

Here we try to summarize as much of quantum computing as possible, as succinctly as possible. It’s a real challenge to be succinct, since a lot of important points must be made to capture the big picture of quantum computing in any truly meaningful manner.

- The target of quantum computing: production-scale practical real-world problems. Addressing real-world problems, at scale.

- The goal of quantum computing: production deployment of production-scale practical real-world quantum applications. The goal is met when these production-scale quantum applications are actually in production, at scale, and delivering their promised results.

- What is quantum computing? Quantum computing permits the evaluation of a vast number of alternatives all at the same time, in a single computation rather than a vast number of computations. This parallel evaluation of a large number of alternatives is known as quantum parallelism.

- What is a quantum computer? A quantum computer is a specialized electronic device which exploits the seemingly magical qualities of quantum effects governed by the principles of quantum mechanics to enable it to perform a fairly small calculation on a very large number of possible solution values all at once using a process known as quantum parallelism, producing a solution value in a very short amount of time.

- What is a practical quantum computer? A practical quantum computer supports production-scale practical real-world quantum applications and achieves dramatic quantum advantage over classical computing and delivers extraordinary business value which is well beyond the reach of classical computing.

- What is practical quantum computing? Practical quantum computing is what quantum computing becomes once practical quantum computers themselves come to fruition, coupled with all of the software, algorithms, and other components of quantum computing.

- The quantum computing sector. Some may consider quantum computing to be an industry or field, but for the purposes of this informal paper I consider quantum computing to be a sector of the overall computing industry.

- The grand challenge of quantum computing. Figure out how to exploit the capabilities of a quantum computer most effectively for a wide range of applications and for particular application problems to enable production-scale practical real-world quantum applications that achieve dramatic quantum advantage and deliver extraordinary business value.

- The twin goals of quantum computing: achieve dramatic quantum advantage and deliver extraordinary business value.

- Achieve dramatic quantum advantage. Not merely a relatively modest computational advantage, but a truly mind-boggling performance advantage.

- Deliver extraordinary business value. Even more important than raw computational performance advantage, a quantum computer needs to deliver actual business value, and in fact extraordinary business value.

- Quantum computing is the process of utilizing quantum computers to address production-scale practical real-world problems.

- The components of quantum computing. The major components, functions, areas, and topics of quantum computing.

- Quantum computer, quantum computation, and quantum computing. The machine, the work to accomplish, and the overall process of achieving it.

- Quantum computing is the use of a quantum computer to perform quantum computation. Putting it all together.

- Quantum algorithms and quantum applications. Besides the quantum computer itself, these are the two central types of objects that we focus on in quantum computing.

- Approaches to quantum computing. There are a variety.

- Three stages for development of quantum algorithms and quantum applications — prototyping, pilot projects, and production projects. Basic proof of concept for a subset of functions, proof of scaling for full functions, and full-scale project.

- Prototyping, experimentation, and evaluation. Quantum computing is new, untried, and unproven, so it needs to be tried and proven. And feedback given to researchers and vendors. And decisions must be made as to whether to pursue the technology or pass on it. Or maybe simply defer until a later date when the technology has matured some more.

- Proof of concept experiments. It’s probably a fielder’s choice whether to consider a project to be a prototype or a proof of concept. The intent is roughly the same — to demonstrate a subset of basic capabilities. There is also a narrower meaning for proof of concept, namely, to prove or demonstrate that some full feature or full capability can be successfully implemented.

- Prototyping vs. pilot project. Prototyping and pilot projects can have a lot in common, but they have very distinct purposes. Prototyping seeks to prove the feasibility of a small but significant subset of functions. A pilot project seeks to prove that a prototype can be scaled up to the full set of required functions and at a realistic subset of production scale.

- Production projects and production deployment. When a product or service is ready to be made operational, to begin fulfilling its promised features, we call that production deployment.

- Production deployment of a production-scale practical real-world quantum application. That’s the ultimate goal for a quantum computing project. It’s ready to be made operational to begin fulfilling its promise.

- In simplest terms, quantum computing is computation using a quantum computer.

- The secret sauce of a quantum computer is quantum parallelism — evaluating a vast number of alternative solutions all at once. A single modest computation executed once but operating on all possible values at the same time.

- Focus is on specialized calculations which are not easily performed on a classical computer, not because the calculation itself is hard, but because it must be performed a very large number of times.

- Perform calculations in mere seconds or minutes which might take classical computers many years, even centuries, or even millennia. Again, not because the individual calculation takes long, but because it must be performed a very large number of times.

- Essentially, a quantum computer is very good at searching for needles in haystacks. Very large haystacks, much bigger than even the largest classical computers could search. And doing it much faster.

- The optimal use of a quantum computer is for little data with a big solution space. A small amount of input data with a fairly simple calculation which will be applied to a very large solution space, producing a small amount of output. See little data with a big solution space below.

- But quantum parallelism is not automatic — the algorithm designer must cleverly deduce what aspect of the application can be evaluated in such a massively parallel manner. Not all applications or algorithms can exploit quantum parallelism, or exploit it fully.

- The degree of quantum parallelism is not guaranteed and will vary greatly between applications and algorithms and even depending on the input data.

- Combinatorial explosion — moderate number of parameters but very many combinations. These are the applications which should be a good fit for quantum computing. Matching combinatorial explosion with quantum parallelism.
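
To make the combinatorial explosion concrete, here is a minimal Python sketch that counts the combinations produced by a given number of yes/no parameters and estimates how long a classical computer would need to check them one at a time. The rate of one billion evaluations per second is an illustrative assumption, not a benchmark.

```python
# Assumed classical throughput: one billion candidate evaluations per second.
# This is an illustrative figure, not a measurement of any real machine.
EVALS_PER_SECOND = 1e9
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def combinations(num_yes_no_params: int) -> int:
    """Each yes/no parameter doubles the number of combinations."""
    return 2 ** num_yes_no_params


def classical_years(num_yes_no_params: int) -> float:
    """Years needed to evaluate every combination one at a time."""
    return combinations(num_yes_no_params) / EVALS_PER_SECOND / SECONDS_PER_YEAR


for n in (20, 50, 80):
    print(f"{n} parameters: {combinations(n):,} combinations, "
          f"about {classical_years(n):.3g} years")
```

Under that assumption, 50 yes/no parameters already take about two weeks, and 80 take tens of millions of years.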

- What production-scale practical real-world problems can a quantum computer solve — and deliver so-called quantum advantage, and deliver real business value? No clear answer or clearly-defined path to get an answer, other than trial and error, cleverness, and hard work. If you’re clever enough, you may find a solution. If you’re not clever enough, you’ll be unlikely to find a solution that delivers quantum advantage on a quantum computer.

- Heuristics for applicability of quantum computing to a particular application problem. Well, there really aren’t any yet. Much more research is needed. Prototyping and experimentation may yield some clues on heuristics and rules of thumb for what application problems can be matched to quantum algorithm solutions.

- What applications are suitable for a quantum computer? No guarantees, but at least there’s some potential.

- Quantum machine learning (QML). Just to highlight one area of high interest for applications of quantum computing, but details are beyond the scope of this paper.

- Quantum AI. Beyond quantum machine learning (QML) in particular, there are many open questions about the role of quantum computing in AI in general. This is all beyond the scope of this paper.

- Quantum AGI. Beyond quantum machine learning (QML) in particular and AI generally, there are many open questions about the role of quantum computing in artificial general intelligence (AGI). This is all beyond the scope of this paper. Is the current model for general-purpose quantum computing (gate-based) sufficiently powerful to compute all that the human brain and mind can compute, or is a more powerful architecture needed? I suspect the latter. This is mostly a speculative research topic at this stage.

- What can’t a quantum computer compute? There are plenty of complex math and logic problems which simply aren’t computable on even the best and most-capable quantum computers.

- Not all applications will benefit from quantum computing. Sad but true.

- Quantum-resistant problems and applications. Some problems or applications are not readily or even theoretically solvable using a quantum computer. These are sometimes referred to as quantum-resistant mathematical problems or quantum-resistant problems. Actually, there is one very useful and appealing sub-category of quantum-resistant mathematical problems, namely post-quantum cryptography (PQC), which includes the newest forms of cryptography which are explicitly designed so that they cannot be cracked using a quantum computer.

- Post-quantum cryptography (PQC). It is commonly believed that Shor’s factoring algorithm will be able to crack even 2048-bit and 4096-bit public encryption keys once we get sufficiently capable quantum computers. As a result, a search began for a new form of cryptography which wouldn’t be vulnerable to attacks using quantum computers. The basic idea is that all you need is a quantum-resistant mathematical problem, which, by definition, cannot be attacked using quantum computation. NIST (National Institute of Standards and Technology) is currently formalizing a decision in this area.

- How do you send input data to a quantum computer? You don’t…

- Any input data must be encoded in the quantum circuit for a quantum algorithm.
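
One common way to put a small input value into a circuit is basis encoding: every qubit starts at 0, and a flip (X) gate is applied to each qubit whose bit in the input value is 1. The sketch below assumes a hypothetical representation of a circuit as a Python list of ("x", qubit) tuples; it is not the API of any real quantum SDK.

```python
def basis_encode(value: int, num_qubits: int) -> list[tuple[str, int]]:
    """Return hypothetical ("x", qubit) gates that prepare `value` in binary.

    All qubits are assumed to start at 0; an X gate flips a qubit to 1.
    Qubit i holds bit i of the value (least significant bit on qubit 0).
    """
    if not 0 <= value < 2 ** num_qubits:
        raise ValueError("value does not fit in the given number of qubits")
    return [("x", i) for i in range(num_qubits) if (value >> i) & 1]


# Encode the input 5 (binary 101) on 3 qubits: flip qubits 0 and 2.
circuit = basis_encode(5, 3)
print(circuit)  # [('x', 0), ('x', 2)]
```

The input is not sent alongside the circuit; it becomes part of the circuit itself, which is why only small amounts of input data are practical.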

- Classical solution vs. quantum solution. An algorithm or application is a solution to a problem. A key task in quantum computing is comparing quantum solutions to classical solutions. This is comparing a quantum algorithm to a classical algorithm, or comparing a quantum application to a classical application.

- Quantum advantage expresses how much more powerful a quantum solution is compared to a classical solution. Typically expressed as either how many times faster the quantum computer is, or how many years, decades, centuries, millennia, or even millions or billions or trillions of years a classical computer would have to run to do what a quantum computer can do in mere seconds, minutes, or hours.

- Dramatic quantum advantage is the real goal. A truly mind-boggling performance advantage. One quadrillion or more times the performance of a classical solution.

- Quantum advantage — make the impossible possible, make the impractical practical. Just to emphasize that point more clearly.

- Fractional quantum advantage. A more modest advantage. Substantial or significant would be one million or more times the performance of a classical solution. Minimal would be 1,000 times.

- Three levels of quantum advantage — minimal, substantial or significant, and dramatic quantum advantage. Minimal — 1,000 X, substantial or significant — 1,000,000 X, and dramatic quantum advantage — one quadrillion X the best classical solution.
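
The three thresholds can be written down as a small lookup. This Python sketch simply restates the paper's 1,000 X, one million X, and one quadrillion X levels; the function name is illustrative.

```python
# Thresholds for the three levels of quantum advantage, checked from highest to lowest.
LEVELS = [
    (1e15, "dramatic"),                    # one quadrillion X
    (1e6, "substantial or significant"),   # one million X
    (1e3, "minimal"),                      # 1,000 X
]


def advantage_level(speedup: float) -> str:
    """Classify how many times faster a quantum solution is than the best classical one."""
    for threshold, name in LEVELS:
        if speedup >= threshold:
            return name
    return "below minimal"


print(advantage_level(5e6))  # substantial or significant
```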

- Net quantum advantage — discount by repetition needed to get accuracy. Quantum advantage can be intoxicating — just k qubits give you a computational leverage of 2^k, but… there’s a tax to be paid on that. Since quantum computing is inherently probabilistic, you can’t generally do a computation once and have an accurate answer. Rather, you have to repeat the calculation multiple or even many times, called circuit repetitions, shot count, or just shots, and do some statistical analysis to determine the likely answer. Those repetitions are effectively a tax or discount on the raw computational leverage, which gives you a net computational leverage, the net quantum advantage.
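
The repetition tax can be sketched as simple arithmetic. This minimal Python example assumes the simplest possible model: a raw leverage of 2^k for k qubits, divided by the number of shots. Since the actual degree of quantum parallelism depends on the algorithm, treat the result as an optimistic upper bound rather than a prediction.

```python
def raw_leverage(num_qubits: int) -> int:
    """Raw computational leverage: k qubits span 2**k values."""
    return 2 ** num_qubits


def net_leverage(num_qubits: int, shots: int) -> float:
    """Discount the raw leverage by the number of circuit repetitions (shots)."""
    return raw_leverage(num_qubits) / shots


# 40 qubits, 1,000 shots
print(f"{net_leverage(40, 1000):.3g}")  # 1.1e+09
```

For instance, 40 qubits with 1,000 shots gives a net leverage of roughly 1.1 billion, well short of the one quadrillion X needed for dramatic quantum advantage.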

- What is the quantum advantage of your quantum algorithm or application? It’s easy to talk about quantum advantage in the abstract, but what’s really needed is for quantum algorithm designers to explicitly state and fully characterize the quantum advantage of their quantum algorithms. It’s also important for applications using quantum algorithms to understand their own actual input size since the actual quantum advantage will depend on the actual input size.

- Be careful not to compare the work of a great quantum team to the work of a mediocre classical team. If you redesigned and reimplemented a classical solution using a technical team as elite as that required for a quantum solution, the apparent quantum advantage of the quantum solution over the new classical solution might be significantly less, or even negligible. Not necessarily, but very possible.

- To be clear, quantum parallelism and quantum advantage are a function of the algorithm. A quantum computer does indeed enable quantum parallelism and quantum advantage, but the actual and net quantum parallelism and quantum advantage are a function of the particular algorithm rather than the quantum computer itself.

- Quantum supremacy. Sometimes simply a synonym for quantum advantage. Otherwise, it expresses the fact that a quantum computer can accomplish a task that is impossible on a classical computer, or that would take so long, like many, many years, that it is outright impractical on a classical computer.

- Quantum computers already excel at generating true random numbers. Clear supremacy over classical computing.

- Random number-based quantum algorithms and quantum applications are actually commercially viable today.

- Quantum supremacy now: Generation of true random numbers.

- The goal is not simply to do things faster, but to make the impossible possible. To make the impractical practical. Many computations are too expensive today to be practical on a classical computer.

- Quantum effects and how they enable quantum computing. If you want to get down into the quantum mechanics which enable quantum computers and quantum computing.

- Quantum information. What it is. How it is represented. How it is stored. How it is manipulated in quantum computing. It’s a lot more than just the quantum equivalent of the classical binary 0 and 1.

- Quantum information science (QIS) as the umbrella field over quantum computing. Also covers quantum communication, quantum networking, quantum metrology (measurement), quantum sensing, and quantum information.

- Quantum information science and technology (QIST) — the science and engineering of quantum systems.

- Quantum state. How quantum information is represented, stored, and manipulated.

- Measurement — getting classical results from a quantum computer. Quantum state can be complicated, but measurement of qubits always returns a classical binary 0 or 1 for each measured qubit.

- Measurement — collapse of the wave function. The loss of the additional information of quantum state and product state beyond the simple binary 0 or 1 on measurement of qubits is known as the collapse of the wave function.
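
A classical simulation can mimic what measurement does to a small quantum state. In this minimal Python sketch, the state is a dictionary mapping each bit string to its amplitude. The probability of each outcome is the squared magnitude of its amplitude, and after one outcome is drawn the state collapses to that outcome alone. This is a toy model for illustration, not how a real quantum computer is programmed.

```python
import random


def measure(amplitudes: dict[str, complex],
            rng: random.Random) -> tuple[str, dict[str, complex]]:
    """Simulate measuring all qubits of a small state.

    Each outcome's probability is the squared magnitude of its amplitude.
    The result is a classical bit string (one 0 or 1 per qubit), and the
    state collapses so that only the observed outcome remains.
    """
    outcomes = list(amplitudes)
    weights = [abs(a) ** 2 for a in amplitudes.values()]
    result = rng.choices(outcomes, weights=weights, k=1)[0]
    collapsed = {result: 1.0 + 0j}
    return result, collapsed


# Two qubits in an equal mix of 00 and 11 (amplitude 1/sqrt(2) each).
state = {"00": 2 ** -0.5, "11": 2 ** -0.5}
result, after = measure(state, random.Random(7))
print(result, after)
```

Running it repeatedly yields 00 about half the time and 11 about half the time, but never 01 or 10, since those outcomes have zero amplitude. The amplitudes themselves are never returned; only the single bit string survives.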

- Extracting useful results from a massively parallel quantum computation.

- Quantum computers do exist today, but only in fairly primitive and simplistic form.

- They are evolving rapidly, but it will still be a few more years before they can be useful for practical applications.

- A practical quantum computer would be ready for production deployment to address production-scale practical real-world problems. Just to clarify the terminology — we do have quantum computers today, but they haven’t achieved the status of being practical quantum computers since they are not yet ready for production deployment to address production-scale practical real-world problems.

- Practical quantum computing isn’t really near, like within one, two, or three years. Although quantum computers do exist today, they are not ready for production deployment to address production-scale practical real-world problems. And it’s unlikely that they will be in the next one, two, or three years. Sure, there may be some smaller niches where they can actually be used productively, but those would be the exception rather than the rule. Four to seven years is a better bet, and even then only for moderate benefits.

- Why aren’t quantum computers able to address production-scale practical real-world problems today? Quite a long list of gating factors.

- Limitations of quantum computing. Quantum computing has great potential from a raw performance perspective, but does have its limits. And these are not mere limitations of current quantum computers, but of the overall architecture of quantum computing. Limited coprocessor function. None of the richness of classical computing. Complex applications must be couched in terms of physics problems. Etc.

- Limitations of current quantum computers. These are real limitations, but only of current quantum computers. Future quantum computers will be able to advance beyond these limitations. Limited qubit fidelity. Limited qubit connectivity. Limited circuit size.

- Two most urgent needs in quantum computing: higher qubit fidelity and full qubit connectivity. Overall, the most urgent need is to support more-sophisticated quantum algorithms. Supporting larger quantum circuits is a runner-up, but won’t matter until qubit fidelity and connectivity are addressed.

- Quantum computation must be relatively modest. Must be simple. Must be short. No significant complexity. The computational complexity comes from quantum parallelism — performing this relatively modest computation a very large number of times.

- No, you can’t easily migrate your classical algorithms to run on a quantum computer. You need a radically different approach to exploit the radically different capabilities of quantum computers.

- Lack of fine granularity for phase angles may dramatically limit quantum computing. For example, Shor’s factoring algorithm may work fine for factoring smaller numbers, but not for much larger numbers such as 1024-bit, 2048-bit, and 4096-bit encryption keys.

- Getting to commercialization — pre-commercialization, premature commercialization, and finally commercialization — research, prototyping, and experimentation.

- Premature commercialization is a really bad idea. The temptation will be strong. Many will resist. But some will succumb. A recipe for disaster. Expectations will likely be set (or merely presumed) too high and fail to be met.

- Minimum viable product (MVP). What might qualify for an initial practical quantum computer. The minimal requirements.

- Initial commercialization stage — C1.0. Where we end up for the initial commercial practical quantum computer.

- Subsequent commercialization stages. Progress once the initial commercialization stage, C1.0, is completed.

- But commercialization is not imminent — still years away from even commencing. The preceding sections were intended to highlight how commercialization might or should unfold, but should not be construed as implying that commercialization is in any way imminent. It is still years away from even commencing. The next few years, at a minimum, will still be the pre-commercialization stage for quantum computing.

- Quantum Ready. Prepare in advance for the eventual arrival of practical quantum computing.

- Quantum Ready — but for whom and when? Different individuals and different organizations have different needs and operate on different time scales. Not everybody needs to be Quantum Ready at the same time.

- Quantum Aware — what quantum computing can do, not how it does it. A broader audience needs to understand quantum computing at a high level — its general benefits — but doesn’t need the technical details of how quantum computers actually work or how to program them.

- The technical elite and lunatic fringe will always be ready, for anything.

- But even with the technical elite and lunatic fringe, be prepared for the potential for several false starts. Too often, the trick with any significant new technology is that it may take several false starts before the team hits on the right mix of technology, resources, and timing. This is why it’s best not to get the whole rest of the organization Quantum Ready until the technical elite and lunatic fringe have gotten all of the kinks out of the new and evolving quantum computing technology.

- Quantum Ready — on your own terms and on your own timeline. Corporate investment and deployments of personal computers made sense in 1992, but not in 1980. Don’t jump the gun. Wait until your needs and the technology are in sync.

- Timing is everything — when will it be time for your organization to dive deep on quantum computing? Each organization will be different. Different needs. Different goals. Different resources.

- Your organizational posture towards technological advances. Quantum computing may be different, but the context is the organization’s overall posture towards technological advances.

- Each organization must create its own quantum roadmap. Each organization should define its own roadmap of milestones for preparation for initial adoption of quantum computing. This is a set of milestones which must be achieved before the organization can achieve practical quantum computing.

- Components of a quantum computer. At a high level. See the previous paper for greater detail.

- Access to a quantum computer. Generally over a network connection. Commonly a cloud-based service.

- Quantum service providers. A number of cloud service providers are quantum service providers, providing cloud access to quantum computers. Including IBM, Amazon, Microsoft, and Google.

- Having your own in-house quantum computer is not a viable option at this stage. In theory, customers could have their own dedicated quantum computers in their own data centers, but that’s not appropriate at this stage since quantum computers are still under research and evolving too rapidly for most organizations to make an investment in dedicated hardware. And customers are focused on prototyping and experimentation rather than production deployment. There are some very low-end quantum computers, such as 2-qubit and 3-qubit machines from SpinQ, which can be used for in-house experimentation, but those are very limited configurations.

- Job scheduling and management. One of the critical functions for the use of networked quantum computers is job scheduling and management. Requests are coming into a quantum computer system from all over the network, and must be scheduled, run, and managed until their results can be returned to the sender of the job.
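
At its simplest, job scheduling is a queue. The Python sketch below is a hypothetical first-in, first-out scheduler for a single shared quantum computer: jobs arrive from many senders, wait their turn, run, and have their results recorded for return to the sender. The class and field names are illustrative, and production services likely add much more, such as priorities and usage limits.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    sender: str
    circuit: list  # hypothetical circuit representation
    shots: int


class JobScheduler:
    """First-in, first-out queue of jobs for one shared quantum computer."""

    def __init__(self, run_circuit):
        self.queue = deque()
        self.run_circuit = run_circuit  # callable(circuit, shots) -> result
        self.results = {}               # job_id -> (sender, result)

    def submit(self, job: Job) -> None:
        """Accept a job arriving over the network; it waits at the back of the queue."""
        self.queue.append(job)

    def run_next(self) -> bool:
        """Run the oldest waiting job; return False if the queue is empty."""
        if not self.queue:
            return False
        job = self.queue.popleft()
        self.results[job.job_id] = (job.sender, self.run_circuit(job.circuit, job.shots))
        return True


# Usage with a stand-in for the hardware that just echoes the shot count.
scheduler = JobScheduler(lambda circuit, shots: {"shots": shots})
scheduler.submit(Job("job-1", "alice", [("h", 0)], 100))
scheduler.submit(Job("job-2", "bob", [("x", 0)], 200))
while scheduler.run_next():
    pass
print(scheduler.results)
```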

- Local runtime for tighter integration of classical and quantum processing. Classical application code can be packaged and sent to the quantum computer system where the classical application code can run on the classical computer that is embedded inside of the overall quantum computer system, permitting the classical application code to rapidly invoke quantum algorithms locally without any of the overhead of a network connection. Final results can then be returned to the remote application.

- Where are we at with quantum computing? In a more abstract sense. Still at the early stages. Lots of research needed. Still in the pre-commercialization stage.

- Current state of quantum computing. I decided that would be too much distracting detail for this paper. The abstract state of affairs should be sufficient for this paper.

- Much research is still needed. Theoretical, experimental, and applied. Basic science, algorithms, applications, and tools.

- How much more research is required? A lot more research is still needed before quantum computing is ready to exit from the pre-commercialization stage and begin the commercialization stage. There’s no great clarity as to how much more research is needed. Most answers to this question are more abstract than concrete, although there are a number of concrete milestones.

- Quantum computing is still in the pre-commercialization phase. Focus is on research, prototyping, and experimentation.

- Not ready for production-scale practical real-world quantum applications. More capabilities and more refinement are needed.

- Production deployment is not appropriate at this time. Not for a few more years, at least.

- More suited for the lunatic fringe who will use anything than for normal, average technical staff. It’s still the wild west out there. Great and exciting for some, but not for most.

- Still a mere laboratory curiosity. Not ready for production-scale practical real-world quantum applications or production deployment.

- No 40-qubit algorithms to speak of. Where are all of the 40-qubit algorithms?

- Beware of premature commercialization. The technology just isn’t ready yet. Much more research is needed. Much more prototyping and experimentation is needed.

- Doubling down on pre-commercialization is the best path forwards. Research, prototyping, and experimentation should be the priorities, not premature commercialization, not production deployment.

- The ENIAC Moment — proof that quantum computer hardware is finally up to the task of real-world use. The first time a production-scale practical real-world quantum application can be run in something resembling a production environment. Proves that quantum computer hardware is finally up to the task of real-world use. We’re not there yet, not even close.

- The time to start is not now unless you’re the elite, the lunatic fringe. Most normal technical teams and management planners should wait a few years, specifically until the ENIAC Moment has occurred. Everything learned before then will need to be discarded — the ENIAC Moment will be the moment when we can finally see the other side of the looking glass, where the real action will be and where the real learning needs to occur.

- At least another two to three years before quantum computing is ready to begin commercialization. Could even be longer. Even four to five years. Not likely to be less.

- Little of current quantum computing technology will end up as the foundation for practical quantum computers and practical quantum computing. Rapid evolution and radical change. Fueled by ongoing research. Wait a few years and everything will have changed.

- Current quantum computing technology is the precursor for practical quantum computers and practical quantum computing. That doesn’t mean that the current technology is a waste or a mistake — it does provide a foundation for further research, prototyping, and experimentation, which provides feedback into further research and ideas for future development.

- Quantum computers cannot handle Big Data. They can handle only a small amount of input data and generate only a small amount of output data. None of the Three V’s of Big Data are appropriate for quantum computing — volume, velocity, variety.

- Quantum computers cannot handle complex logic. Only very simple calculations, but applied to a very large number of possible solutions.

- Quantum computers cannot handle rich data types. Only very simple numeric calculations.

- Quantum computers are inherently probabilistic rather than absolutely deterministic. Not suitable for calculations requiring absolute precision, although statistical processing can approximate determinism.

- Quantum computers are a good choice when approximate answers are acceptable. Especially in the natural sciences or where statistical approximations are used, probabilistic results can be quite acceptable. But not good for financial transactions where every penny counts.

- Statistical processing can approximate determinism, to some degree, even when results are probabilistic. Run the quantum algorithm a bunch of times and statistically analyze the results to identify the more likely, deterministic result.
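
The idea can be sketched in a few lines of Python. Here a hypothetical noisy_run function stands in for one shot of a quantum algorithm: most of the time it returns the correct answer, 101, and otherwise a random 3-bit value. Counting many shots and picking the most frequent result recovers the correct answer, even though individual shots are unreliable. The 30 percent error rate and the answer 101 are made-up values for illustration.

```python
import random
from collections import Counter


def noisy_run(rng: random.Random, correct: str = "101", error_rate: float = 0.3) -> str:
    """Stand-in for one shot: usually the correct answer, otherwise a random 3-bit value."""
    if rng.random() < error_rate:
        return format(rng.randrange(8), "03b")
    return correct


def most_likely_result(shots: int, seed: int = 1) -> tuple[str, Counter]:
    """Run many shots and return the most frequent result plus the full counts."""
    rng = random.Random(seed)
    counts = Counter(noisy_run(rng) for _ in range(shots))
    return counts.most_common(1)[0][0], counts


best, counts = most_likely_result(1000)
print(best, counts.most_common(3))
```

The more shots, the more clearly the correct answer stands out, which is exactly the repetition cost that discounts raw quantum advantage.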

- As challenging as quantum computer hardware is, quantum algorithms are just as big a challenge. The rules for using a quantum computer are rather distinct from the rules governing classical computers, so entirely new approaches are needed for quantum algorithms.

- The process of designing quantum algorithms is extremely difficult and challenging. The final algorithm may be very simple, but getting to that result is a great challenge. And testing is really difficult as well.

- Algorithmic complexity, computational complexity, and Big-O notation. Algorithmic complexity and computational complexity are roughly synonyms and refer to the calculation of the amount of work that an algorithm will need to perform to process an input of a given size. Generally, the amount of work will vary based on the size of the input data. Big-O notation is used to summarize the calculation of the amount of required work.

- Quantum speedup. Moving from a classical algorithm with a high degree of algorithmic complexity to a quantum algorithm with a low or lower degree of algorithmic complexity achieves what is referred to as a speedup or quantum speedup.

- Key trick: Reduction in computational complexity. One of the key secret tricks for designing quantum algorithms is to come up with clever techniques for reduction in computational complexity — turn a hard problem into an easier problem. Yes, it is indeed harder than it sounds, but the benefits can be well worth the effort.
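
Grover's search algorithm is a well-known example of this kind of reduction; it is not discussed in this paper and is used here only as an illustration. Searching N unsorted items classically takes up to N checks, O(N), while Grover's algorithm needs roughly (pi/4) times the square root of N queries, O(sqrt(N)). This Python sketch only compares those two counts; it does not run Grover's algorithm.

```python
import math


def classical_queries(n: int) -> int:
    """Unstructured search checks items one at a time: O(N) in the worst case."""
    return n


def grover_queries(n: int) -> int:
    """Grover's algorithm needs about (pi/4) * sqrt(N) queries: O(sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n))


for k in (20, 40):
    n = 2 ** k
    print(f"N = 2^{k}: classical {classical_queries(n):,} vs Grover ~{grover_queries(n):,}")
```

This is a quadratic speedup: the gap widens as N grows, though the repetition (shot) tax on net quantum advantage still applies.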

- We need real quantum algorithms on real machines (or real simulators) — not hypothetical or idealized. We’ve had quite a few years of papers published based on quantum algorithms for hypothetical or idealized quantum computers rather than real machines (or real simulators configured to reflect realistic expectations for real machines in the next few years). That has led to unrealistic expectations for what to expect from quantum computers and quantum algorithms.

- What is a qubit? Don’t worry about it at this stage! At this stage it isn’t necessary to get into esoteric details such as qubits and how they might be related to the classical bits of a classical computer. All that really matters is quantum parallelism. Qubits are just an element of the technology needed to achieve quantum parallelism.

- No, a qubit isn’t comparable to a classical bit. Any more than a car is comparable to a bicycle or a rocket is comparable to an airplane.

- A qubit is a hardware device comparable to a classical flip flop. It is used to store and manipulate a unit of quantum information represented as a unit of quantum state.

- Superposition, entanglement, and product states enable quantum parallelism. The details are beyond the scope of this paper, but the concepts of superposition, entanglement, and product states are what combine to enable quantum parallelism, the ability to operate on 2^k distinct values in parallel with only k qubits.

- Quantum system — in physics. In physics, an isolated quantum system is any collection of particles which have a quantum state which is distinct from the quantum state of other isolated quantum systems. An unentangled qubit is a quantum system. A collection of entangled qubits is also a quantum system.

- Quantum system — a quantum computer. In addition to the meaning of quantum system in physics, a quantum system in quantum computing can also simply refer to a quantum computer or a quantum computer system.

- Computational leverage. How many times faster a quantum algorithm would be compared to a comparable classical algorithm. Such as 1,000 X, one million X, or even one quadrillion X a classical solution.

- k qubits enable a solution space of 2^k quantum states. The superposition, entanglement, and product states of k qubits combine to enable a solution space of 2^k quantum states.

- Product states are the quantum states of quantum parallelism. When superposition and entanglement of k qubits enables 2^k quantum states, each of those unique 2^k quantum states is known as a product state. These 2^k product states are the unique values used by quantum parallelism.

- Product states are the quantum states of entangled qubits. Simply stating it more explicitly, product state is only meaningful when qubits are entangled. An unentangled (isolated) qubit will not be in a product state.

- k qubits enable a solution space of 2^k product states. More properly, a solution space for k qubits is composed of 2^k product states. Each product state is a unique value in the solution space.
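
The 2^k count is easy to check by listing every value that k qubits can represent. This Python sketch enumerates them as bit strings and also computes the amplitudes of an equal superposition, a common starting point for quantum parallelism (obtained by applying a Hadamard gate to every qubit, starting from all zeros), in which every one of the 2^k values carries the same amplitude. A classical listing like this grows exponentially, which is why classical simulation becomes infeasible for large k.

```python
import math


def all_values(num_qubits: int) -> list[str]:
    """List the 2**k distinct bit-string values that k qubits can represent."""
    return [format(i, f"0{num_qubits}b") for i in range(2 ** num_qubits)]


def uniform_superposition(num_qubits: int) -> dict[str, float]:
    """Amplitudes after a Hadamard gate on every qubit, starting from all zeros:
    each of the 2**k values gets the same amplitude 1/sqrt(2**k)."""
    amplitude = 1 / math.sqrt(2 ** num_qubits)
    return {value: amplitude for value in all_values(num_qubits)}


print(all_values(3))  # ['000', '001', '010', '011', '100', '101', '110', '111']
```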

- Qubit fidelity. How reliable the qubits are. Can they maintain their quantum state for a sufficiently long period of time and can operations be performed on them reliably.

- Nines of qubit fidelity. Express the qubit reliability as a percentage and then count the number of leading nines in the percentage, as well as the fraction of a nine after the last nine. More nines is better — higher fidelity.
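
The counting rule can be sketched in Python. This version takes the percentage as a string, to avoid floating-point rounding, counts the leading nines, and then adds the next digit as a tenth of a nine, so 99.5 percent counts as 2.5 nines. Treating the next digit as the fractional part is one reading of "the fraction of a nine," assumed here for illustration.

```python
def nines_of_fidelity(percent: str) -> float:
    """Count leading nines in a fidelity percentage such as "99.95", then add
    the next digit as a fraction of a nine (an assumed convention).

    Expects a percentage with a two-digit whole part (10 up to, not including, 100).
    """
    if not 10 <= float(percent) < 100:
        raise ValueError("expected a percentage from 10 up to (not including) 100")
    digits = percent.replace(".", "")
    count = 0
    while count < len(digits) and digits[count] == "9":
        count += 1
    fraction = int(digits[count]) / 10 if count < len(digits) else 0.0
    return count + fraction


for p in ("98.7", "99.5", "99.9", "99.95"):
    print(p, "->", nines_of_fidelity(p))
```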

- Qubit connectivity. How easily two qubits can operate on each other. Usually it has to do with the physical distance between the two qubits — if they are adjacent, they generally can operate on each other most efficiently, but if they are separated by some distance, it may be necessary to move one or both of them so that they are physically adjacent, using so-called SWAP networks, and such movement takes time and can introduce further errors which impact qubit fidelity. Some qubit technologies, such as trapped-ion qubits, support full any-to-any qubit connectivity, which avoids these problems.

- Any-to-any qubit connectivity is best. The best performance and best qubit fidelity (fewest errors) comes with true, full, any-to-any qubit connectivity. Any two qubits can interact, regardless of the distance between them. Not all qubit technologies support it — notably, superconducting transmon qubits do not, but some do — such as trapped-ion qubits.

- Full qubit connectivity. Generally a synonym for any-to-any qubit connectivity.

- SWAP networks to achieve full qubit connectivity — works, but slower and lower fidelity. SWAP networks are sometimes needed to achieve full qubit connectivity. This approach does work, but is slower and causes lower qubit fidelity. If two qubits are not physically adjacent and any-to-any qubit connectivity is not supported, a SWAP network will be needed to shuffle the quantum states of the two qubits to a pair of qubits which are physically adjacent.
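
For a simple picture of the cost, assume the qubits are arranged in a single line where only neighbors can interact (an assumed layout; real chips use various topologies). Moving one qubit's state next to another then takes one SWAP per intermediate position, as this Python sketch shows. Each SWAP is usually built from three two-qubit CNOT gates, which is where the extra time and errors come from.

```python
def swaps_to_make_adjacent(a: int, b: int) -> list[tuple[int, int]]:
    """On a line of qubits where only neighbors can interact, move the state of
    qubit `a` toward qubit `b` one position at a time until they are adjacent.
    Returns the SWAPs between neighboring positions, in order."""
    swaps = []
    step = 1 if b > a else -1
    position = a
    while abs(b - position) > 1:
        swaps.append((position, position + step))
        position += step
    return swaps


print(swaps_to_make_adjacent(0, 4))  # [(0, 1), (1, 2), (2, 3)]
```

With any-to-any qubit connectivity, the list would always be empty.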

- May not be able to use all of the qubits in a quantum computer. Qubit fidelity and issues with qubit connectivity can limit how many qubits can be used in a single quantum computation.

- Quantum Volume (QV) measures how many of the qubits you can use in a single quantum computation. Technically, it tells you how many quantum states can be used in a single quantum computation without excessive errors. Since k qubits represent 2^k quantum states, log2(QV), which is k, tells you how many qubits you can use. For example, a Quantum Volume of 64 means 6 qubits.

- Why is Quantum Volume (QV) valid only up to about 50 qubits? Measuring the Quantum Volume (QV) metric for a quantum computer requires simulating quantum circuits on a classical quantum simulator, which is constrained by memory capacity. Since simulating quantum circuits larger than about 50 qubits is not feasible, measuring a Quantum Volume (QV) greater than 2⁵⁰ is not feasible either.

- Programming model — the essence of programming a quantum computer. The details are beyond the scope of this paper, but the rules for how to program a quantum computer are very technical and require great care.

- Ideal quantum computer programming model not yet discovered. Research and innovation are ongoing. Don’t get too invested in what we have today, since it will likely be obsolete in anywhere from a few to ten years.

- Quantum applications and quantum algorithms. How a quantum computer is used.

- Quantum applications are a hybrid of quantum computing and classical computing. Most of the application, especially handling large volumes of data and complex logic, is classical code, while select compute-intensive functions can be implemented as quantum algorithms.

- Basic model for a quantum application. The major steps in the process.

- Post-processing of the results from a quantum algorithm. Put the results of the quantum algorithm in a form that classical application code can handle.

- Quantum algorithm vs. quantum circuit. In computer science we would say that the algorithm is the specification of the logic while the circuit is the implementation of the specification for the logic. A quantum circuit is the exact sequence of quantum logic gates which will be sent to the quantum processing unit (QPU) for execution. The algorithm focuses on the logic, while the circuit focuses on the execution.

- Quantum circuits and quantum logic gates — the code for a quantum computer.

- Generative coding of quantum circuits rather than hand-coding of circuits. Fully hand-coding the quantum circuits for algorithms is absolutely out of the question. What is needed is a more abstract representation of an algorithm. Generative coding of quantum circuits provides this level of abstraction. Any algorithm designed to be scalable must be generated dynamically using classical code which is parameterized with the input size or size of the simulation, so that as the input size grows or the simulation size grows, a larger number of quantum logic gates will be generated to accommodate the expanded input size.

- Algorithmic building blocks, design patterns, and application frameworks are critical to successful use of a quantum computer. The level immediately above the programming model. But beyond the scope of this paper, which focuses on quantum computing overall.

- Quantum Fourier transform (QFT) and quantum phase estimation (QPE) are critical to successful use of a quantum computer. These algorithmic building blocks are profoundly critical to effectively exploiting the computational power of a quantum computer, such as for quantum computational chemistry, but beyond the scope of this paper, which focuses on quantum computing overall.

- Quantum computer, quantum processor, quantum processing unit, QPU, and quantum computer system are roughly synonymous, with a few distinctions.

- Quantum computer as a coprocessor rather than a full-function computer. Most quantum application code will run on a classical computer with only selected functions offloaded to a quantum processor.

- Quantum computers cannot fully replace classical computers. Eventually they will be merged with classical computers as a universal quantum computer, but that’s far in the future.

- Quantum applications are mostly classical code with only selected portions which run on a quantum computer. Most application logic either cannot be performed on a quantum computer at all, or wouldn’t achieve any meaningful quantum advantage over performing it on a classical computer. Only selected portions of the quantum application would be coded as quantum algorithms and execute on a quantum computer.

- No quantum operating system. As previously mentioned, a quantum computer is not a full-function computer as a classical computer is. Rather, it acts as a coprocessor, similar to how a graphics processing unit (GPU) operates. As such, there is no quantum operating system per se.

- Coherence, decoherence, and coherence time. Coherence is the ability of a quantum computer to remain in its magical quantum state where quantum effects can be maintained in a coherent manner which enables the quantum parallelism needed to fully exploit quantum computation. Decoherence is simply the loss of coherence. Coherence time is generally fairly short for many quantum computer technologies, limiting the size and complexity of quantum algorithms. Some technologies are more coherent than others, meaning they have a longer coherence time, which enables greater size and complexity of quantum algorithms.

- Gate execution time — determines how many gates can be executed within the coherence time.

- Maximum quantum circuit size — limits size of quantum algorithms.

- Need to summarize capability requirements for quantum algorithms and applications. Clearly document what capabilities a quantum computer needs to possess to support a given quantum algorithm or quantum application.

- Matching the capability requirements for quantum algorithms and applications to the capabilities of particular quantum computers. Not all quantum algorithms and applications can run on all quantum computers, so both the requirements and the capabilities must be clearly documented and then matched against each other.

- A variety of quantum computer types and technologies. General-purpose and special-purpose types. A variety of qubit technologies.

- General-purpose quantum computers. Can be applied to many different types of applications.

- Special-purpose quantum computers and special-purpose quantum computing devices. Beyond the scope of this paper, which focuses on general-purpose quantum computers, also referred to as universal gate-based quantum computers.

- Different types and technologies of quantum computers may require distinctive programming models. General-purpose quantum computers and special-purpose quantum computers tend to have different programming models — and their quantum algorithms won’t be compatible. Different types of special-purpose quantum computers will tend to have different programming models. Some qubit technologies may indeed have compatible programming models, but some may not.

- Don’t get confused by special-purpose quantum computing devices that promise much more than they actually can deliver.

- Simulators for quantum computers. You don’t need a real quantum computer to run relatively simple quantum algorithms. You can simulate a quantum computer on a classical computer using what is known as a classical quantum simulator. But complex quantum algorithms will run very slowly or not at all, since the memory and time needed to simulate a quantum computer grow exponentially with the number of qubits; after all, the whole point of a quantum computer is to greatly outperform even the best classical supercomputers. Simulators are also good for debugging, and for experimenting with improved hardware before it is even available.

- Quantum computers — real and simulated are both needed. Both are important for quantum computing. Simulated quantum computers are needed for development and debugging. Real quantum computers are great for production, but less useful for development and debugging.

- Focus on using simulators rather than real quantum computers until much better hardware becomes available. Current quantum computers have too many shortcomings to be very useful or productive in the near term. It would be more productive for most people to use classical quantum simulators rather than real quantum computers for most of their work.

- Noise, errors, error mitigation, error correction, logical qubits, and fault tolerant quantum computing. Noise can cause errors. Sometimes errors can be mitigated and corrected. Sometimes they just have to be tolerated. But noise is just a fact of life for quantum computing, for the foreseeable future. Eventually we will get to true fault tolerance, but not soon. Near-perfect qubits will help sooner.

- Perfect logical qubits. The holy grail of quantum computing. Regular qubits are noisy and error-prone, but the theory, the generally-accepted belief, is that quantum error correction will fully overcome that limitation.

- NISQ — Noisy Intermediate-Scale Quantum devices (computers). Official acknowledgement that noise is a fact of life for quantum computing, for the foreseeable future.

- Near-perfect qubits as a stepping stone to fault-tolerant quantum computing. Dramatically better than current NISQ devices, even if still well short of true fault-tolerant quantum computing.

- Quantum error correction (QEC) remains a distant promise, but not critical if we have near-perfect qubits. Not within the next few years. But not critical as long as we achieve near-perfect qubits within a year or two.

- Circuit repetitions as a poor man’s approximation of quantum error correction. By executing the same quantum circuit a bunch of times and then examining the statistical distribution of the results, it is generally possible to determine which of the various results is the most likely one, meaning the result which occurs most frequently.

- Beyond NISQ — not so noisy or not intermediate-scale. NISQ is technically inaccurate for many current (and future) quantum computers. I’ve proposed some alternative terms to supplement NISQ.

- When will the NISQ era end and when will the post-NISQ era begin? Maybe just a year or two, maybe three. Near-perfect qubits would happen first. Perfect logical qubits would be several years after that. Hundreds of qubits are coming later this year (IBM Osprey), but the combination of hundreds of near-perfect qubits might take two or three years, maybe four.

- Three stages of adoption for quantum computing — The ENIAC Moment, Configurable packaged quantum solutions, and The FORTRAN Moment. The ENIAC Moment is the first production-scale quantum application. Configurable packaged quantum solutions bring widespread use of quantum applications. The FORTRAN Moment is when it is finally easy for most organizations to develop their own quantum applications.

- Configurable packaged quantum solutions are the greatest opportunity for widespread adoption of quantum computing. Combine prewritten algorithms and code with the ability to dynamically customize both using high-level configuration features rather than needing to dive deep into actual quantum algorithms or application code. This will likely be the main method by which most organizations exploit quantum computers.

- The FORTRAN Moment — It is finally easy for most organizations to develop their own quantum applications. Advent of a truly high-level programming model, rich collection of high-level algorithmic building blocks, plethora of design patterns, numerous rich application frameworks, and many examples of working and deployable quantum algorithms and applications.

- Quantum networking and quantum Internet — research topics, not a near-term reality.

- Distributed quantum computing — longer-term research topic. Just as with quantum networking, distributed quantum computing is a longer-term research topic rather than a current reality.

- Distributed quantum applications. Even though distributed quantum computing is not a near-term reality, distributed quantum applications are quite feasible, since they’re mostly classical code, which can be distributed today. But each quantum algorithm works independently, since there is no quantum networking yet.

- Distributed quantum algorithms — longer-term research topic. Although quantum applications can be distributed today, quantum algorithms cannot be distributed at present, since there is no quantum networking to connect them and their quantum state.
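The bullet on k qubits and 2^k product states can be made concrete with a short Python sketch that simply lists every product state as a bit string. It is only an enumeration of labels, not a simulation of qubits, and the function name `product_states` is purely illustrative.

```python
from itertools import product

def product_states(k: int) -> list[str]:
    """List every product state (unique value) for k qubits as a bit string."""
    return ["".join(bits) for bits in product("01", repeat=k)]

states = product_states(3)
print(len(states))  # 8
print(states)       # ['000', '001', '010', '011', '100', '101', '110', '111']
```

Each additional qubit doubles the length of this list, which is the sense in which the solution space grows as 2^k.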
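The bullet on nines of qubit fidelity leaves open exactly how to count a fraction of a nine. This is a minimal sketch, assuming the fraction is measured logarithmically as the negative base-10 logarithm of the error rate (one minus the fidelity), which gives whole numbers for 99%, 99.9%, 99.99%, and so on. The function name `nines` is hypothetical.

```python
import math

def nines(fidelity_percent: float) -> float:
    """Nines of fidelity, including any fractional nine, computed as
    -log10(error rate) where error rate = 1 - fidelity (assumed convention)."""
    error_rate = 1.0 - fidelity_percent / 100.0
    if error_rate <= 0:
        return math.inf  # a perfect qubit would have unlimited nines
    return -math.log10(error_rate)

for f in (99.0, 99.9, 99.95, 99.99):
    print(f"{f}% -> {nines(f):.2f} nines")
```

Under this assumed convention, 99.95% works out to about 3.3 nines rather than a literal three and a half; more informal conventions are also possible.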
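The two bullets on Quantum Volume (QV) can be illustrated with a bit of arithmetic. This sketch assumes QV is an exact power of two, and that the classical simulator stores the full state vector with 16 bytes (one double-precision complex number) per amplitude; real simulators may use other representations. Both function names are hypothetical.

```python
def usable_qubits(quantum_volume: int) -> int:
    """Quantum Volume is 2^k, so log2(QV) = k, the number of usable qubits.
    bit_length() - 1 is an exact integer log2 for powers of two."""
    return quantum_volume.bit_length() - 1

def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2^n amplitudes, 16 bytes each
    (assuming double-precision complex numbers)."""
    return (2 ** num_qubits) * bytes_per_amplitude

print(usable_qubits(64))  # 6
for n in (30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.0f} GiB")
```

Under these assumptions, 30 qubits need 16 GiB, while 50 qubits need about 16 PiB, and every additional qubit doubles that again, which is why simulation-based metrics such as QV run out of road at around 50 qubits.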
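The bullets on qubit connectivity and SWAP networks describe shuffling quantum states between neighboring qubits. This is a minimal sketch assuming a simple linear chain of physical qubits where only neighbors can interact. It moves one logical qubit toward another, one SWAP at a time, and records each SWAP. Real compilers use much smarter routing, and `route_adjacent` is a hypothetical name.

```python
def route_adjacent(layout: list[str], a: str, b: str) -> list[tuple[int, int]]:
    """Move logical qubit `a` toward `b` along a linear chain of physical
    qubits until they are neighbors, recording each SWAP performed.
    layout[p] names the logical qubit (quantum state) held by physical qubit p."""
    swaps = []
    pa, pb = layout.index(a), layout.index(b)
    step = 1 if pb > pa else -1
    while abs(pb - pa) > 1:
        nxt = pa + step
        layout[pa], layout[nxt] = layout[nxt], layout[pa]  # one SWAP gate
        swaps.append((pa, nxt))
        pa = nxt
    return swaps

chain = ["q0", "q1", "q2", "q3", "q4"]
swaps = route_adjacent(chain, "q0", "q4")
print(swaps)  # [(0, 1), (1, 2), (2, 3)]
print(chain)  # ['q1', 'q2', 'q3', 'q0', 'q4']
```

Each SWAP is commonly implemented as three CNOT gates, which is where the extra time and the extra errors come from; any-to-any connectivity would need none of them.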
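The bullet on generative coding of quantum circuits argues that circuits should be generated by classical code parameterized by input size. As a sketch, the following Python generates the textbook quantum Fourier transform (QFT) circuit for any number of qubits. Gates are plain tuples in a made-up format, not the API of any particular quantum SDK.

```python
import math

def qft_gates(n: int) -> list[tuple]:
    """Generate the textbook QFT circuit for n qubits as a list of
    (gate_name, params...) tuples. The circuit grows as n grows."""
    gates = []
    for j in range(n):
        gates.append(("H", j))
        for k in range(j + 1, n):
            # controlled phase rotation by pi / 2^(k - j), control k, target j
            gates.append(("CP", math.pi / 2 ** (k - j), k, j))
    for i in range(n // 2):
        gates.append(("SWAP", i, n - 1 - i))  # reverse the qubit order
    return gates

for n in (3, 10, 50):
    print(n, "qubits ->", len(qft_gates(n)), "gates")
```

The same few lines produce 7 gates for 3 qubits, 60 for 10, and 1,300 for 50, which is exactly why hand-coding circuits is out of the question.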
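The bullets on coherence time and gate execution time imply a simple back-of-the-envelope calculation. This sketch divides coherence time by gate time to get a rough upper bound on how many gates can run one after another. The numbers are purely hypothetical, not those of any real device, and real circuits need a wide safety margin below this bound.

```python
def max_sequential_gates(coherence_time_us: float, gate_time_ns: float) -> int:
    """Rough upper bound on gates executed back to back before coherence is
    lost: coherence time divided by gate execution time (units converted)."""
    coherence_time_ns = coherence_time_us * 1_000
    return int(coherence_time_ns // gate_time_ns)

# Hypothetical devices: fast gates with short coherence vs. slow gates with long coherence.
print(max_sequential_gates(coherence_time_us=100, gate_time_ns=50))             # 2000
print(max_sequential_gates(coherence_time_us=1_000_000, gate_time_ns=100_000))  # 10000
```

The comparison shows why neither number alone is enough: a technology with slower gates can still fit more of them if its coherence time is long enough.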
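The bullets on documenting and matching capability requirements can be sketched as a simple comparison. The fields chosen here (qubit count, nines of fidelity, any-to-any connectivity, and maximum gates per circuit) and all of the numbers are hypothetical illustrations, not a standard schema.

```python
from dataclasses import dataclass

@dataclass
class Capabilities:
    qubits: int        # number of qubits
    nines: float       # qubit fidelity, in nines
    any_to_any: bool   # full qubit connectivity
    max_gates: int     # gates that fit within the coherence time

def unmet_requirements(required: Capabilities, machine: Capabilities) -> list[str]:
    """Return the requirements the machine fails to meet (empty list = OK)."""
    problems = []
    if machine.qubits < required.qubits:
        problems.append("qubits")
    if machine.nines < required.nines:
        problems.append("fidelity")
    if required.any_to_any and not machine.any_to_any:
        problems.append("connectivity")
    if machine.max_gates < required.max_gates:
        problems.append("circuit size")
    return problems

algorithm_needs = Capabilities(qubits=40, nines=3.5, any_to_any=True, max_gates=2000)
device_a = Capabilities(qubits=127, nines=2.5, any_to_any=False, max_gates=1000)
print(unmet_requirements(algorithm_needs, device_a))
# ['fidelity', 'connectivity', 'circuit size']
```

In this made-up case the device has plenty of qubits, yet still cannot run the algorithm, which is the point of documenting more than just qubit counts.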
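The bullet on simulators for quantum computers can be illustrated with a toy state-vector simulator in plain Python. It assumes qubit 0 is the rightmost bit of each basis-state label, supports only the Hadamard and CNOT gates, and is meant to show the idea rather than serve as a real tool. It prepares a two-qubit entangled (Bell) state.

```python
import math

def apply_h(state: list[complex], target: int, n: int) -> list[complex]:
    """Apply a Hadamard gate to qubit `target` of an n-qubit state vector."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(2 ** n):
        if not (i >> target) & 1:  # handle each (bit=0, bit=1) pair once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new

def apply_cnot(state: list[complex], control: int, target: int, n: int) -> list[complex]:
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = state[:]
    for i in range(2 ** n):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

n = 2
state = [0j] * (2 ** n)  # one complex amplitude per product state
state[0] = 1 + 0j        # start in 00
state = apply_h(state, 0, n)
state = apply_cnot(state, 0, 1, n)

# The probability of each outcome is the squared magnitude of its amplitude.
probs = {format(i, f"0{n}b"): round(abs(a) ** 2, 3) for i, a in enumerate(state)}
print(probs)  # {'00': 0.5, '01': 0.0, '10': 0.0, '11': 0.5}
```

The list holds 2^n amplitudes, so every added qubit doubles both the memory and the work, which is why simulators bog down as circuits grow.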
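The bullet on circuit repetitions describes running the same circuit many times and picking the most frequent result. In this sketch, `run_circuit_once` is a hypothetical stand-in for a noisy quantum computer that returns the right answer with each bit independently flipped 10% of the time. The analysis step only counts results.

```python
import random
from collections import Counter

def run_circuit_once(rng: random.Random, correct: str = "101", flip_prob: float = 0.1) -> str:
    """Hypothetical stand-in for one noisy execution of a quantum circuit:
    each bit of the correct answer is flipped with probability flip_prob."""
    return "".join(
        ("1" if bit == "0" else "0") if rng.random() < flip_prob else bit
        for bit in correct
    )

def most_frequent_result(results: list[str]) -> tuple[str, Counter]:
    """Tally the results of all repetitions and return the most frequent one."""
    counts = Counter(results)
    return counts.most_common(1)[0][0], counts

rng = random.Random(42)  # fixed seed so the run is repeatable
shots = [run_circuit_once(rng) for _ in range(1000)]
best, counts = most_frequent_result(shots)
print(best)
print(counts.most_common(3))
```

With these assumptions the right answer, 101, should appear in roughly 73% of runs and dominate the counts. This only raises confidence in the answer; it does not correct the errors within any single run.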