Model for Pre-commercialization Required Before Quantum Computing Is Ready for Commercialization

Quantum computing is a very long way from being ready for commercialization. This informal paper proposes a model of the main areas, main stages, major milestones, and toughest issues, along with a process through which quantum computing must progress before commercialization can begin in earnest. Commercialization can begin once all significant technical uncertainties have been resolved and the technology is ready to be handed off to a product engineering team, which can then develop a marketable and deployable product with minimal technical risk. That product must be ready for the development and production deployment of production-scale, production-quality, practical real-world quantum applications. This paper refers to all of the work in advance of commercialization as pre-commercialization: the work needed before a product engineering team can begin developing a usable product suitable for production deployment with minimal technical risk.

There is a vast jungle, swamp, and desert of research, prototyping, and experimentation in front of us before quantum computing is ready for the final product engineering that leads to commercialization and production deployment of quantum applications that solve production-scale practical real-world problems. This paper will focus more on the big picture than on the myriad fine details.

Pre-commercialization is a vague and ambiguous term, ranging from everything that must occur before a technology is ready to be commercialized, to the final stage to get the technology ready for release for commercial use. This paper will focus on the former, especially research, prototyping, and experimentation, but the ultimate interest is in achieving the latter — commercial use.

For now and the indefinite future, quantum computing remains a mere laboratory curiosity, not yet ready for the development and deployment of production-scale quantum applications to solve practical real-world problems.

In fact, the critical need at this stage is much further and deeper research. Too many questions, uncertainties, and unknowns remain before there will be sufficient research results upon which to base a commercialization strategy.

The essential goal of pre-commercialization is simple:

- Pre-commercialization seeks to eliminate all major technical uncertainties which might impede commercialization, through research, prototyping, and experimentation.

So that commercialization is a more methodical and predictable process:

- Commercialization uses off the shelf technologies and proven research results to engineer low-risk commercial products in a reasonably short period of time.

To be clear, the term product is used in this paper to refer to the universe of quantum computing-related technology, from quantum computers themselves to support software, tools, quantum algorithms, and quantum applications — any technology needed to enable quantum computing. Many vendors may be involved, but this paper still refers to this universe of products as if it collectively were a single product, since all of them need to work together for quantum computing to succeed.

Topics to be covered in this paper:

- This paper is only a proposed model for approaching commercialization of quantum computing

- First things first — much more research is required

- The gaps: Why can’t quantum computing be commercialized right now?

- What effort is needed to fill the gaps

- Quantum advantage is the single greatest technical obstacle to commercialization

- Quantum computing remains a mere laboratory curiosity

- The critical need for quantum computing is much further and deeper research

- What do commercialization and pre-commercialization mean?

- Put most simply, pre-commercialization is research as well as prototyping and experimentation

- Put most simply, commercialization focuses on a product engineering team — commercial product-oriented engineers and software developers rather than scientists

- Pre-commercialization is the Wild West while commercialization is more like urban planning

- Most production-scale application development will occur during commercialization

- Premature commercialization risk

- No great detail on commercialization proper since focus here is on pre-commercialization

- Commercialization can be used in a variety of ways

- Unclear how much research must be completed before commercialization of quantum computing can begin

- What fraction of research must be completed before commercialization of quantum computing?

- Research will always be ongoing and never-ending

- Simplified model of the main stages from pre-commercialization through commercialization

- The heavy lift milestones for commercializing quantum computing

- Short list of the major stages in development and adoption of quantum computing as a new technology

- More detailed list of stages in development of quantum computing as a product

- Stage milestones towards quantum computing as a commercial product

- The benefits and risks of quantum error correction (QEC) and logical qubits

- Three stages of deployment for quantum computing: The ENIAC Moment, configurable packaged quantum solutions, and The FORTRAN Moment

- Highlights of pre-commercialization activities

- Highlights of initial commercialization

- Post-commercialization — after the initial commercialization stage

- Highlights for subsequent stages of commercial deployment, maybe up to ten of them

- Prototyping and experimentation — hardware and software, firmware and algorithms, and applications

- Pilot projects — prototypes

- Proof of concept projects (POC) — prototypes

- Rely primarily on simulation for most prototyping and experimentation

- Primary testing of hardware should focus on functional testing, stress testing, and benchmarking — not prototyping and experimentation

- Prototyping and experimentation should initially focus on simulation of hardware expected in commercialization

- Late in pre-commercialization, prototyping and experimentation can focus on actual hardware — once it meets specs for commercialization

- Prototyping and experimentation on actual hardware earlier in pre-commercialization is problematic and an unproductive distraction

- Products, services, and releases — for both hardware and software

- Products which enable quantum computing vs. products which are enabled by quantum computing

- Potential for commercial viability of quantum-enabling products during pre-commercialization

- Preliminary quantum-enabled products during pre-commercialization

- How much of research results can be used intact vs. need to be discarded and re-focused and re-implemented with a product engineering focus?

- Hardware vs. cloud service

- Prototyping and experimentation — applied research vs. pre-commercialization

- Prototyping products and experimenting with applications

- Prototyping and experimentation as an extension of research

- Trial and error vs. methodical process for prototyping and experimentation

- Alternative meanings of pre-commercialization

- Requirements for commercialization

- Production-scale vs. production-capacity vs. production-quality vs. production-reliability

- Commercialization of research

- Stages for uptake of new technology

- Is quantum computing still a mere laboratory curiosity? Yes!

- When will quantum computing cease being a mere laboratory curiosity? Unknown. Not soon.

- By definition quantum computing will no longer be a mere laboratory curiosity as soon as it has achieved initial commercialization

- Could quantum computing be considered no longer a mere laboratory curiosity as soon as pre-commercialization is complete? Possibly.

- Could quantum computing be considered no longer a mere laboratory curiosity as soon as commercialization is begun? Plausibly.

- Quantum computing will clearly no longer be a mere laboratory curiosity once initial commercialization stage 1.0 has been achieved

- Four overall stages of research

- Next steps — research

- What is product development or productization?

- Technical specifications

- Product release

- When can pre-commercialization begin? Now! We’re doing it now.

- When can commercialization begin? That’s a very different question! Not soon.

- Continually update vision of what quantum computing will look like

- Need to utilize off the shelf technology

- It takes significant time to commercialize research results

- The goal is to turn raw research results into off the shelf technology which can then be used in commercial products

- Will there be a quantum winter? Or even more than one?

- But occasional Quantum Springs and Quantum Summers

- Quantum Falls?

- In truth, it will be a very long and very slow slog

- No predicting the precise flow of progress, with advances and setbacks

- Sorry, but there won’t be any precise roadmap to commercialization of quantum computing

- Existing roadmaps leave a lot to be desired, and prove that we’re in pre-commercialization

- No need to boil the ocean — progression of commercial stages

- The transition from pre-commercialization to commercialization: producing detailed specifications for requirements, architecture, functions and features, and fairly detailed design

- Critical technical gating factors for initial stage of commercialization

- Quantum advantage is the single greatest technical gating factor for commercialization

- Variational methods are a short-term crutch, a distraction, and an absolute dead-end

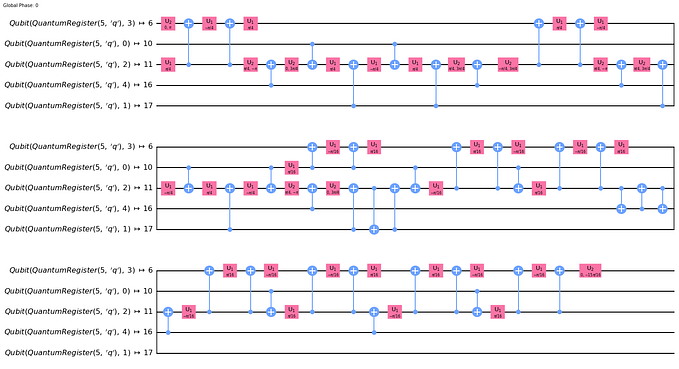

- Exploit quantum Fourier transform (QFT) and quantum phase estimation (QPE) on simulators during pre-commercialization

- Final steps before a product can be released for commercial production deployment

- No further significant research can be required to support the initial commercialization product

- Commercialization requires that the technology be ready for production deployment

- Non-technical gating factors for commercialization

- Quantum computer science

- Quantum software engineering

- No point to commercializing until substantial fractional quantum advantage is reached

- Fractional quantum advantage progress to commercialization

- Qubit capacity progression to commercialization

- Maximum circuit depth progression to commercialization

- Alternative hardware architectures may be needed for more than 64 qubits

- Qubit technology evolution over the course of pre-commercialization, commercialization, and post-commercialization

- Initial commercialization stage 1 — C1.0

- The main criterion for initial commercialization is substantial quantum advantage for a realistic application, AKA The ENIAC Moment

- Differences between validation, testing, and evaluation for pre-commercialization vs. commercialization

- Validation, testing, and evaluation during pre-commercialization

- Validation, testing, and evaluation for initial commercialization stage 1.0

- Initial commercialization stage 1.0 — The ENIAC Moment has arrived

- Initial commercialization stage 1.0 — Routinely achieving substantial quantum advantage

- Initial commercialization stage 1.0 — Achieving substantial quantum advantage every month or two

- Okay, maybe a nontrivial fraction of minimal quantum advantage might be acceptable for the initial stage of commercialization

- Minimum Viable Product (MVP)

- Automatically scalable quantum algorithms

- Configurable packaged quantum solutions

- Shouldn’t quantum error correction (QEC), logical qubits, and The FORTRAN Moment be required for the initial commercialization stage? Yes, but not feasible.

- Should a higher-level programming model be required for the initial commercialization stage? Probably, but may be too much to ask for.

- Not everyone will trust a version 1.0 of any product anyway

- General release

- Criteria for evaluating the success of initial commercialization stage 1.0

- Quantum ecosystem

- Subsequent commercialization stages — Beyond the initial ENIAC Moment

- Post-commercialization efforts

- Milestones in fine phase granularity to support quantum Fourier transform (QFT) and quantum phase estimation (QPE)

- Milestones in quantum parallelism and quantum advantage

- When might commercialization of quantum computing occur?

- Slow, medium, and fast paths to pre-commercialization and initial commercialization

- How long might pre-commercialization take?

- What stage are we at right now? Still early pre-commercialization.

- When might pre-releases and preview releases become available?

- Dependencies

- Some products which enable pre-commercialization may not be relevant to commercialization

- Risky bets: Some great ideas during pre-commercialization may not survive in commercialization

- A chance that all work products from pre-commercialization may have to be discarded to transition to commercialization

- Analogy to transition from ABC, ENIAC, and EDVAC research computers to UNIVAC I and IBM 701 and 650 commercial systems

- Concern about overreach and overdesign — Multics vs. UNIX, OS/2 vs. Windows, IBM System/38, Intel 432, IBM RT PC ROMP vs. PowerPC, Trilogy

- Full treatment of commercialization — a separate paper, eventually

- Beware betaware

- Vaporware: don’t believe it until you see it yourself

- Pre-commercialization is about constant change while commercialization is about stability and carefully controlled and compatible evolution

- Customers and users prefer carefully designed products, not cobbled prototypes

- Customers and users will seek the stability of methodical commercialization, not the chaos of pre-commercialization

- Need for larger capacity, higher performance, more accurate classical quantum simulators

- Hardware infrastructure and services buildout

- Hardware infrastructure and services buildout is not an issue, priority, or worry yet since the focus is on research

- Factors driving hardware infrastructure and services buildout

- Maybe a surge in demand for hardware infrastructure and services late in pre-commercialization

- Expect a surge in demand for hardware infrastructure and services once The ENIAC Moment has been reached

- Development of standards for QA, documentation, and benchmarking

- Business development during pre-commercialization

- Some preliminary commercial business development late in pre-commercialization

- Preliminary commercial business development early in initial commercialization stage

- Deeper commercial business development should wait until after pre-releases late in the initial commercialization stage

- Consortiums for configurable packaged quantum solutions

- Finalizing service level agreements (SLA) should not occur until late in the initial commercialization stage, not during pre-commercialization

- IBM — Still heavily focused on research as well as customers prototyping and experimenting

- Oracle — No hint of prime-time application commercialization

- Amazon — Research and facilitating prototyping and experimentation

- Pre-commercialization is the realm of the lunatic fringe

- Quantum Ready

- Quantum Ready — The criteria and timing will be a fielder’s choice based on needs and interests

- Quantum Ready — Be early, but not too early

- Quantum Ready — Critical technical gating factors

- Quantum Ready — When the ENIAC Moment has been achieved

- Quantum Ready — It’s never too early for The Lunatic Fringe

- Quantum Ready — Light vs. heavy technical talent

- Quantum Ready — For algorithm and application researchers anytime during pre-commercialization is fine, but for simulation only

- Quantum Ready — Caveat: Any work, knowledge, or skill developed during pre-commercialization runs the risk of being obsolete by the time of commercialization

- Quantum Ready — The technology will be constantly changing

- Quantum Ready — Leaders, fast-followers, and laggards

- Quantum Ready — Setting expectations for commercialization

- Quantum Ready — Or maybe people should wait for fault-tolerance?

- Quantum Ready — Near-perfect qubits might be a good time to get ready

- Quantum Ready — Maybe wait for The FORTRAN Moment?

- Quantum Ready — Wait for configurable packaged quantum solutions

- Quantum Ready — Not all staff within the organization have to get Quantum Ready at the same time or pace

- Shor’s algorithm implementation for large public encryption keys? Not soon.

- Quantum true random number generation as an application is beyond the scope of general-purpose quantum computing

- Summary and conclusions

This paper is only a proposed model for approaching commercialization of quantum computing

To be clear, this paper is only a proposed model for approaching commercialization of quantum computing. How reality plays out is anybody’s guess.

That said, significant parts of this paper are describing reality as it exists today and in recent years.

And, significant parts of this paper are simply describing widely accepted prospects for the future of quantum computing.

What may be disputed by some, or not, as the case might be, is my model for pre-commercialization itself: how it divides the work between pre-commercialization and commercialization, as well as my particular parsing and description of specific activities and their sequencing and timing.

First things first — much more research is required

The most important takeaway from this paper is that the top and most urgent priority is much more research.

For detailed areas of quantum computing in which research is needed, see my paper:

- Essential and Urgent Research Areas for Quantum Computing

- https://jackkrupansky.medium.com/essential-and-urgent-research-areas-for-quantum-computing-302172b12176

The gaps: Why can’t quantum computing be commercialized right now?

Even if we had a vast army of 10,000 hardware and software engineers available, these technical obstacles would preclude those engineers from developing a commercial quantum computer which can achieve dramatic quantum advantage for production-scale production-capacity production-quality practical real-world quantum applications:

- Haven’t achieved quantum advantage. No point in commercializing a new computing technology which has no substantial advantage over classical computing.

- Too few qubits.

- Gate error rate is too high. Can’t get correct and reliable results.

- Severely limited qubit connectivity. Need full connectivity or very low error rate for SWAP networks, or some innovative connectivity scheme.

- Very limited coherence time. Severely limits circuit depth.

- Very limited circuit depth. Limited by coherence time.

- Insufficiently fine granularity for phase and probability amplitude. Precludes quantum Fourier transform (QFT) and quantum phase estimation (QPE).

- No support for quantum Fourier transform (QFT) and quantum phase estimation (QPE). Needed for quantum computational chemistry and other applications.

- Measurement error rate is too high.

- Need quantum error correction (QEC) or near-perfect qubits. 99.99% to 99.999% fidelity.

- Need modular quantum processing unit (QPU) designs.

- Need higher-level programming models. Current programming model is too primitive.

- Need for high-level quantum-native programming languages. Currently working at the equivalent of classical assembly language or machine language — individual quantum logic gates.

- Too difficult to transform an application problem statement into a quantum algorithmic application. Need for methodology and automated tools.

- Too few applications are in a form that can readily be implemented on a quantum computer. Need for methodology.

- Lack of a rich library of high-level algorithmic building blocks.

- Need for quantum algorithm and application metaphors, design patterns, frameworks, and libraries.

- Inability to achieve dramatic quantum advantage. Or even a reasonable fraction.

- No real ability to debug complex quantum algorithms and applications.

- Unable to classically simulate more than about 40 qubits.

- No conceptual models or examples of automatically scalable 40-qubit quantum algorithms.

- No conceptual models or examples of realistic and automatically scalable quantum applications.

- No conceptual models or examples of complete and automatically scalable configurable packaged quantum solutions.

- Not ready for production deployment in general. Still a mere laboratory curiosity.

Those are the most critical gating items, all requiring significant research and innovation.
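To make a couple of these gaps more concrete, here is a minimal Python sketch of two back-of-the-envelope estimates. It rests on assumptions that are not from this paper: gate errors are treated as independent, so a circuit succeeds only if every gate succeeds, and the QFT is the textbook construction, whose smallest controlled rotation on n qubits is 2π/2^n. The function names and the 50% success target are purely illustrative.

```python
import math


def circuit_success_probability(gate_fidelity: float, gate_count: int) -> float:
    """Crude estimate: the circuit succeeds only if every gate succeeds,
    with gate errors assumed independent (an assumption, not a measured model)."""
    return gate_fidelity ** gate_count


def max_gates_for_target(gate_fidelity: float, target_success: float) -> int:
    """Largest gate count that keeps the crude estimate at or above the target."""
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


def finest_qft_rotation(qubits: int) -> float:
    """Smallest controlled-phase angle (radians) in a textbook n-qubit QFT."""
    return 2 * math.pi / 2 ** qubits


if __name__ == "__main__":
    # How many gates fit before the estimated success rate falls below 50%?
    for fidelity in (0.99, 0.999, 0.9999, 0.99999):
        gates = max_gates_for_target(fidelity, 0.5)
        print(f"fidelity {fidelity}: roughly {gates} gates")

    # How fine must phase control be for QFT at various widths?
    for n in (10, 20, 30, 40):
        print(f"{n}-qubit QFT: finest rotation {finest_qft_rotation(n):.3e} rad")
```

Under these assumptions, each extra nine of fidelity buys roughly ten times as many gates, which is one way to see why 99.99% to 99.999% fidelity matters, and the finest QFT rotation shrinks by half with every added qubit, which is why phase granularity is listed as a gating item. Real devices have correlated errors and hardware-specific gate sets, so treat the numbers as orders of magnitude only.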

For more on the concept of dramatic quantum advantage, see my paper:

- What Is Dramatic Quantum Advantage?

- https://jackkrupansky.medium.com/what-is-dramatic-quantum-advantage-e21b5ffce48c

For more on the concept of fractional quantum advantage, see my paper:

- Fractional Quantum Advantage — Stepping Stones to Dramatic Quantum Advantage

- https://jackkrupansky.medium.com/fractional-quantum-advantage-stepping-stones-to-dramatic-quantum-advantage-6c8014700c61

What effort is needed to fill the gaps

A brief summary of what’s needed to fill the gaps so that quantum computing can be commercialized:

- Much more research. Enhancing existing technologies. Discovery of new technologies. New architectures. New approaches to deal with technologies. New programming models.

- Much more innovation.

- Much more algorithm effort.

- Much greater reliance on classical quantum simulators. Configured to act as realistic quantum computers, current, near-term, medium-term, and longer-term.

- Robust technical documentation.

- Robust training materials.

- General reliance on open source, transparency, and free access to all technologies.

- Comprehensive examples for algorithms, metaphors, design patterns, frameworks, applications, and configurable packaged quantum solutions.

- Resistance to urges and incentives to prematurely commercialize the technology.

- Many prototypes, much experimentation, and much engineering evolution. Who knows what the right mix is for successful quantum solutions to realistic application problems?
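Greater reliance on classical quantum simulators runs into the limit of about 40 qubits noted in the list of gaps. A rough Python sketch shows where that limit comes from. It assumes a full statevector simulation that stores one double-precision complex amplitude (16 bytes) for each of the 2^n basis states; other simulation techniques have different costs. The function names are hypothetical helpers for illustration.

```python
def statevector_bytes(qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full statevector: 2**n complex amplitudes.
    16 bytes per amplitude assumes two 64-bit floats (real and imaginary)."""
    return (2 ** qubits) * bytes_per_amplitude


def format_bytes(count: int) -> str:
    """Render a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(count)
    for unit in units:
        # Stop at the first unit that keeps the value under 1024,
        # or at the largest unit available.
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


if __name__ == "__main__":
    for n in (30, 40, 50):
        print(f"{n} qubits: {format_bytes(statevector_bytes(n))}")
```

Under this assumption, 30 qubits need 16 GiB, 40 qubits need 16 TiB, and 50 qubits need 16 PiB. Every added qubit doubles the memory, so simulator capacity cannot keep pace with qubit counts by simply adding hardware.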

Quantum advantage is the single greatest technical obstacle to commercialization

The whole point of quantum computing is to offer a dramatic performance advantage over classical computing. There’s no point in commercializing a new computing technology which has no substantial performance advantage over classical computing.

For a general overview of quantum advantage and quantum supremacy, see my paper:

- What Is Quantum Advantage and What Is Quantum Supremacy?

- https://jackkrupansky.medium.com/what-is-quantum-advantage-and-what-is-quantum-supremacy-3e63d7c18f5b

For more detail on dramatic quantum advantage, see my paper:

- What Is Dramatic Quantum Advantage?

- https://jackkrupansky.medium.com/what-is-dramatic-quantum-advantage-e21b5ffce48c

As well as my paper on achieving at least a fraction of dramatic quantum advantage:

- Fractional Quantum Advantage — Stepping Stones to Dramatic Quantum Advantage

- https://jackkrupansky.medium.com/fractional-quantum-advantage-stepping-stones-to-dramatic-quantum-advantage-6c8014700c61

Quantum computing remains a mere laboratory curiosity

For now and the indefinite future, quantum computing remains a mere laboratory curiosity, not yet ready for the development and deployment of production-scale quantum applications to solve practical real-world problems.

For more on laboratory curiosities, read my paper:

- When Will Quantum Computing Advance Beyond Mere Laboratory Curiosity?

- https://jackkrupansky.medium.com/when-will-quantum-computing-advance-beyond-mere-laboratory-curiosity-2e1b88329136

So, when will quantum computing be able to advance beyond mere laboratory curiosity? Once all of the major technology gaps have been adequately addressed. When? Not soon.

The critical need for quantum computing is much further and deeper research

The critical need at this stage is much further and deeper research for all aspects of quantum computing, on all fronts. Too many questions, uncertainties, and unknowns remain before there will be sufficient research results upon which to base a robust and realistic commercialization strategy.

For more on the many areas of quantum computing needing research, read my paper:

- Essential and Urgent Research Areas for Quantum Computing

- https://jackkrupansky.medium.com/essential-and-urgent-research-areas-for-quantum-computing-302172b12176

What do commercialization and pre-commercialization mean?

Commercialization is a vague and ambiguous term, but the essence is a process which culminates in a finished product which is marketed to be used to solve practical, real-world problems for its customers and users.

Pre-commercialization is also a vague and ambiguous term, but the essence is that it is all of the work which must be completed before a serious attempt at commercialization can begin.

Product innovation generally proceeds through three stages:

- Research. Discovery, understanding, and invention of the science and raw technology. Some products require deep research, some do not.

- Prototyping and experimentation. What might a product look like? How might it work? How might people use it? Try out some ideas and see what works and what doesn’t. Some products require a lot of trial and error, some do not.

- Productization. We know what the product should look like. Now build it. Product engineering. Much more methodical, avoiding trial and error.

Pre-commercialization will generally refer to either:

- Research alone. Prototyping and experimentation will be considered part of commercialization. Common view for a research lab.

- Research as well as prototyping and experimentation. Vague and rough ideas need to be finalized before commercialization can begin. Commercialization, productization, product development, and product engineering will all be exact synonyms.

The latter meaning is most appropriate for quantum computing. Quantum computing is still in the early stage of product innovation:

- Much more research is needed. Lots of research.

- No firm vision or plan for the product. What the product will actually look like, feel like, and how it will actually be used. So…

- Much prototyping and experimentation is needed. Lots of it. This will shape and determine the vision and details of the product.

So, for quantum computing:

- Pre-commercialization means research as well as prototyping and experimentation. This will continue until the research advances to the stage where sufficient technology is available to produce a viable product that solves production-scale practical real-world problems. At that point, all significant technical issues will have been resolved, so that commercialization can proceed with minimal technical risk.

- Commercialization means productization after research as well as prototyping and experimentation are complete. Productization means a shift in focus from research to a product engineering team — commercial product-oriented engineers and software developers rather than scientists.

Put most simply, pre-commercialization is research as well as prototyping and experimentation

Raw research results can be impressive, but it can take extensive prototyping and experimentation before the raw results can be transformed into a form that a product engineering team can then turn into a viable commercial product.

Putting the burden of prototyping and experimentation on a product engineering team is quite simply a waste of time, energy, resources, and talent. Prototyping and experimentation on the one hand, and product engineering on the other, are distinct skill sets; expecting one team to perform the tasks of the other is an exercise in futility. Sure, it can be done, much as one can forcefully pound a square peg into a round hole or vice versa, but the results are far from desirable.

Put most simply, commercialization focuses on a product engineering team — commercial product-oriented engineers and software developers rather than scientists

Prototyping and experimentation may not require the same level of theoretical intensity as research, but it is still focused on posing and answering hard technical questions rather than the mechanics of conceptualizing, designing, and developing a commercially-viable product.

But a product engineering team consists of commercial product-oriented engineers and software developers focused on applying all of those answers towards the mechanics of conceptualizing, designing, developing, and deploying a commercially-viable product.

Pre-commercialization is the Wild West while commercialization is more like urban planning

By definition, nobody really knows the answers or the rules or even the questions during pre-commercialization. It has all the rules of a knife fight — there are no rules in a knife fight. It really is like the Wild West, where anything goes.

In contrast, commercialization presumes that all the answers are known and is a very methodical process to get from zero to a complete commercial product. It is just as methodical as urban planning.

Most production-scale application development will occur during commercialization

Although organizations can certainly experiment with quantum computing during pre-commercialization, it generally won’t be possible to develop production-scale applications until commercialization. Too much is likely to change, especially the hardware and details such as qubit fidelity.

The alpha testing stage of the initial commercialization stage is probably the earliest that production-oriented organizations can begin to get serious about quantum computing. Any work products produced during pre-commercialization will likely need to be redesigned and reimplemented for commercialization. Sure, some minor amount of work may be salvageable, but that should be treated as the exception rather than the rule.

The beta testing stage of initial commercialization is probably the best bet for getting really serious about design and development of production-scale quantum algorithms and applications.

Besides, quite a few organizations will prefer to wait for the third or fourth stages of commercialization before diving too deep and making too large a commitment to quantum computing. Betting on the first release of any new product is a very risky proposition, not for the faint of heart.

All of that said, I’m sure that there will be more than a few hardy organizations, led by The Lunatic Fringe of early adopters, who will at least attempt to design and develop production-scale algorithms and applications during pre-commercialization, at least in the later stages, once the technology has matured to a fair degree and technical risk seems at least partially under control. After all, somebody has to be the first, the first to achieve The ENIAC Moment, with the first production-scale practical real-world quantum application. But, such cases will be the exception rather than the rule, for most organizations.

Premature commercialization risk

A key motivation for this paper is to attempt to avoid the incredible technical and business risks that would come from premature commercialization of an immature technology — trying to create a commercial product before the technology is ready, feasible, or economically viable.

Quantum computing has come a long way over several decades and especially in the past few years, but still has a long way to go before it is ready for prime-time production deployment of production-scale practical real-world applications.

So, this paper focuses on pre-commercialization — all of the work that needs to be completed before a product engineering team can even begin serious, low-risk planning and development of a commercial product.

No great detail on commercialization proper since focus here is on pre-commercialization

Commercialization itself is discussed in this paper to some degree, but not as a main focus since the primary intent is to highlight what work should be considered pre-commercialization vs. product engineering for commercialization.

This paper will provide a brief summary of what happens in product development, productization, or commercialization, but only a few high-level details, since the primary focus here is on pre-commercialization: the deep research, prototyping, and experimentation needed to answer all of the hard questions, so that product engineering can focus on engineering rather than research, and on methodical development rather than experimentation and trial and error.

The other reason to cover commercialization at all in this paper is simply to clarify what belongs in commercialization rather than in pre-commercialization, and so to avoid the premature commercialization risk mentioned in the preceding section.

All of that said, the coverage of commercialization in this paper should be sufficient until we get much closer to the end of pre-commercialization and the onset of commercialization is imminent. In fact, consider the coverage of commercialization in this paper to be a draft paper or framework for full coverage of commercialization.

Commercialization can be used in a variety of ways

Technically, commercialization nominally means for profit, for financial gain. Quantum computing will generally be used for financial gain, but it may also be used in government applications, for example, which are not for commercial gain. Even then, the vendor supplying the quantum computers to the government is seeking financial gain, so government use still fits the technical definition. In theory, though, a government could design and build its own quantum computer, with all of the qualities of a vendor-supplied quantum computer but without any intention of financial gain, and that technically would not constitute a commercial deployment per se.

Technically, it might be better if we referred to practical adoption rather than commercialization, but commercialization has so many positive and clear connotations that it’s worth the minimal inaccuracy of special cases such as the proprietary government case just mentioned.

An academic research lab might build and operate a quantum computer for productive application, such as to process data from a research experiment. Once again, there is no direct financial motive, so calling it commercial seems somewhat misleading. But, once again, the focus of this paper is whether the quantum computer has the capabilities and capacity for practical application. The lab-oriented quantum computer will have to meet quite a few of the same requirements as a true commercial product for financial gain. So, once again, I see no great loss in applying the concepts of commercialization to even a lab-only but practical quantum computer.

Another use for the term commercialization is simply the intention to seek practical application for a new technology still in the research lab. The technology may be ready for practical application, but it simply needs to be commercialized.

Another use of the term is the final stage of development of a new product, immediately after validation, but before it is ready to be polished, packaged, and released as a commercial product.

From a practical perspective, I see two elements of the essential meaning of commercialization as used in this paper:

- It is no longer a mere laboratory curiosity.

- It is finally ready for production deployment. Production-scale, production-capacity, production-reliability, production-quality practical real-world applications.

Sure, some applications will not be for commercial gain. That’s okay.

Sure, some quantum computers will be custom developed for the organizations using them, neither acquired as a commercial product of a vendor, nor offered as a commercial product to other organizations. That’s okay too.

Unclear how much research must be completed before commercialization of quantum computing can begin

How much research must be completed before quantum computing is ready to be commercialized? Unfortunately, the simple and honest answer is that we just don’t know.

Technically, the answer is all the research that is needed to support the features, capabilities, capacity, and performance of the initial stage of commercialization and possibly the first few subsequent stages of commercialization. That’s the best, proper answer.

Actually, there are a lot of details of at least the areas or criteria for research which must be completed before commercialization — listed in the section Critical technical gating factors for initial stage of commercialization. There are also non-technical factors, listed in the section Non-technological gating factors for commercialization.

And to be clear, all of those factors are required to be resolved prior to the beginning of commercialization. That’s the whole point of pre-commercialization — resolving all technical obstacles so that product engineering will be a relatively smooth process.

But even as specific as that is, there is still the question of how much of that can actually be completed and whether a more limited, stripped-down vision of quantum computing is the best that can be achieved in the medium-term future.

What fraction of research must be completed before commercialization of quantum computing?

Even that question has an unclear, vague, and indeterminate answer. The only answer that has meaning is that all of the research needed to achieve the technical gating factors listed in the section Critical technical gating factors for initial stage of commercialization must be completed, by definition.

So, 100% of the research underpinning the technical gating factors must be completed before initial commercialization can begin. Granted, there will be ongoing research needed for subsequent commercialization stages.

Research will always be ongoing and never-ending

As already noted, research must continue even after all of the technical answers needed for initial commercialization have been achieved.

Each of the subsequent commercialization stages after the initial commercialization stage will likely require its own research, which must be completed well in advance of the use and deployment of technology dependent on the results of the research.

Simplified model of the main stages from pre-commercialization through commercialization

The simplest model is the best place to start, evolving from raw idea to finished product line:

- Laboratory curiosity. Focus on research. Many questions and technological uncertainties to be resolved.

- The ENIAC moment. Finally achieve production-scale capabilities. But still for elite teams only.

- Initial Commercialization. An actual commercial product. No longer a mere laboratory curiosity.

- The FORTRAN moment. Beginning of widespread adoption. No longer only for elite teams.

- Mature commercialization. Widespread adoption. Methodical development and release of new features, new capabilities, and improvements in performance, capacity, and reliability.

The heavy lift milestones for commercializing quantum computing

Many milestones must be achieved, but these are the really big ones:

- At least a few dramatic breakthroughs. Incremental advances will not be enough.

- Near-perfect qubits. Well beyond noisy. Four or five nines of fidelity.

- High intermediate-scale qubit capacity. 256 to 768 high-fidelity qubits.

- High qubit connectivity. If not full any to any, then at least enough that most algorithms won’t suffer. May (likely) require a more advanced quantum processor architecture.

- Extended qubit coherence and deeper quantum circuits. At least dozens, 100, 250, and preferably 1,000 gates.

- The ENIAC Moment. First credible application.

- Higher-level programming model and high-level programming language.

- Substantial libraries of high-level algorithmic building blocks.

- Substantial metaphors and design patterns.

- Substantial algorithm and application frameworks.

- Error correction and logical qubits.

- The FORTRAN Moment. Non-elite teams can develop quantum applications.

- First configurable packaged quantum solution.

- Significant number of configurable packaged quantum solutions.

- Quantum networking. Entangled quantum state between separate quantum computers.

- The BASIC Moment. Anybody can develop a practical quantum application.
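
To see why near-perfect qubits and deeper quantum circuits go together in the list above, a rough back-of-the-envelope estimate may help. The sketch below assumes, purely for illustration, that every gate fails independently with the same probability, so the chance that a circuit runs with no gate error is the gate fidelity raised to the number of gates. Real hardware also has correlated errors, measurement errors, and decoherence, so treat the numbers as an order-of-magnitude guide only. The function names are my own, not from any library.

```python
def nines_to_fidelity(nines: int) -> float:
    """Convert a count of 'nines' to a fidelity, e.g. 4 nines -> 0.9999."""
    if nines < 1:
        raise ValueError("nines must be at least 1")
    return 1.0 - 10.0 ** (-nines)


def error_free_probability(gate_fidelity: float, gate_count: int) -> float:
    """Rough chance that a circuit executes with no gate error.

    Assumption (illustrative only): gate errors are independent and
    identical, so the probabilities simply multiply.
    """
    if not 0.0 <= gate_fidelity <= 1.0:
        raise ValueError("gate_fidelity must be between 0 and 1")
    if gate_count < 0:
        raise ValueError("gate_count must be non-negative")
    return gate_fidelity ** gate_count


if __name__ == "__main__":
    # Circuit depths taken from the milestone list: 100, 250, and 1,000 gates.
    for nines in (2, 3, 4, 5):
        fidelity = nines_to_fidelity(nines)
        for gates in (100, 250, 1000):
            p = error_free_probability(fidelity, gates)
            print(f"{nines} nines, {gates:>5} gates: {p:.3f}")
```

Under this simplified assumption, four nines of fidelity leaves a 1,000-gate circuit error-free roughly 90% of the time, while two nines leaves it error-free almost never.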

Short list of the major stages in development and adoption of quantum computing as a new technology

- Initial conception.

- Theory fleshed out.

- Rudimentary lab experiments.

- First real working lab quantum computer.

- Initial experimentation.

- Incremental growth of initial capabilities coupled with incremental experimentation, rinse and repeat.

- First hints of usable technology coupled with experimental iteration.

- Strong suggestion that usability is imminent with ongoing experimental iteration.

- The ENIAC moment. Still just a laboratory curiosity. Still not quite ready for commercialization.

- Multiple ENIAC moments.

- Flesh out the technology.

- Enhance the usability of the technology with experimental iteration. Occasional elite deployments.

- The FORTRAN moment. The technology is finally usable and ready for mass adoption.

- Widen applicability and adoption of the technology, iteratively. Production deployments are common.

- Successive generations of the technology. Broaden adoption. Develop standards.

More detailed list of stages in development of quantum computing as a product

Of course the reality will have its own twists and turns, detours, setbacks, and leaps forward, but these items generally will need to occur to get from theory and ideas to a commercial product:

- Initial, tentative research. Focusing on theory.

- More, deeper research. Focusing on laboratory experimentation.

- Even more research. Homing in on solutions and applications.

- Peer-reviewed publication.

- Trial and error.

- Simulation.

- Hardware innovation.

- Hardware evolution.

- Hardware maturity.

- Hardware validation for production use.

- Algorithm support innovation.

- Algorithm design theory innovation.

- Support software and tools conceptualization.

- Support software and tools development. Including testing.

- Technology familiarization.

- Documentation.

- Full technology training.

- Experimental algorithm design.

- Prototyping stage.

- Prototype applications.

- Production prototype algorithm design.

- Production algorithm design.

- QA methodology development.

- QA tooling prototyping.

- QA tooling production design.

- QA tooling production development.

- QA tooling production checkout and validation. Ready for use for validating production applications.

- Training curriculum. Syllabus.

- Marketing literature. White papers. Brochures. Demonstration videos. Podcasts.

- Standards development.

- Standards adoption.

- Standards adherence.

- Standards adherence validation.

- Regulatory approval.

Stage milestones towards quantum computing as a commercial product

Actual stages and milestones will vary, but generally these are many of the major milestones on the path from theory to a commercial product for quantum computing.

- Trial and error.

- Initial milestone. Something that seems to function.

- Evolution. Increasing functions, features, capabilities, capacity, performance, and reliability.

- Further functional milestones. Incremental advances. Occasional leaps.

- Initial belief in usability. “It’s ready!” — we think.

- Feedback from initial trial use. “Okay, but…”

- Further improvements. “This should do it!”

- Rinse and repeat. Possibly 4–10 times — or more.

- Questions about usability. Belief that “Okay, it’s not really ready.”

- A couple more trials and refinements.

- General acceptance that now it actually does appear ready.

- General release.

- 2–10 updates and refinements. Fine tune.

- Finally it’s ready. General belief that the technology actually is ready for production-scale development and deployment.

- Sequence of stages for testing for deployment readiness.

- SLA development. Service level agreement. Actual commitment, with actual penalties.

- Initial production deployment.

- Some possible (likely) hiccups.

- Further refinements based on reality of real-world production deployment.

- Final refinement that leads to successful deployment.

- Rinse and repeat for further production deployments. Further refinements.

- Acceptance of success. General belief that a sufficient number of successful production deployments have been made that production deployment is a low-risk proposition.

- Rinse and repeat for several more production deployments.

- Minimal hiccups and refinements needed.

- We’re there. General conclusion that production deployment is a routine matter.

The benefits and risks of quantum error correction (QEC) and logical qubits

NISQ qubits are too noisy and too problematic for widespread adoption of quantum computing. Sure, elite teams can work around the problems to some extent, but even then it can remain a problematic, expensive, and time-consuming proposition. Quantum error correction (QEC) in principle resolves the difficulty of noisy qubits, providing effectively perfect logical qubits which greatly facilitate quantum algorithm design and development of quantum applications.

Unfortunately, there are difficulties achieving quantum error correction as well. It will happen, eventually, but it’s just going to take time, a lot of time. Maybe two to three years, or maybe five to seven or even ten years.

Short of full quantum error correction and perfect logical qubits, semi-elite teams can probably make do with so-called near-perfect qubits which are not as perfect as error-corrected logical qubits, but close enough for many applications.

For more discussion of quantum error correction and logical qubits, see my paper:

- Preliminary Thoughts on Fault-Tolerant Quantum Computing, Quantum Error Correction, and Logical Qubits

- https://jackkrupansky.medium.com/preliminary-thoughts-on-fault-tolerant-quantum-computing-quantum-error-correction-and-logical-1f9e3f122e71

Three stages of deployment for quantum computing: The ENIAC Moment, configurable packaged quantum solutions, and The FORTRAN Moment

A new technology cannot be deployed until it has been proven to be capable of solving production-scale practical real-world problems.

The first such deployable stage for quantum computing will be The ENIAC Moment, which is the very first time that anyone is able to demonstrate that quantum computing can achieve a substantial fraction of substantial quantum advantage for a production-scale practical real-world problem.

The second deployable stage, beyond additional ENIAC moments for more applications, is the deployment of a configurable packaged quantum solution, which allows a customer to develop a new application without writing any code, simply by configuring a packaged quantum solution for a type of application.

A configurable packaged quantum solution is essentially a generalized quantum application which is parameterized so that it can be customized for a particular situation using only configuration parameters rather than writing code or designing or modifying quantum algorithms directly.
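
As a minimal sketch of what "configuration parameters rather than writing code" might look like, consider the following. Everything here is hypothetical: the class names, the parameters, and especially the qubit sizing rule are invented for illustration and are not taken from any real product. The point is only that the customer supplies a configuration, while the algorithm stays fixed inside the package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SolutionConfig:
    """Hypothetical configuration parameters a customer would supply."""
    problem_size: int        # e.g. number of assets, routes, or sites
    precision_bits: int = 8  # desired precision of the result
    shots: int = 1000        # number of circuit repetitions


class PackagedSolution:
    """Hypothetical packaged solution: the algorithm is fixed by the vendor,
    and only the configuration varies from customer to customer."""

    def __init__(self, name: str, max_qubits: int):
        self.name = name
        self.max_qubits = max_qubits

    def qubits_required(self, config: SolutionConfig) -> int:
        # Illustrative sizing rule only (an assumption, not a real formula).
        return config.problem_size * config.precision_bits

    def plan(self, config: SolutionConfig) -> dict:
        """Validate the configuration and describe the job to be run."""
        if min(config.problem_size, config.precision_bits, config.shots) <= 0:
            raise ValueError("all configuration parameters must be positive")
        qubits = self.qubits_required(config)
        if qubits > self.max_qubits:
            raise ValueError(
                f"{qubits} qubits needed, only {self.max_qubits} available"
            )
        return {"solution": self.name, "qubits": qubits, "shots": config.shots}


if __name__ == "__main__":
    # The customer writes no quantum code, only a configuration.
    solution = PackagedSolution("example-optimizer", max_qubits=256)
    print(solution.plan(SolutionConfig(problem_size=16)))
```

A configuration that asks for more than the packaged solution can deliver is rejected up front, rather than requiring the customer to modify any quantum algorithm.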

The third and major deployable stage is The FORTRAN Moment, enabled in large part by quantum error correction (QEC) and logical qubits, as well as a higher-level programming model and high-level programming language which allow less-elite technical teams to develop quantum applications without the intensity of effort required by ENIAC moments, which require very elite technical teams.

Highlights of pre-commercialization activities

Just to summarize the more high-profile activities of pre-commercialization, before the technology is even ready to consider detailed product planning and methodical product engineering for a commercial product (commercialization):

- Deeper research. Both theoretical and deep pure research, as well as basic and applied research. Plodding along, incrementally and sometimes with big leaps, increasing qubit capacity, reducing error rates, and increasing coherence time to enable production-scale quantum algorithms.

- Support software and tools for researchers. For researchers, not to be confused with what a commercial product for customers and users will need.

- Support software and tools conceptualization. What might be needed in a commercial product for customers and users.

- Support software and tools development. Including testing.

- Initial development of quantum computer science. A new field.

- Initial development of quantum software engineering. A new field.

- Initial development of a quantum software development life cycle (QSDLC) methodology. Similar to SDLC for classical computing, but adapted for quantum computing.

- Initial effort at higher level and higher performance algorithmic building blocks.

- Initial efforts at algorithm and application libraries, metaphors, design patterns, and frameworks.

- Initial efforts at configurable packaged quantum solutions.

- Development of new and higher-level programming models.

- Development of high-level quantum programming languages.

- Develop larger capacity, higher performance, more accurate classical quantum simulators. Enable algorithm designers and application developers to test out ideas without access to quantum hardware, especially for quantum computers expected over the next few years.

- Need for very elite staff. Until the FORTRAN Moment is reached in a post-initial stage of commercialization.

- Need for detailed and accessible technical specifications. Even in pre-commercialization.

- Basic but reasonable quality documentation.

- Basic but reasonable quality tutorials. Freely accessible.

- Some degree of technical training. Freely accessible.

- Initial efforts at development of standards for QA, documentation, and benchmarking.

- Preliminary but serious effort at testing. QA, unit testing, subsystem testing, system testing, performance testing, and benchmarking.

- Need to reach the ENIAC Moment. Something closely resembling a real, production-scale application that solves a practical real-world problem. There’s no point commercializing if this has not been achieved.

- Focus on near-perfect qubits. Good enough for many applications.

- Research in quantum error correction (QEC). Still many technical uncertainties. Much research is required.

- Defer full implementation of quantum error correction until a subsequent commercialization stage. It’s still too hard and too demanding to expect in the initial commercialization stage.

- Focus on methodology and development of scalable algorithms. Minimal function on current NISQ hardware, but automatic expansion of scale as the hardware improves.

- Focus on preview products. Usable to some extent — by elite staff, for evaluation of the technology, but not to be confused with viable commercial products. Not for production use. No SLA.

- No SLA. Service level agreements — contractual commitments and penalties for function, availability, reliability, performance, and capacity. No commercial production deployment prior to initial commercialization stage anyway.

- Minimal hardware infrastructure and services buildout.

- Significant intellectual property creation. Hopefully mainly open source.

- Some degree of intellectual property protection. Hopefully minimal exclusionary patents.

- Some degree of intellectual property licensing.

- Possibly some intellectual property theft.

- Only minimal focus on maintainability. Generally, work produced during pre-commercialization will be rapidly superseded by revisions. The focus is on speed of getting research results and speed of evolution of existing work, not long-term product maintainability.

- End result of pre-commercialization: sufficient detail to produce detailed specifications for requirements, architecture, functions and features, and fairly detailed design to kick off commercialization.

Highlights of initial commercialization

Once pre-commercialization has been completed, a product engineering team can be chartered to develop a deployable commercial product. Just to summarize the more high-profile activities of commercialization:

- Formalize results from pre-commercialization into detailed specifications for requirements, architecture, functions and features, and fairly detailed design to kick off commercialization. Chartering of a product engineering team.

- Focus on a minimum viable product (MVP) for initial commercialization.

- Substantial quantum advantage. 1,000,000X performance advantage over classical solutions. Or, at least a significant fraction, well more than 1,000X. Preferably full dramatic quantum advantage, a one-quadrillion X performance advantage. This can be clarified as pre-commercialization progresses. At this moment we have no visibility.

- Significant advances in qubit technology. Mix of incremental advances and occasional giant leaps, but sufficient to move well beyond noisy NISQ-era qubits to some level of near-perfect qubits, a minimum of four to five nines of qubit fidelity for two-qubit gates and measurement.

- Focus on near-perfect qubits. Good enough for many applications.

- Research in quantum error correction (QEC). Still many technical uncertainties. Much research is required.

- Defer full implementation of quantum error correction until a subsequent commercialization stage. It’s still too hard and too demanding to expect in the initial commercialization stage.

- Serious degree of support software and tools development. Including testing.

- Further development of quantum computer science.

- Further development of quantum software engineering.

- Further development of a quantum software development life cycle (QSDLC) methodology.

- Some degree of higher level and higher performance algorithmic building blocks.

- Some degree of algorithm and application libraries, metaphors, design patterns, and frameworks.

- Some degree of configurable packaged quantum solutions.

- Some degree of example algorithms and applications. That reflect practical, real-world applications. And clearly demonstrate quantum parallelism.

- Development of new and higher-level programming models.

- May or may not include the development of a high-level quantum programming language.

- Continue to expand larger capacity, higher performance, more accurate classical quantum simulators. Enable algorithm designers and application developers to test out ideas without access to quantum hardware, especially for quantum computers expected over the next few years.

- Focus on methodology and development of scalable algorithms. Minimal function on current limited hardware, but automatic expansion of scale as the hardware improves.

- Need for higher quality documentation.

- Need for great tutorials. Freely accessible.

- Serious need for technical training. But still focused primarily on elite technical staff at this stage.

- Serious efforts at development of standards for QA, documentation, and benchmarking.

- Robust efforts at testing. QA, unit testing, subsystem testing, system testing, performance testing, and benchmarking.

- SLA. Service level agreements — contractual commitments and penalties for function, availability, reliability, performance, and capacity. Essential for commercial production deployment.

- Minimal interoperability. More of an aspiration than a reality.

- Sufficient hardware infrastructure and services buildout. To meet initial expected demand. And some expectation for initial growth.

- Increased intellectual property issues.

- Increased focus on maintainability. Work during commercialization needs to be durable and flexible. Reasonable speed to evolve features and capabilities.
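
The quantum advantage thresholds in the list above (well more than 1,000X, 1,000,000X, and one quadrillion X) can be made concrete with a small sketch. The thresholds come from this paper; the function name, the category labels, and the idea of comparing wall-clock run times are my own assumptions for illustration.

```python
# Speedup thresholds as stated in this paper.
FRACTION_THRESHOLD = 1_000                   # "well more than 1,000X"
SUBSTANTIAL_THRESHOLD = 1_000_000            # substantial quantum advantage
DRAMATIC_THRESHOLD = 1_000_000_000_000_000   # one quadrillion: dramatic


def classify_advantage(classical_seconds: float, quantum_seconds: float) -> str:
    """Label a measured speedup using the thresholds above.

    The labels are hypothetical shorthand, not formal terms.
    """
    if classical_seconds <= 0 or quantum_seconds <= 0:
        raise ValueError("run times must be positive")
    speedup = classical_seconds / quantum_seconds
    if speedup >= DRAMATIC_THRESHOLD:
        return "dramatic"
    if speedup >= SUBSTANTIAL_THRESHOLD:
        return "substantial"
    if speedup > FRACTION_THRESHOLD:
        return "fraction of substantial"
    return "insufficient"


if __name__ == "__main__":
    one_year = 365 * 24 * 3600  # seconds in a (non-leap) year
    # A classical run of one year versus a quantum run of 30 seconds.
    print(classify_advantage(one_year, 30))
```

For scale, a classical computation that takes a full year would need to finish in about 30 seconds on a quantum computer to reach the 1,000,000X level.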

Post-commercialization — after the initial commercialization stage

Post-commercialization simply refers to any of the commercialization stages after the initial commercialization stage — also referred to here as subsequent commercialization stages.

Highlights for subsequent stages of commercial deployment, maybe up to ten of them

Achieving the initial commercialization stage will be a real watershed moment, but still too primitive for widespread deployment. A long sequence of subsequent commercialization stages will be required to achieve the full promise of quantum computing. Some highlights:

- Multiple ENIAC Moments.

- Multiple configurable packaged quantum solutions.

- Consortiums for configurable packaged quantum solutions.

- Ongoing focus on methodology and development of scalable algorithms. Limited function on current hardware, but automatic expansion of scale as the hardware improves.

- Dramatic quantum advantage. A one-quadrillion performance advantage over classical solutions. May take a few additional commercialization stages for the hardware to advance.

- Ongoing advances in qubit technology. Mix of incremental advances and occasional giant leaps.

- Maturation of support software and tools development. Including testing.

- Maturation of quantum computer science.

- Maturation of quantum software engineering.

- Maturation of a quantum software development life cycle (QSDLC) methodology.

- Extensive higher level and higher performance algorithmic building blocks.

- Extensive algorithm and application libraries, metaphors, design patterns, and frameworks.

- Extensive configurable packaged quantum solutions. Very common. The common quantum application deployment for most organizations.

- Excellent collection of example algorithms and applications. That reflect practical, real-world applications. And clearly demonstrate quantum parallelism.

- Full exploitation of new and higher-level programming models.

- Development of high-level quantum programming languages.

- Full quantum error correction (QEC) and perfect logical qubits. Will take a few stages beyond the initial commercialization.

- The FORTRAN Moment is reached. Widespread adoption is now possible.

- Training of non-elite staff for widespread adoption. Gradually expand from elite-only technical staff during the initial stage to non-elite technical staff as the product gets easier to use with higher-level algorithmic building blocks and higher-level programming models and languages.

- Increasing interoperability.

- Dramatic hardware infrastructure and services buildout. To meet demand. And anticipate growth.

- Increased intellectual property issues.

- The BASIC Moment is reached. Anyone can develop a practical quantum application.

- Quantum networking.

- Quantum artificial intelligence.

- Universal quantum computer. Merging full classical computing features.

Prototyping and experimentation — hardware and software, firmware and algorithms, and applications

In this paper, prototyping and experimentation include:

- Hardware.

- Firmware.

- Software.

- Algorithms.

- Applications.

- Test data.

So this includes working quantum computers, algorithms, and applications.

Prototypes won’t necessarily include all features and capabilities of a production product, but at least enough to demonstrate and evaluate capabilities and to enable experimentation with algorithms and applications.

Pilot projects — prototypes

A pilot project is generally the same as a prototype, although prototyping is sometimes used at the outset of any project, while a pilot project is usually a single, specific project chosen to prove an overall approach to solving a particular application problem rather than a general approach to all application problems. In any case, references in this paper to prototypes and prototyping apply equally well to pilot projects.

Proof of concept projects (POC) — prototypes

A proof of concept project (POC) is generally the same as a prototype, or even a pilot project. That said, a POC project tends to focus on a very narrow, specific concept (hence the name), whereas a prototype tends to be larger in scale than a POC, and a pilot project tends to be a full-scale project. In any case, references in this paper to prototypes and prototyping apply equally well to proof of concept projects.

Rely primarily on simulation for most prototyping and experimentation

Early hardware can be limited in availability, limited in capacity, error-prone, and lacking in critical features and capabilities. Life is so much easier using a clean simulator for prototyping and experimentation with algorithms and applications — provided that it is configured to match target hardware, primarily what is expected at commercialization, not hardware that is not ready for commercialization.

Primary testing of hardware should focus on functional testing, stress testing, and benchmarking — not prototyping and experimentation

Testing of hardware should be very rigorous and methodical, based on carefully defined specifications. During both pre-commercialization and commercialization.

Refrain from relying on prototyped algorithms and applications and ad hoc experimentation for critical testing of hardware, especially during pre-commercialization when everything is in a state of flux.

Prototyping and experimentation should initially focus on simulation of hardware expected in commercialization

Algorithm designers and application developers should not attempt to prototype or experiment with new hardware until it has been thoroughly tested and confirmed to meet its specifications.

Try to avoid hardware which is not ready for commercialization — focus on simulation at all stages.

Running on real hardware is more of a final test, not a preferred design and development method. If the hardware doesn’t meet its specifications, wait for the hardware to be improved rather than distort the algorithms and applications to fit hardware which simply isn’t ready for commercialization.
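
The rule of thumb above, stay on the simulator until the hardware meets its specifications, can be sketched as a simple check. This is only an illustration: the `HardwareProfile` fields and both function names are hypothetical, and the example specification borrows the 256-qubit, four-nines, 1,000-gate figures from the milestone list earlier in this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareProfile:
    """Hypothetical summary of a machine, either as specified or as measured."""
    qubits: int
    two_qubit_fidelity: float
    max_circuit_depth: int


def unmet_requirements(measured: HardwareProfile, spec: HardwareProfile) -> list[str]:
    """List every way the measured hardware falls short of its specification."""
    shortfalls = []
    if measured.qubits < spec.qubits:
        shortfalls.append("qubit capacity")
    if measured.two_qubit_fidelity < spec.two_qubit_fidelity:
        shortfalls.append("two-qubit fidelity")
    if measured.max_circuit_depth < spec.max_circuit_depth:
        shortfalls.append("circuit depth")
    return shortfalls


def choose_backend(measured: HardwareProfile, spec: HardwareProfile) -> str:
    """Use real hardware only when it meets the spec; otherwise keep simulating."""
    return "simulator" if unmet_requirements(measured, spec) else "hardware"


if __name__ == "__main__":
    spec = HardwareProfile(qubits=256, two_qubit_fidelity=0.9999, max_circuit_depth=1000)
    early = HardwareProfile(qubits=127, two_qubit_fidelity=0.99, max_circuit_depth=100)
    print(choose_backend(early, spec), unmet_requirements(early, spec))
```

The algorithm itself is never changed to fit the early machine; the only decision is where it runs.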

Late in pre-commercialization, prototyping and experimentation can focus on actual hardware — once it meets specs for commercialization

Only in the later stages of pre-commercialization, when the hardware is finally beginning to stabilize and approximate what is expected at commercialization, will it make sense to do any serious prototyping and experimentation directly on real hardware. Even then, it’s still best to develop and test first under simulation. Running on real hardware serves mostly as a test rather than as a method for algorithm design and application development.

Prototyping and experimentation on actual hardware earlier in pre-commercialization is problematic and an unproductive distraction

Working directly with hardware, especially in the earlier stages of pre-commercialization, can be very problematic and more of a distraction than a benefit. Remain focused on simulation.

Worse, attempts to run on early hardware frequently require distortions of algorithms and applications to work with hardware which is not ready for commercialization. Focus on correct results using simulation, and wait for the hardware to catch up with its specifications.

Products, services, and releases — for both hardware and software

This paper treats products and services the same regardless of whether they are hardware or software. And in fact, it treats hardware and software the same — as products or services.

Obviously hardware has to be physically manufactured, but with cloud services for accessing hardware remotely, even hardware looks like just another service to be accessed over the Internet.

Eventually hardware vendors will start selling and shipping quantum computer hardware to data centers and customer facilities.

There are of course time, energy, and resources required to manufacture or assemble a hardware device, but frequently that can be less than the time to design the hardware plus the time to design and develop the software needed to use the hardware device once it is built.

A release simply means making the product or service available and accessible. Both software and hardware, and products and services, can be released — made available for access.

Of course there are many technical differences between hardware and software products, but for the most part they don’t impact most of the analysis of this paper, which treats hardware as just another type of product — or service in the case of remote cloud access.

Products which enable quantum computing vs. products which are enabled by quantum computing

There are really two distinct categories of products covered by this paper:

- Quantum-enabled products. Products which are enabled by quantum computing, such as quantum algorithms, quantum applications, and quantum computers themselves.

- Quantum-enabling products. Products which enable quantum computing, such as software tools, compilers, classical quantum simulators, and support software. They run on classical computers and can be run even if quantum computing hardware is not available. This category also includes classical hardware components and systems, as well as laboratory equipment.

The former are not technically practical until quantum computing has exited from the pre-commercialization stage and entered (or exited) the commercialization stage. They are the main focus of this paper.

The latter can be implemented at any time, even and especially during pre-commercialization. Some may in fact be focused on pre-commercialization, such as lab equipment and classical hardware used in conjunction with quantum lab experiments.

The point is that some quantum-related (quantum-enabling) products can in fact be commercially viable even before quantum computing has entered the commercialization stage, while any products which are enabled by quantum computing must wait until commercialization before they are commercially viable.

Potential for commercial viability of quantum-enabling products during pre-commercialization

Although most quantum-related products, including quantum applications and quantum computers themselves, have no substantive real value until the commercialization stage of quantum computing, a whole range of quantum-enabling products do potentially have very real value, even commercial value, during pre-commercialization and even during early pre-commercialization. These include:

- Quantum software tools.

- Compilers and translators.

- Algorithm analysis tools.

- Support software.

- Classical quantum simulators.

- Hardware components used to build quantum computers.

Organizations involved with research or prototyping and experimentation may be willing to pay not insignificant amounts of money for such quantum-enabling products, even during pre-commercialization.

Preliminary quantum-enabled products during pre-commercialization

Some vendors may in fact offer preliminary quantum-enabled products — or consulting services — during pre-commercialization, strictly for experimentation and evaluation, but with no prospect for commercial use during pre-commercialization. Personally, I would refer to these as preview products, even if offered for financial compensation. These could include algorithms and applications, as well as a variety of quantum-enabling tools as discussed in the preceding section, but again, focused on experimentation and evaluation, not commercial use.

How much of research results can be used intact vs. need to be discarded and re-focused and re-implemented with a product engineering focus?

Research has a different purpose than commercial products. The purpose of research is to get research results: to answer questions, to discover, and to learn things. In contrast, the purpose of a commercial product is to solve production-scale practical real-world problems.

By research work, I mean outright research results as well as any of the prototyping and experimentation covered by pre-commercialization.

Research work needs to be robust, but because it does not need to address all of the concerns of a commercial product, many shortcuts may be taken, and many gaps and shortfalls may exist that make it unsuitable for a commercial product as-is.

Sure, sometimes research work products can in fact be used as-is in a commercial product with no or only minimal rework, but more commonly substantial rework or in fact a complete redesign and reimplementation may be necessary to address the needs of a commercial product.

In any case, be prepared for substantial rework or outright, from-scratch redesign and reimplementation. If less effort is required, consider yourself lucky.

The whole point of research and pre-commercialization in general is to answer hard questions and eliminate technological risk. Generally, the distinct professional skills and expertise of a product engineering team will be required to produce a commercial-grade product.

Some of the factors which may not be addressed by any particular work performed in research or the rest of pre-commercialization include:

- Features.

- Functions.

- Capabilities.

- Performance.

- Capacity.

- Reliability.

- Error handling.

- QA testing.

- Ease of use.

- Maintainability.

- Interoperability.

I wouldn’t burden the research staff or advanced development staff with the need to worry about or focus on any of those factors. Leave them to the product engineering team; they live for this stuff!

That said, some of those factors do overlap with research questions, but usually in different ways.

In any case, let the research, prototyping, and experimentation staff focus on what they do best, and not get bogged down in the extra level of burden of product engineering.

Hardware vs. cloud service

Although there are dramatic differences between operating a computer system for the sole benefit of the owning organization and operating a computer system as a cloud service for customers outside of the owning organization, they are roughly comparable from the perspective of this paper — they are interchangeable and reasonably transparent from the perspective of a quantum algorithm or a quantum application.

A quantum algorithm or quantum application will look almost exactly the same regardless of where execution occurs.

The economics and business planning will differ between the two.

The relative merits of the two approaches are beyond the scope of this paper.

From the perspective of hardware infrastructure and services buildout, computers are computers regardless of who owns and maintains them.

Prototyping and experimentation — applied research vs. pre-commercialization

When technical staff prototype products and experiment with them, should that be considered applied research or part of commercialization and productization? To some extent it’s a fielder’s choice, but for the purposes of this paper it is considered pre-commercialization, a stage between research and commercialization.

From a practical perspective, it’s a question of whose technical staff are doing the work. If the research staff are doing the work, then it’s more clearly applied research. But if engineering staff or a product incubation team does the work, then it’s not really applied research per se, since researchers are not doing the work.

To be more technically accurate, the way the work is carried out matters as well. If it’s methodical experiments with careful analysis, it could be seen as research, but if it’s trial and error and based on opinion, intuition, and input from sales, marketing, users, product management, and product engineering staff, then it wouldn’t be mistaken for true applied research.

At the end of the day, and from the perspective of commercialization, prototyping and experimentation can be seen as more closely aligned with applied research than with commercialization.

Still, it’s clearly in the middle, which is part of the motivation for referring to it as pre-commercialization — work to be completed as an extension of research before true commercialization can properly begin.

Prototyping products and experimenting with applications

Although prototyping and experimentation can be viewed together, as if they were conjoined twins joined at the hip, they are somewhat distinct:

- Products are prototyped.

- Applications are experimented with.

Of course, some may view the distinction differently, but this is how I am using it here in this paper.

Prototyping and experimentation as an extension of research

It’s quite reasonable to consider prototyping (of products) and experimentation (with applications) as an extension of research. After all, they are intermediate results, designed to generate feedback, rather than intended to become actual products and applications in a commercial sense.

That said, calling research, prototyping, and experimentation pre-commercialization makes it clearer that they are separate from commercialization and must be completed before proper commercialization can begin.

Trial and error vs. methodical process for prototyping and experimentation

Research programs tend to be much more methodical than prototyping and experimentation, which tend to be more trial and error, based on opinion, intuition, and input from sales, marketing, users, product management, and product engineering staff.

Alternative meanings of pre-commercialization

I considered a variety of possible interpretations for pre-commercialization. In the final analysis, it’s a somewhat arbitrary distinction, so I can understand if others might choose one of the alternative interpretations:

- Research alone. Anything after research is commercialization.

- Final stage just before commercialization, culminates in commercialization. Product engineering and development complete. Internal testing complete. Documentation complete. Training development complete. Ready for alpha and beta testing.

- Everything before production product engineering. Preliminary lab research — demonstrate basic capability. Full-scale research. Prototype products, evolution. Development of product requirements. Final prototype product. Essentially what I settled on — research and prototyping and experimentation, with the intention of answering all the critical technical questions, ready to hand off to the product engineering team.

- Everything before a preliminary vision of what a production product would look like. Lab experimentation. Iterative research, searching for the right technology capable of satisfying production needs. Iterative development of production requirements. Finally have a sense of what a functional production product would look like — and how to get there within a relatively small number of years. Iterate until final production requirement specification ready — and the research to support it.

- Everything after all research for the initial production product is complete. Sense that no further research is needed for initial production product release.

- Everything after The ENIAC Moment. But then what nomenclature should be used for the stages before The ENIAC Moment?

- Everything until The ENIAC Moment is behind us. A good runner-up candidate for pre-commercialization.

Requirements for commercialization

Before a product or technology idea can be commercialized, several criteria must be met:

- Research must be complete. Not necessarily all research for all of time, but sufficient research to design and build the initial product and a few stages after that. Enough research to eliminate all major scientific and technological uncertainties for the next few stages of a product or product line.

- Vague or rough product ideas must be clarified or rejected. A clear vision of the product is needed.

- A product plan must be developed. What must the product do and how will it do it?

- Budgeting and pricing. How much will it cost to design and develop the product? How large a team will be required, for how long, and how much will they be paid? How much equipment and space will be needed and how much will it cost? How much will it cost to build each unit of the product? How much will the product be sold for? How much will it cost to support, maintain, and service the product? How much of that cost can be recouped from recurring revenue as opposed to factoring it into the unit pricing?

Once those essentials are known and in place, product development can occur — the product engineering team can get to work.
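The budgeting and pricing questions above reduce to simple arithmetic once estimates exist. The sketch below is one hypothetical way to frame that arithmetic. The class name, field names, and break-even formula are illustrative assumptions rather than a model proposed by this paper, and every figure in the example is made up.

```python
import math
from dataclasses import dataclass

@dataclass
class ProductBudget:
    """Hypothetical cost and pricing estimates for a single product."""
    development_cost: float                    # one-time cost to design and develop
    unit_build_cost: float                     # cost to build each unit
    unit_price: float                          # price each unit is sold for
    annual_support_cost_per_unit: float        # support, maintenance, and service
    annual_recurring_revenue_per_unit: float   # e.g. a service contract

    def unrecovered_support_cost(self, years):
        """Support cost per unit not recouped from recurring revenue over the period."""
        shortfall = (self.annual_support_cost_per_unit
                     - self.annual_recurring_revenue_per_unit)
        return max(0.0, shortfall) * years

    def margin_per_unit(self, years):
        """Unit price minus build cost and any support cost folded into the price."""
        return (self.unit_price
                - self.unit_build_cost
                - self.unrecovered_support_cost(years))

    def break_even_units(self, years):
        """Units that must be sold to recover development cost, or None if impossible."""
        margin = self.margin_per_unit(years)
        if margin <= 0:
            return None
        return math.ceil(self.development_cost / margin)

if __name__ == "__main__":
    # All numbers are invented purely for illustration.
    budget = ProductBudget(
        development_cost=10_000_000,
        unit_build_cost=400_000,
        unit_price=1_000_000,
        annual_support_cost_per_unit=150_000,
        annual_recurring_revenue_per_unit=100_000,
    )
    print("Margin per unit over 4 years:", budget.margin_per_unit(years=4))
    print("Break-even units:", budget.break_even_units(years=4))
```

The design choice worth noting is that support cost is split into the part covered by recurring revenue and the part that must be factored into the unit price, which mirrors the last budgeting question in the list.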

Production-scale vs. production-capacity vs. production-quality vs. production-reliability

The focus of a commercial product is production deployment, also known as operating the product or technology at scale.

There are four related terms:

- Production-scale. Capable of addressing large-scale practical real-world problems. The combination of input capacity, processing capacity, and output capacity.

- Production-capacity. Focusing specifically on the size of data to be handled.