Five Major Use Cases for a Classical Quantum Simulator

There is more than one reason to simulate execution of a quantum circuit on a classical computer. This informal paper proposes a model of five distinct major use cases and a variety of access patterns for a classical quantum simulator. The goal is to complement real quantum computers to enable a much more productive path to effective quantum computing.

First, here’s a brief summary of the kinds of use cases and access patterns for simulation of quantum circuits on classical computers:

- Simulating simply because a real quantum computer is not yet available, or because available real quantum computers are insufficient to meet your computing requirements, such as number of qubits, qubit fidelity, and connectivity.

- Simulating an ideal quantum computer, with no noise and no errors. Primarily for research, experimentation, and education, as well as for testing and debugging logic errors.

- Determining what the results of a quantum circuit should be — without any of the noise of a real quantum computer.

- Simulating an actual, real quantum computer, matching its noise and error rate. Replicate the behavior of the real machine as closely as possible.

- Simulating a proposed quantum computer, matching its expected noise and error rate. Evaluate proposed work before it is done — simulate before you build.

- Simulating proposed enhancements to an existing quantum computer, matching its expected noise and error rate. Evaluate proposed work before it is done — simulate before you build.

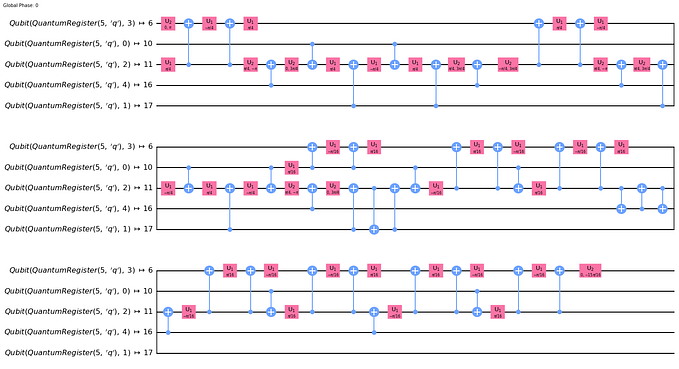

- Debugging a quantum circuit, such as examining all details of quantum state which are not observable on a real quantum computer. For those who are not perfect and are unable to write bug-free algorithms and circuits from the start.

- Analyzing the execution of a quantum circuit, since far too much of its behavior cannot easily be predicted in advance.

- Benchmarking a real quantum computer, comparing actual results with simulated results. Such as for IBM’s Quantum Volume metric.

- Repeated runs with different noise and error rates to determine what noise and error rates must be achieved for a desired fraction of runs to produce the expected results. What advances in hardware are needed to run a particular quantum circuit?

- And more.

Some of these usages qualify as major use cases, while others make more sense as access patterns which apply across many of the major use cases, such as debugging and analysis. The major use cases and access patterns will be elaborated in subsequent sections of this paper.

But here’s a terse summary of the five major use cases for the lazy reader:

- Perfect simulation. No noise and no errors. Good for research and testing and debugging of logic errors.

- Theoretical ideal real simulation. Not quite perfect since there are theoretical limits from quantum mechanics.

- Match an existing real quantum computer. Very closely match the error and limit profile for a particular real quantum computer — so simulation results closely match the real machine.

- Match a proposed real quantum computer. Tune noise and error profile for proposed enhancements to evaluate how effective they will be — simulate before you build.

- Near-perfect qubits. Evaluate algorithms based on varying levels (nines) of qubit fidelity.

It is not the purpose of this informal paper to dive deeply into the details of simulation of quantum circuits in general but simply to highlight the need for the five distinct major use cases for classical quantum simulators.

Topics covered in this informal paper:

- Most users should be focused on accurate simulators rather than current real quantum computers.

- Is quantum simulation the same as quantum simulator?

- The future of classical quantum simulators even if not the present.

- Why use a classical quantum simulator?

- Overarching goal: Focus on where the “puck” will be in 2–4 years rather than current hardware which will be hopelessly obsolete by then.

- Quantum algorithms and quantum circuits are rough synonyms.

- Target quantum computer.

- Profile for a target quantum computer.

- Personas, use cases, and access patterns.

- The five major use cases.

- Access patterns.

- Personas for simulation of quantum circuits.

- Users.

- Major use case #1: Perfect simulation.

- Major use case #2: Theoretical ideal real simulation.

- Major use case #3: Match an existing real quantum computer.

- Major use case #4: Match a proposed real quantum computer.

- Major use case #5: Near-perfect qubits.

- Determining what the results of a quantum circuit should be — without any of the noise of a real quantum computer.

- Quantum error correction (QEC).

- Would a separate use case be needed for quantum error correction?

- Will quantum error correction really be perfect?

- Should debugging be a major use case?

- Generally, the use cases revolve around criteria for configuring noise and error rates.

- Simulate before you build.

- Why simulate if real hardware is available?

- Noise models.

- Per-qubit noise and error model.

- Noise models analogous to classical device drivers.

- Most users should not create, edit, look at, or even be aware of the details of noise models.

- High-level control of noise models.

- Selecting nines of qubit fidelity for noise models.

- Viewing effective nines of qubit fidelity.

- Inventory of known quantum computers.

- Parameters and properties for use cases and access patterns.

- Precision in simulation.

- Precision in unitary matrices.

- Granularity of phase and probability amplitude.

- Limitations of simulation of quantum circuits.

- Simulation details.

- Debugging features.

- Use of perfect simulation to test for and debug logic errors.

- Rerun a quantum circuit with the exact same noise and errors to test a bug fix.

- Checking of unitary matrix entries.

- Need for blob storage for quantum states.

- What to do about shot counts for large simulations.

- Resources required for simulation of deep quantum circuits.

- Is there a practical limit to the depth of quantum circuits?

- Will simulation of Shor’s factoring algorithm be practical?

- Alternative simulation modes.

- Pulse control simulation.

- Major caveat: Simulation is limited to roughly 50 qubits.

- Need for scalable algorithms.

- Need for automated algorithm analysis tools.

- Need to push for simulation of 45 to 55 qubits.

- Would simulation of 60 to 65 qubits be useful if it could be achieved?

- Potential to estimate Quantum Volume without the need to run on a real quantum computer.

- Simulation is an evolving, moving target, subject to change — this is simply an initial snapshot.

- Summary and conclusions.

Most users should be focused on accurate simulators rather than current real quantum computers

Current quantum computers are neither useful nor indicative of the kinds of quantum computers that will be used five, seven, or ten years from now, especially in terms of qubit fidelity and the ability to achieve dramatic quantum advantage. It therefore makes little sense for users to focus on algorithms and applications that either won’t work at all on current machines or are not representative of what will work some years from now. This argues for placing much greater emphasis on using simulators today rather than current real hardware.

The impetus for this paper is to suggest improvements to how classical quantum simulators are conceptualized, packaged, and used. The aim is to encourage more productive behavior by those seeking a head start on exploiting the future world of quantum computing, rather than the current reality of real quantum computers.

That said, a secondary impetus is to support the development and testing (e.g., debugging) of algorithms that will in fact run on current quantum computing hardware. Even then, the emphasis remains on getting more people to use high-quality simulators as the starting point and primary development environment. In that model, eventually running an algorithm on a real quantum computer is a mere validation step, confirming that the real hardware matches the simulation fairly closely.

Is quantum simulation the same as quantum simulator?

Unfortunately, no, the terms quantum simulation and quantum simulator have very different meanings, although some people may mistakenly use them as synonyms.

The first term refers to using a quantum computer to simulate quantum physics as an application. That’s what Feynman envisioned using a quantum computer for — simulating quantum mechanics at the subatomic level.

The second term refers to using a classical computer to simulate a quantum computer, that is, simulating the execution of a quantum circuit on a classical computer.

The latter is the intended topic of this paper.

To attempt to avoid the confusion, I use the longer term classical quantum simulator to emphasize the distinction, although it is redundant.

The future of classical quantum simulators even if not the present

This paper is not limited to describing only existing classical quantum simulators per se, but attempts to elaborate a proposed model for how they should operate and be used in the future.

The proposed five major use cases and accompanying access patterns are valid and relevant, regardless of whether they are actually currently implemented.

Why use a classical quantum simulator?

Before delving into abstract use cases, it is worth noting what tasks users are trying to accomplish by using a classical quantum simulator:

- Simulate quantum circuit execution when no real quantum computer hardware is yet available.

- Simulate quantum circuit execution when no real quantum computer hardware is available with sufficient resources — not enough qubits, qubit coherence time is too short for required circuit depth, qubit fidelity is too low, or qubit connectivity is insufficient.

- Test a quantum algorithm on an imaginary, hypothetical ideal quantum computer as part of a research project.

- Simulate an existing real quantum computer as accurately as possible.

- Simulate a proposed change to an existing quantum computer.

- Simulate a proposed new quantum computer.

- Evaluate what changes to existing quantum computers would be needed to enable particular algorithms to return more acceptable results.

- Debug a quantum algorithm (quantum circuit).

- Single-step through a quantum circuit.

- Examine the full quantum state of result qubits just before they are measured for actual results.

- Simulate a quantum circuit when measuring Quantum Volume for a real quantum computer.

- Develop baseline test results for comparison against results from a real machine.

- Develop, test, and evaluate a noise model to be used for future simulator runs.

Some of these tasks will directly translate into use cases, while others will be particular access patterns for one or more use cases.

Overarching goal: Focus on where the “puck” will be in 2–4 years rather than current hardware which will be hopelessly obsolete by then

Quantum computing hardware is currently very limited and evolving rapidly. Current hardware is likely to be hopelessly obsolete within two to four years, if not sooner. It is also not capable of supporting production-scale practical applications, let alone achieving any significant and dramatic quantum advantage. In my view, it is therefore highly advantageous for quantum algorithm designers and quantum application developers to place much more emphasis on designing, developing, and testing algorithms and applications using simulators for future hardware, rather than investing any significant effort in current quantum hardware.

Using real quantum computers may produce a certain sense of satisfaction, but it’s really an illusion since the results are not dramatically better than can be achieved using classical computers.

Better to focus on algorithm design which will be able to hit the ground running in two to four years as the hardware inevitably progresses.

Quantum algorithms and quantum circuits are rough synonyms

Technically, quantum algorithms and quantum circuits are not exactly the same thing, but for the purposes of this paper they can be treated as synonyms.

A quantum algorithm is more of a specification of logic, while a quantum circuit is more of an implementation of that logic.

A quantum algorithm is more abstract and idealized, while a quantum circuit is concrete and particularized to the specific hardware which it must run on.

Target quantum computer

The term target quantum computer indicates the quantum computer which is being simulated or to be simulated. It is the quantum computer upon which a quantum algorithm or quantum application is intended to run.

It may be one of:

- An actual, real quantum computer.

- A hypothetical or proposed quantum computer.

- An ideal quantum computer.

A classical quantum simulator itself is not a target quantum computer per se. The target quantum computer is the (real or imagined) quantum computer which is to be simulated.

Profile for a target quantum computer

The term profile for a target quantum computer refers to a relatively complete description of a target quantum computer. At a minimum, this would include:

- Vendor name. Full name.

- Vendor handle. Short name for the vendor.

- Vendor quantum computer family name or other identifier.

- Vendor model number or other identification.

- Serial number for a particular instance of some model of quantum computer.

- Number of qubits.

- Noise model.

- Actual or expected overall qubit fidelity. Can include nines of qubit fidelity, qubit coherence time, gate error rate, and measurement error rate.

- Fidelity for individual qubits, when that level of detail is available.

- Qubit connectivity. Which qubits may participate in a two-qubit quantum logic gate.

- Precision of unitary matrix entries supported.

- Granularity of phase supported.

- Granularity of probability amplitudes supported.

- Coherence time and a sense of maximum circuit depth. The simulator should be able to report to the user when their quantum circuit exceeds the maximum circuit depth. There could be two thresholds: one to issue a warning diagnostic, and another to abort the simulation. Otherwise, users may struggle to figure out exactly why their circuit results are so unexpected.
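A profile like this could be captured as a simple data structure. Below is a minimal Python sketch under stated assumptions. The class `TargetProfile`, its field names, the helpers `circuit_depth` and `check_circuit`, and the vendor "Example Quantum Inc." are all hypothetical, not part of any existing simulator. Gate depth stands in as a rough proxy for coherence time. The sketch checks qubit range and connectivity, flags rotation angles finer than the supported phase granularity, and applies the two-threshold depth check described above.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TargetProfile:
    """Hypothetical profile of a target quantum computer; field names are illustrative."""
    vendor_name: str
    vendor_handle: str
    family: str
    model: str
    serial_number: str
    num_qubits: int
    gate_error_rate: float                                  # overall average error per gate
    per_qubit_error: dict = field(default_factory=dict)     # qubit -> error rate, when known
    connectivity: set = field(default_factory=set)          # allowed pairs (a, b) with a < b
    phase_granularity: float = 0.0                          # smallest rotation step (radians); 0 = continuous
    warn_depth: int = 100                                   # depth that triggers a warning
    abort_depth: int = 200                                  # depth that aborts the simulation


def circuit_depth(gates):
    """Number of layers, where each gate waits for every earlier gate on its qubits."""
    level, depth = {}, 0
    for _name, qubits, _angle in gates:
        d = max((level.get(q, 0) for q in qubits), default=0) + 1
        for q in qubits:
            level[q] = d
        depth = max(depth, d)
    return depth


def check_circuit(profile, gates):
    """Check gates, given as (name, qubits, angle) tuples, against a profile.

    Returns (severity, messages), where severity is "ok", "warn", or "abort".
    """
    messages, severity = [], "ok"

    def report(level, text):
        nonlocal severity
        messages.append(f"{level}: {text}")
        if level == "abort" or severity == "ok":   # never downgrade an abort
            severity = level

    for name, qubits, angle in gates:
        if any(not 0 <= q < profile.num_qubits for q in qubits):
            report("abort", f"{name} on {qubits}: qubit index out of range")
        elif len(qubits) == 2 and tuple(sorted(qubits)) not in profile.connectivity:
            report("abort", f"{name} on {qubits}: qubits are not connected")
        if angle is not None and profile.phase_granularity > 0:
            steps = angle / profile.phase_granularity
            if not math.isclose(steps, round(steps), abs_tol=1e-9):
                report("warn", f"{name} angle {angle} is finer than the supported granularity")

    depth = circuit_depth(gates)
    if depth >= profile.abort_depth:
        report("abort", f"depth {depth} reaches the abort threshold {profile.abort_depth}")
    elif depth >= profile.warn_depth:
        report("warn", f"depth {depth} reaches the warning threshold {profile.warn_depth}")
    return severity, messages


# Hypothetical 3-qubit machine with linear connectivity 0-1-2.
example = TargetProfile("Example Quantum Inc.", "exq", "Alpha", "A1", "0001",
                        num_qubits=3, gate_error_rate=0.005,
                        connectivity={(0, 1), (1, 2)},
                        phase_granularity=math.pi / 64, warn_depth=4, abort_depth=8)
circuit = [("h", (0,), None), ("cx", (0, 1), None), ("cx", (1, 2), None),
           ("rz", (2,), math.pi / 64), ("cx", (0, 2), None)]
severity, messages = check_circuit(example, circuit)
print(severity, messages)
```

Here the final `cx` between qubits 0 and 2 is rejected because those qubits are not connected. The circuit's depth also trips the warning threshold. Returning diagnostics rather than raising immediately lets a user see every problem in one pass.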

Personas, use cases, and access patterns

When looking at how a system is used, it is useful to look at three things:

- Personas. Who is using it? What is their role or function in the organization? Also known as an archetype.

- Use cases. What goals or purposes are they trying to achieve?

- Access patterns. What specific tasks are they performing in order to work towards and achieve those goals?

This paper focuses on use cases, but it also mentions the personas who might pursue those use cases, as well as the access patterns those personas might employ for them.

The five major use cases

- Perfect simulation. Ideal, perfect, or even naive simulation. Noise-free and error-free. No limits and maximum precision. Great for research in ideal algorithms, but does not reflect any real machine. The baseline, starting point for all other use cases. Also useful for verifying results of a real machine, such as Quantum Volume metric calculation and cross-entropy benchmarking. Also useful for testing and debugging for logic errors.

- Theoretical ideal real simulation. Not quite perfect since there are theoretical limits from quantum mechanics. There’s no clarity as to the precise limits at this time. It’s possible that theoretical limits may vary for different qubit technologies, such as trapped ions and superconducting transmon qubits.

- Match an existing real quantum computer. Very closely match the noise, error, and limit profile for a particular real quantum computer, so that simulation results closely match the real machine. Since real machines are relatively few, a very large number of simulations could be run on far more plentiful classical machines. Great for evaluating algorithms intended to run on a particular real quantum computer. Should include a per-qubit noise profile, since current real machines are problematic enough that noise and errors vary from qubit to qubit.

- Match a proposed real quantum computer. Tune noise and error profile for proposed enhancements to evaluate how effective they will be — exactly match proposed evolution for new machines — simulate before you build. This may be a relatively minor adaptation of an existing real quantum computer, significant improvements to an existing real quantum computer, or it may be for some entirely new and possibly radically distinct quantum computer.

- Near-perfect qubits. Based on theoretical ideal real simulation, but configured for a chosen number of nines of qubit fidelity. Great for evaluating perfect algorithms at varying levels of qubit fidelity.
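Since the five use cases differ mainly in how noise and error rates are configured, one way to picture them is as a single configuration switch. The Python sketch below is hypothetical throughout: `UseCase`, `noise_config`, and its parameters are invented for illustration. It assumes a per-gate error rate of 10^-nines for whole numbers of nines. Because the true theoretical limits are not known, the theoretical ideal case requires the caller to supply an assumed error floor.

```python
from enum import Enum


class UseCase(Enum):
    PERFECT = 1
    THEORETICAL_IDEAL = 2
    MATCH_EXISTING = 3
    MATCH_PROPOSED = 4
    NEAR_PERFECT = 5


def noise_config(use_case, *, nines=None, error_floor=None, per_qubit=None):
    """Return a hypothetical noise configuration {"gate_error", "per_qubit"} for a use case.

    nines       -- whole number of nines of qubit fidelity (near-perfect only)
    error_floor -- assumed theoretical minimum error rate (the real limit is not known)
    per_qubit   -- qubit -> error rate, measured (existing machine) or expected (proposed)
    """
    if use_case is UseCase.PERFECT:
        return {"gate_error": 0.0, "per_qubit": None}              # no noise, no errors
    if use_case is UseCase.THEORETICAL_IDEAL:
        if error_floor is None:
            raise ValueError("an assumed error_floor is required")
        return {"gate_error": error_floor, "per_qubit": None}
    if use_case is UseCase.NEAR_PERFECT:
        if nines is None or nines != int(nines):
            raise ValueError("a whole number of nines is required in this sketch")
        # Builds on the theoretical ideal: never better than the assumed floor.
        return {"gate_error": max(10.0 ** -nines, error_floor or 0.0), "per_qubit": None}
    if use_case in (UseCase.MATCH_EXISTING, UseCase.MATCH_PROPOSED):
        if not per_qubit:
            raise ValueError("a per-qubit error profile is required")
        average = sum(per_qubit.values()) / len(per_qubit)
        return {"gate_error": average, "per_qubit": dict(per_qubit)}
    raise ValueError(f"unknown use case: {use_case}")


print(noise_config(UseCase.NEAR_PERFECT, nines=3))
print(noise_config(UseCase.MATCH_EXISTING, per_qubit={0: 0.01, 1: 0.03}))
```

The two "match" cases share one code path. They differ only in where the per-qubit numbers come from: measured calibration data for an existing machine, or design targets for a proposed one.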

As indicated, each use case has its own unique goals and purposes.

Access patterns

While the use cases define the function of the simulation, there are multiple access patterns for accessing those use cases. The primary access pattern is of course normal circuit execution. Other access patterns include debugging and various testing and analysis scenarios.

Some access patterns apply to all use cases, while other access patterns may only apply to some of the use cases.

The access patterns:

- Normal circuit execution. The normal, typical usage, to simply execute the circuit and retrieve the results of measured qubits.

- Debugging. Logging all intermediate results and quantum states for later examination. Single-step to examine quantum state at each step of the algorithm. Range of classical debugging techniques, but applied to quantum circuits. Useful for all of the main use cases.

- Audit trail. Capture all quantum state transitions. For external analysis. For larger circuits, it might be desirable or even necessary to specify a subset of qubits and a subset of gates to reduce the volume of state transitions captured. Blob storage may be necessary to accommodate the sheer volume of quantum states.

- Rerun a quantum circuit with the exact same noise and errors to test a bug fix. Some circuit failures may be due to logic bugs that only show up for particular combinations of noise and errors.

- Shot count. Circuit repetitions to develop the expected value of the probabilistic result using statistical aggregation of the results from the repeated executions of the circuit.

- Snapshot and restart. For very large simulations, periodically capture (snapshot) enough of the quantum state that the simulation can later be restarted right where it left off.

- Assertions and unit testing. Check quantum state and probabilities at various steps in the circuit during execution, and just before final result measurement as well.

- Find maximum error rate. Specify desired results and run an algorithm repeatedly with varying error rates to find the minimum number of nines of qubit fidelity needed to get correct results a designated percentage of the time. Gives an idea of how practical an algorithm is. Or, what qubit fidelity must be achieved to enable various applications. Or, given a roadmap, at what stage various applications will be enabled. Useful for evaluating ideal algorithms or proposed changes to a real machine.

- Report error rate trend. Run an algorithm repeatedly at increasing levels of qubit fidelity and report the percentage of correct results at each level. Used to evaluate the tradeoff between error rate and correctness of results, since the qubit fidelity needed for more correct results might be too difficult or time-consuming to achieve. Useful for evaluating ideal algorithms or proposed changes to a real machine.

- Calibrate shot count. Experiment with a quantum circuit to find how the shot count for circuit repetitions needs to be calibrated based on various noise levels and error rates to achieve a desired success rate for results of the quantum computation.

- Checking of unitary matrix entries. Such as fine-grained rotation angles which may not make sense for particular hardware.

- Count quantum states. Gather and return counts of quantum states during circuit execution, including counts of the total quantum state transitions, total unique quantum states, and maximum simultaneous quantum states, as well as a distribution of quantum states, and histogram of quantum states over time. That can be expensive and voluminous, so allow qubits and time intervals to be selected to dramatically reduce resource requirements.
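Three of the access patterns above can be illustrated together: rerunning with exactly the same noise and errors, shot counts, and shot-count calibration. The Python sketch below is a minimal illustration under stated assumptions, not any real simulator's API:

- `run_shots` and `shots_needed` are hypothetical names.
- Noise is modeled only as independent bit flips at readout.
- `shots_needed` assumes that success means at least one correct shot, which fits algorithms whose answers can be checked classically.

The key design point is that all randomness flows from one seeded generator, which is what makes an exact rerun possible.

```python
import math
import random
from collections import Counter


def run_shots(ideal_probs, error_rate, shots, seed):
    """Sample `shots` outcomes from ideal probabilities, then flip each measured bit
    independently with probability `error_rate` (a simplified, hypothetical readout-noise model).

    Every random choice comes from one seeded generator, so rerunning with the same
    seed replays exactly the same noise and errors.
    """
    rng = random.Random(seed)
    outcomes = list(ideal_probs)
    weights = [ideal_probs[o] for o in outcomes]
    counts = Counter()
    for _ in range(shots):
        bits = rng.choices(outcomes, weights=weights)[0]
        noisy = "".join(("1" if b == "0" else "0") if rng.random() < error_rate else b
                        for b in bits)
        counts[noisy] += 1
    return counts


def shots_needed(p_correct, confidence):
    """Smallest shot count giving at least one correct result with probability >= confidence,
    assuming each shot is independently correct with probability p_correct."""
    if p_correct <= 0:
        raise ValueError("p_correct must be positive")
    if p_correct >= 1:
        return 1
    return math.ceil(math.log(1 - confidence) / math.log(1 - p_correct))


bell = {"00": 0.5, "11": 0.5}                      # ideal Bell-state distribution
first = run_shots(bell, error_rate=0.02, shots=1000, seed=7)
rerun = run_shots(bell, error_rate=0.02, shots=1000, seed=7)
assert first == rerun                              # same seed, same noise and errors

p_correct = (first["00"] + first["11"]) / 1000     # estimated from the noisy run
print(first, shots_needed(p_correct, 0.99))
```

To test a bug fix against the exact noise that exposed the bug, a user would record the seed of the failing run and rerun the fixed circuit with that seed. A real simulator would also need the fixed circuit to consume random numbers in the same order, which this sketch does not attempt to guarantee.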

Personas for simulation of quantum circuits

There are a variety of categories of persons who have an interest in simulating quantum circuits on classical computers. They have a role or perform a function in their organization. Archetype is another term for persona.

Developers and users are two of the main categories of personas for simulation.

Some personas may be very hands-on and personally use simulators, while others have an interest in the simulation but may not perform the simulation themselves.

Their reasons for simulation are the use cases. Their tasks using simulation are the access patterns.

- Algorithm researchers. Interest in hypothetical or theoretical algorithms on hypothetical or theoretical quantum computers rather than on simply current real quantum computers. Need to debug and analyze the performance of algorithms.

- Quantum hardware engineers. Designing and developing quantum computer hardware itself, the quantum processing unit (QPU). Evaluate predictions for how a proposed or new quantum computer should perform. Configure the simulator to match the noise levels and error rates of the prospective quantum computer. And test and evaluate their new hardware as it is built: does it perform up to their expectations?

- Quantum software engineers. Designing and developing the operating software for quantum computers. Need to test the full quantum computer system, hardware combined with operating software. Not applications per se, but need algorithm and application test cases to validate correct operation of the system. Simulate the real quantum computer to get results to compare against the actual hardware. Assure that the operating software is properly preparing quantum circuits from requests over the network connections and properly executing them on the hardware.

- Users with limited access to current real quantum computers. The machine may be too new to permit wider access or the demand may be so high that access is severely limited.

- Algorithm designers. Need to debug new algorithms (quantum circuits); even if those algorithms will technically run on existing quantum computers, intermediate quantum states cannot be observed there. Need to run a very wide range of tests, more than existing real quantum computers can handle.

- Prospective users of algorithms. Experiment with algorithms to see how well they work and perform. Experiment with noise levels and error rates.

- Application developers. Need to be able to test quantum algorithms at a fast pace, greater than access to real hardware will permit.

- Quality assurance testers. Test algorithms and applications on a very wide range of variability in noise levels and error rates since testing on a single real quantum computer or even a number of real quantum computers may not cover the full range of variability.

- Application usage testers. Test the full application before deployment. Especially if real quantum computers are not available or not available in sufficient volume. And to capture a greater range of the variability of real quantum computer hardware.

- IT planners. Need to plan for both quantum and classical hardware system requirements.

- IT staff. Need to deploy, configure, test, and maintain quantum and classical hardware systems.

- Technical management. Need to understand the capabilities of the hardware that their team is using. Need to understand hardware and service budgeting requirements.

- Research management. More specialized than non-research technical management. Focused more on the science than the engineering or products. Need to understand hardware and service budgeting requirements.

- Executive management. Need to understand the budget and impact of the computer hardware needed by their organization, and the need for research.

- Technical journalists. Understand what the technology does, its purpose, its limits.

- General journalists. A more general understanding of the need for simulation of real quantum computers.

- Students. A wide range of levels, some overlapping with the other personas, especially if involved with research or internships.

- Casual users. Interested in learning about quantum computers, informally.

Users

The term user in this paper refers to any of the personas who are actually running (hands-on) simulations of quantum circuits.

This can range from algorithm designers to quality assurance testers to application testers who are running applications which are using simulation to execute quantum circuits.

Major use case #1: Perfect simulation

Perfect simulation is ideal simulation, free of any noise and free of any errors — flawless, perfect qubit fidelity. And with no limits and with maximum precision. It could also be said to be naive simulation since it doesn’t represent the real world, any real quantum computer.
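At its core, a perfect simulation is exact linear algebra on a state vector. The following minimal, pure-Python sketch prepares a Bell state with no noise and computes exact outcome probabilities. The helper names `apply_single`, `apply_cnot`, and `probabilities` are hypothetical, not from any particular simulator. Because it stores all 2^n complex amplitudes, memory doubles with each added qubit. This is why full state-vector simulation is limited to roughly 50 qubits.

```python
import math


def apply_single(state, gate, target, n):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector (qubit 0 = rightmost bit)."""
    new = state[:]
    for i in range(2 ** n):
        if not (i >> target) & 1:                  # visit each |...0...>, |...1...> pair once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[j] = gate[1][0] * a + gate[1][1] * b
    return new


def apply_cnot(state, control, target, n):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = state[:]
    for i in range(2 ** n):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new


def probabilities(state, n):
    """Exact, noise-free outcome probabilities, keyed by bit string."""
    return {format(i, f"0{n}b"): abs(a) ** 2 for i, a in enumerate(state) if abs(a) > 1e-12}


s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]                              # Hadamard gate

n = 2
state = [1 + 0j] + [0j] * (2 ** n - 1)             # start in |00>
state = apply_single(state, H, 0, n)               # superposition on qubit 0
state = apply_cnot(state, 0, 1, n)                 # entangle: Bell state (|00> + |11>)/sqrt(2)
print(probabilities(state, n))
```

Unlike a real machine, the simulator returns the exact probabilities directly, with no shots and no sampling error. It can also expose the amplitudes themselves, which is what makes it a clean baseline for the other use cases.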

In some sense it’s not terribly useful to simulate a quantum circuit in a way that doesn’t represent how it might operate on a real machine, but there are some special cases:

- It’s great for research in ideal algorithms. Theoreticians contemplating the ideal.

- It’s the baseline or starting point or foundation for all other use cases.

- It’s useful for verifying results of a real machine, such as for Quantum Volume metric calculation and cross-entropy benchmarking.

- It’s also useful for testing and debugging for logic errors. To avoid being distracted or sent on wild goose chases by noise.
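Quantum Volume shows how a perfect simulation is used to verify a real machine's results. The perfect simulation of each random model circuit identifies its heavy outputs, the bit strings whose ideal probability exceeds the median. The real machine's measured counts are then scored by how often they land on heavy outputs, and the machine passes if that fraction, averaged over many circuits, exceeds 2/3 with sufficient statistical confidence. The Python sketch below covers only the scoring step for a single circuit. Generating the random model circuits and the confidence test are omitted. The function names are hypothetical, and the numbers are illustrative, not real measurements.

```python
import statistics


def heavy_outputs(ideal_probs):
    """Bit strings whose ideal probability is above the median of the ideal distribution.
    `ideal_probs` must list every bit string, including those with zero probability."""
    median = statistics.median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > median}


def heavy_output_probability(ideal_probs, counts):
    """Fraction of measured shots (from a real machine) that landed on a heavy output."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots


# Illustrative numbers only, not real measurements.
ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}      # from a perfect simulation
measured = {"00": 380, "01": 270, "10": 200, "11": 150}    # from a real machine
hop = heavy_output_probability(ideal, measured)
print(f"heavy output probability = {hop:.3f}, above 2/3: {hop > 2 / 3}")
```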

Major use case #2: Theoretical ideal real simulation

The theoretical ideal for a quantum computer would not be quite absolutely perfect since there are theoretical limits from quantum mechanics, including an inherent degree of uncertainty.

There’s no clarity as to the precise limits at this time. Presumably Planck units would dictate various limits.

It’s possible that theoretical limits may vary for different qubit technologies, such as trapped ions and superconducting transmon qubits.

In any case, this use case would correctly match the ideal quantum computer in the real world, the best quantum computer which could be produced in this universe.

Major use case #3: Match an existing real quantum computer

This use case is great for evaluating algorithms intended to run on a particular real quantum computer.

The simulator would very closely match the noise, error, and limit profile for a particular real quantum computer — so simulation results very closely match the real machine.

It should include a per-qubit noise profile, since current real machines are problematic enough that noise and errors vary from qubit to qubit.

Superficially, it might seem silly to bother to simulate an actual real quantum computer when you can just as easily run quantum circuits on the real quantum computer, but there are reasons, including:

- Debugging. Intermediate and even final quantum states are not observable on a real quantum computer. A classical quantum simulator can capture and record all quantum states.

- Rapid turnaround for a large number of executions. For massive testing of small quantum circuits, the network latency to run a quantum circuit could easily be much greater than the time the simulator needs to simulate the circuit. Plentiful commodity classical computers could permit a very large number of parallel simulations.

- Limited access to the actual, real quantum computer system. For new machines and for machines in very high demand, the waiting time could greatly exceed the simulation time.

- Faster access and more precise control of execution. Classical code can simulate exactly when it wants and exactly as it wants, not having to deal with all of the vagaries of remote execution in the cloud.

- Precise evaluation of intermediate states. As part of validation of the quality of the actual, real quantum computer, as well as precise validation of the execution of quantum algorithms. Similar to debugging, but used differently — debugging may be more interactive and visual, while validation is likely to be automated.

Major use case #4: Match a proposed real quantum computer

In this case, there is no existing actual, real quantum computer which can be used, so all reasons for executing quantum circuits for such a hypothetical quantum computer will require simulation.

The goal is that the noise, error, and limit profile should very closely match the expectations for the proposed quantum computer.

Users and developers should be able to closely replicate the results expected from the proposed quantum computer.

The philosophy here is to simulate before you build. See what problems might crop up before you expend significant engineering resources building the new quantum computer.

There are really three cases here:

- A relatively minor adaptation of an existing real quantum computer.

- Significant improvements to an existing real quantum computer.

- An entirely new quantum computer. Possibly radically distinct from existing quantum computers.

Major use case #5: Near-perfect qubits

The goal of this use case is to experiment with varying degrees of qubit fidelity, typically to determine what degree of qubit fidelity (nines of qubit fidelity) might be needed to achieve acceptable results.

This will generally be the preferred use case for experimentation rather than the perfect and theoretically ideal use cases since it more closely reflects a quantum computer which might exist at some point in the reasonable future.

The definition of near-perfect will vary over time as real quantum computer hardware evolves.

The goal here is neither to match current hardware nor some distant ideal, but some happy medium which is well above the current state of the art but reachable within a few years. Something that is not quite perfect but close enough for many applications.

Quantum error correction (QEC) may come very close to absolute (or theoretical) perfection, whereas near-perfect qubits are about as close to that perfection as real hardware is expected to get without quantum error correction.

That ideal for near-perfect is probably a bit too extreme, so generally the actual standard for near-perfect should be somewhat better than the current state of the art, such as what might be achieved in two to five years.

And that standard would be a moving target, increasing each year as the hardware advances.

Given the current state of the art for qubit fidelity, in the 1.5 to 2.5 nines range, a standard of 3, 3.5, or maybe even 4 nines might be reasonable as a default for near-perfect qubit fidelity.

It might make sense for the user interface to support an option to specify the standard for near-perfect either as a specific number of nines of qubit fidelity or as a number of years from now, such as five, seven, or ten years, where the default is an estimate of qubit fidelity two or three years from now.

This use case is based on the theoretical ideal real simulation use case, but easily configured for nines of qubit fidelity.

I expect that algorithm researchers would find this use case particularly useful. It would be nice to know what qubit fidelity is needed for a new algorithm to work properly.
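A real study would run the circuit through a noisy simulator at each fidelity level and count correct results. As a stand-in, the following Python sketch uses a deliberately crude analytic model to illustrate the "find maximum error rate" and "report error rate trend" access patterns. The model assumes errors are independent, that any single gate error ruins the result, and that the per-gate error rate is 10^-nines for whole numbers of nines. All function names are hypothetical. Under this model, a 1,000-gate circuit that must succeed 90% of the time would need four nines.

```python
def estimated_success(num_gates, nines):
    """Crude estimate of the chance a run is error-free: independent errors, any single
    gate error ruins the result, and a per-gate error rate of 10**-nines."""
    return (1.0 - 10.0 ** -nines) ** num_gates


def error_rate_trend(num_gates, nines_levels=range(1, 7)):
    """'Report error rate trend': estimated success fraction at each level of nines."""
    return {n: estimated_success(num_gates, n) for n in nines_levels}


def minimum_nines(num_gates, target, nines_levels=range(1, 7)):
    """'Find maximum error rate': fewest nines whose estimated success meets `target`,
    or None if no level in the range is good enough."""
    for n in nines_levels:
        if estimated_success(num_gates, n) >= target:
            return n
    return None


for nines, success in error_rate_trend(1000).items():
    print(f"{nines} nines: {success:.1%} of runs expected to be error-free")
print("minimum nines for 90% success:", minimum_nines(1000, 0.90))
```

Swapping `estimated_success` for the observed success rate of a noisy simulation would turn this into the empirical version of the same sweep, without changing the other two functions.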

Some of the more common configurations:

- Two nines. Roughly current state of the art.

- 2.5 nines. A more precise reference to the current state of the art. Corresponds to 99.5% reliability or a 0.5% error rate (0.005 in decimal form).

- Three nines. A little better than the best we have today. May or may not be suitable for quantum error correction.

- Four nines. A stretch from where we are today. Probably enough for initial quantum error correction.

- Five nines. Possibly a limit for current quantum hardware technologies. Probably needed for efficient quantum error correction.

- Six nines. Near-perfect qubit fidelity.

- Nine nines. Even closer to perfect.

- Twelve nines. Possibly beyond what we can expect to achieve.

- Fifteen nines. Almost certainly beyond what we can expect to achieve. But still useful for algorithm research to test the limits of quantum computing.
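The list mixes whole and half nines, so it can help to be explicit about how a number of nines maps to an error rate. Here is a minimal sketch, assuming the informal convention the list itself uses: each whole nine adds a 9 digit, and a half nine adds a trailing 5 (so 2.5 nines is 99.5%). For comparison, it also shows the strictly logarithmic reading, nines = −log10(error rate), under which 99.5% works out to only about 2.3 nines. The function names are hypothetical.

```python
import math

def error_rate_from_nines(nines: float) -> float:
    """Error rate under the informal convention used in the list above.

    Whole nines add a '9' digit (2 nines = 99%, 3 nines = 99.9%);
    a half nine adds a trailing '5' digit (2.5 nines = 99.5%).
    """
    whole = math.floor(nines)
    fraction = nines - whole
    if fraction == 0:
        return 10.0 ** -whole
    if fraction == 0.5:
        return 0.5 * 10.0 ** -whole
    raise ValueError("this convention only supports whole or half nines")

def log_nines_from_error_rate(error_rate: float) -> float:
    """Strictly logarithmic nines: -log10(error rate)."""
    return -math.log10(error_rate)

for n in (2, 2.5, 3, 4, 6):
    err = error_rate_from_nines(n)
    print(f"{n} nines: fidelity {1 - err:.6%}, error rate {err:g}, "
          f"logarithmic nines {log_nines_from_error_rate(err):.2f}")
```

Whichever convention a simulator adopts, it should say so, since the two readings diverge for half nines.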

Determining what the results of a quantum circuit should be — without any of the noise of a real quantum computer

It is commonly the case that one cannot easily predict in advance what results to expect from a quantum circuit. You can run the circuit on a real quantum computer, but then you can’t easily tell whether the results are correct or are skewed by noise and hardware errors. This is where simulation can help.

A perfect simulation can let you know what the results of the circuit should be if there is no noise or errors.

A near-perfect simulation can let you know what the results should be with various degrees of noise and errors.

Then you can compare the actual results from running the circuit on a real quantum computer with these ideal results.
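To make the comparison concrete, here is a minimal sketch, assuming a tiny hand-written statevector simulator (not any particular product’s API). It computes the noise-free output distribution of a two-qubit Bell circuit and measures how far a set of counts deviates from it using total variation distance. The `measured` counts are made-up placeholders standing in for results from a real machine.

```python
import math

def apply_h(state, target, n):
    """Apply a Hadamard to `target` in an n-qubit statevector (qubit 0 = least significant bit)."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(2 ** n):
        if not (i >> target) & 1:
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i], new[j] = s * (a + b), s * (a - b)
    return new

def apply_cnot(state, control, target, n):
    """Swap amplitudes of basis states differing only in `target` when `control` is 1."""
    new = state[:]
    for i in range(2 ** n):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new

def probabilities(state, n):
    """Map each bitstring (qubit n-1 leftmost) to |amplitude|^2."""
    return {format(i, f"0{n}b"): abs(a) ** 2 for i, a in enumerate(state)}

def total_variation_distance(ideal, counts):
    """0 means the measured frequencies match the ideal distribution exactly; 1 means disjoint."""
    shots = sum(counts.values())
    keys = set(ideal) | set(counts)
    return 0.5 * sum(abs(ideal.get(k, 0.0) - counts.get(k, 0) / shots) for k in keys)

n = 2
state = [1 + 0j] + [0j] * (2 ** n - 1)   # start in |00>
state = apply_h(state, 0, n)
state = apply_cnot(state, 0, 1, n)
ideal = probabilities(state, n)
print(ideal)   # approximately {'00': 0.5, '01': 0.0, '10': 0.0, '11': 0.5}

# Placeholder counts standing in for results from a real (noisy) machine.
measured = {"00": 480, "01": 20, "10": 25, "11": 475}
print(f"total variation distance: {total_variation_distance(ideal, measured):.3f}")
```

A distance near zero suggests the hardware is producing what the logic intends; a large distance points to noise, errors, or both.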

Quantum error correction (QEC)

Full and robust implementation of quantum error correction (QEC) is still so far off in the future that it’s hardly worth discussing in depth at this juncture, but it’s worth noting that we need to at least prepare a placeholder for it.

The first question is whether QEC should be simulated at the physical qubit level or at the logical qubit level. I lean towards the latter, since it would be much simpler and much more efficient, while simulating the many physical qubits behind each logical qubit would be extremely expensive.

In fact, it wouldn’t be practical to simulate even a single logical qubit if 65, or even 57, physical qubits were required to implement it (two proposals from IBM), since 50 qubits is essentially the upper limit for classical quantum simulation.

For more detail on quantum error correction, read my paper:

- Preliminary Thoughts on Fault-Tolerant Quantum Computing, Quantum Error Correction, and Logical Qubits

- https://jackkrupansky.medium.com/preliminary-thoughts-on-fault-tolerant-quantum-computing-quantum-error-correction-and-logical-1f9e3f122e71

Would a separate use case be needed for quantum error correction?

I don’t think a separate use case would be needed for quantum error correction. Rather, the profile for a quantum computer supporting quantum error correction would be couched in terms of logical qubits with a trivial noise model in which noise and errors would be absolutely nonexistent or at most very tiny.

I suspect that many of the earliest quantum computers to support quantum error correction will have two modes — raw uncorrected physical qubits or logical qubits only. Each of those two modes would have a distinct profile to guide simulation.

Alternatively, there could be a boolean parameter, available in all of the use cases, indicating whether physical or logical qubits are to be used for simulation. The simulator would use it internally to select which of the two profiles to use for the selected target machine. The default would be logical or physical qubits depending on what the dominant usage is expected to be: the earliest implementations might still be used predominantly as physical qubits, while eventually most usage might switch to logical qubits.
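A minimal sketch of that selection logic follows. Everything in it is hypothetical: the `SimulationProfile` and `TargetMachine` types, the `select_profile` function, and all of the numbers are placeholders for illustration. It also carries a small, non-zero residual error rate in the logical profile, anticipating the question of whether QEC will really be perfect.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class SimulationProfile:
    """Hypothetical profile describing one mode of a target machine."""
    qubit_kind: str        # "physical" or "logical"
    qubit_count: int
    error_rate: float      # per-gate error rate; residual QEC error for logical qubits

@dataclass(frozen=True)
class TargetMachine:
    """Hypothetical target with one profile per mode plus a dominant-usage default."""
    name: str
    physical: SimulationProfile
    logical: SimulationProfile
    default_logical: bool

def select_profile(machine: TargetMachine, use_logical: Optional[bool] = None) -> SimulationProfile:
    """Pick the physical or logical profile; None falls back to the machine's default."""
    if use_logical is None:
        use_logical = machine.default_logical
    return machine.logical if use_logical else machine.physical

machine = TargetMachine(
    name="example-qec-machine",                       # hypothetical machine
    physical=SimulationProfile("physical", 1000, 1e-3),
    logical=SimulationProfile("logical", 16, 1e-9),    # tiny residual error, not zero
    default_logical=False,                             # early machines: mostly physical use
)
print(select_profile(machine))
print(select_profile(machine, use_logical=True))
```

Keeping the two modes as separate profiles means every use case and access pattern works unchanged; only the profile lookup differs.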

Will quantum error correction really be perfect?

One open question about QEC is whether it will actually be able to achieve true, absolute error-free operation, or whether there will always be some degree of residual error rate, however tiny it may be.

The earliest implementations of QEC may in fact still have some relatively significant, non-tiny residual error rate.

It should be expected that any residual error rate under QEC will decline as the fidelity of the underlying physical qubits gradually improves.

It is also worth noting that there may also be a theoretical residual error rate due to the nature of uncertainty in quantum mechanics.

In any case, any residual error rate under QEC should be included in the QEC noise model of the profile for an error-corrected target quantum computer.

Should debugging be a major use case?

I go back and forth on this. Debugging certainly seems to be a major use of classical quantum simulators, but it applies to all of the major use cases, so it’s more of an access pattern than a use case per se. Still, part of me wants to consider it a major use case anyway.

Ultimately, the primary criterion for deciding that debugging (or analysis) is not a use case per se is that the key distinction between the major use cases is the set of criteria used to decide how to configure noise and error rates. Debugging (or analysis) applies across all configurations of noise and error rates.

For now, I’ll consider it to be an access pattern which applies to all of the major use cases, but I can understand if others wish to treat it as a distinct use case.

Alternatively, debugging could be considered a minor use case, distinct from the major use cases — and the intent of this paper was to focus on the major use cases, as well as enumerating access patterns and personas.

Generally, the use cases revolve around criteria for configuring noise and error rates

For the most part, the key distinction between the major use cases is the set of criteria to use to decide how to configure noise and error rates.

This is one of the reasons why debugging and analysis are not major use cases per se, since they don’t configure noise or error rate, and they apply to all configurations of noise and error rate — all use cases.

Simulate before you build

A key use case of a classical quantum simulator is to evaluate hardware development before it gets built. The goal is to try out quantum algorithms and quantum circuits before the hardware is even built — to see how it functions and see how it performs, before the heavy expense of hardware implementation.

There are two distinct use cases:

- Simulate a completely new quantum computer. Not based on a current quantum computer.

- Simulate incremental (or even radical) enhancements to an existing quantum computer.

In the latter case, if a simulation profile already exists for the existing quantum computer, implementing simulation of the enhancements can be a relatively straightforward process.
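A minimal sketch of why this is straightforward: if a profile is an immutable record, a proposed enhancement is just a copy with a few fields changed, using the standard library’s `dataclasses.replace`. The `MachineProfile` fields and all values are hypothetical placeholders, not real machine data.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class MachineProfile:
    """Hypothetical noise and size profile for a target quantum computer."""
    name: str
    qubit_count: int
    single_qubit_error: float
    two_qubit_error: float
    readout_error: float

existing = MachineProfile("existing-machine", 27, 5e-4, 1e-2, 2e-2)   # placeholder values

# Simulate before you build: copy the existing profile, changing only the proposed enhancements.
proposed = replace(existing, name="existing-machine-upgrade",
                   qubit_count=65, two_qubit_error=2e-3)

print(proposed)
```

Everything not mentioned in the proposal (here, single-qubit and readout error) is inherited from the existing machine, which keeps the comparison between "before" and "after" honest.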

Why simulate if real hardware is available?

It’s easy to understand that a classical quantum simulator is needed when real quantum computer hardware is not available, but there are a number of other reasons to use a classical quantum simulator beyond simply executing a quantum circuit and capturing the results:

- Test algorithms which cannot be run on existing real quantum computers, primarily due to low qubit fidelity of current hardware.

- Test algorithms and applications for qubit fidelities and gate error rates that differ from those of current real quantum computers.

- Debugging — not possible on real quantum computers. Is your algorithm failing due to bugs in your logic or limitations of the hardware?

- Other forms of analysis, as enumerated in the access pattern section, beyond simply capturing results of full circuit execution.

- To capture the results of simple quantum circuit execution instantly for testing purposes, without any of the latency, delay, or unavailability associated with queuing for the scarce real quantum computers available today and for the indefinite future.

- For smaller simulations, many simulator instances can run on a very large distributed cluster to achieve many more runs than may be possible with shared cloud access to very limited real quantum computers.

Noise models

Noise models are important and even essential to reasonable and realistic simulation of quantum computers, but this paper will not delve into any specific details of how noise models do or should function, other than to state the purpose and goals for noise models in the various use cases.

Per-qubit noise and error model

Not all qubits are created equal. Subtle design nuances and manufacturing variations may cause some otherwise identical qubits to function somewhat differently, particularly in terms of noise and error rates. The net effect is that if one wishes to configure a classical quantum simulator to fairly closely mimic a real quantum computer, then there potentially need to be subtle differences in the noise and error rate modeling on a per-qubit basis.

This is not something that an average user would ever need to be concerned with, but the engineer developing the noise model for a particular quantum computer would have to focus on this level of detail.
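A minimal sketch of what per-qubit modeling might look like, assuming a deliberately simplified noise model in which errors show up only as readout bit flips. The `PerQubitBitFlipNoise` class and its numbers are hypothetical. Each qubit can override a machine-wide default error rate, so only the unusual qubits need to be described.

```python
import random
from typing import Dict, List, Optional

class PerQubitBitFlipNoise:
    """Hypothetical per-qubit noise model: each qubit may override a machine-wide
    default error rate. Errors are modeled only as readout bit flips here."""

    def __init__(self, default_error: float, per_qubit: Optional[Dict[int, float]] = None):
        self.default_error = default_error
        self.per_qubit = dict(per_qubit or {})

    def error_rate(self, qubit: int) -> float:
        """Error rate for one qubit, falling back to the default."""
        return self.per_qubit.get(qubit, self.default_error)

    def apply_to_readout(self, bits: List[int], rng: random.Random) -> List[int]:
        """Flip each measured bit independently with its own qubit's error probability."""
        return [b ^ 1 if rng.random() < self.error_rate(q) else b
                for q, b in enumerate(bits)]

# Placeholder numbers: qubit 3 is a noticeably weaker qubit than the others.
noise = PerQubitBitFlipNoise(default_error=0.01, per_qubit={3: 0.05})
print(noise.apply_to_readout([0, 0, 0, 0, 0], random.Random(7)))
```

A real noise model would also cover gate errors, decoherence, and crosstalk, but the override-a-default structure is the part the noise-model engineer would actually tune per qubit.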

Noise models analogous to classical device drivers

A noise model for a classical quantum simulator functions in a somewhat analogous manner to a device driver on a classical computer — a package of software which controls the hardware (simulation), including various properties or parameters to fine-tune that control.

Most users should not create, edit, look at, or even be aware of the details of noise models

Most users (personas) will simply use noise models which have been prepared in advance to match the major use cases, so they won’t have any need to create, edit, or even look at the details of the noise model that their simulation runs are using.

All that most personas will need to do is pick the major use case and identify the quantum computer they are trying to simulate, and that will fully determine which noise model is used. They might also set a very small number of parameters, such as nines of qubit fidelity, if they are trying to simulate the impact of a quantum computer that may have higher qubit fidelity than current quantum computers.

Advanced personas, such as researchers and quantum hardware engineers, will need to create and edit noise models, but their needs should not cause less sophisticated personas to have any need to create, modify, look at, or even be aware of the details of noise models.

High-level control of noise models

The noise models of today require that the user have a significant amount of knowledge about how the noise model works in order to tune or control the noise model, but most average users will not possess that level of knowledge. Instead, noise models need to have high-level parameters that are easy for average users to understand, that relate more to the needs of applications and algorithm design.

Selecting nines of qubit fidelity for noise models

The most useful and best-known parameter for a noise model would be nines of qubit fidelity. This is something that average users will be able to relate to.

The default should correspond to the approximate current state of the art for qubit fidelity of real quantum computers.

There could also be an alternate default corresponding to current expectations for where qubit fidelity will be in about two years, for users who are developing algorithms today but don’t expect to actually use them in practice for another two years.
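A minimal sketch of this kind of high-level control, in which nines of qubit fidelity is the only knob most users ever see. It has two built-in defaults: the current state of the art and a two-year-out estimate. The default values (2 and 3 nines) are placeholders chosen from the ranges discussed in this paper, not measurements. The mapping to a per-gate error rate, 10^−nines, is an assumption that uses the logarithmic reading of nines. The `noise_settings` function is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder defaults, chosen from the ranges discussed in this paper.
CURRENT_STATE_OF_ART_NINES = 2.0
TWO_YEAR_ESTIMATE_NINES = 3.0

@dataclass(frozen=True)
class NoiseSettings:
    """Hypothetical high-level noise settings derived from one user-facing knob."""
    nines: float
    gate_error_rate: float

def noise_settings(nines: Optional[float] = None, looking_ahead: bool = False) -> NoiseSettings:
    """Resolve nines of qubit fidelity (explicit, or one of two defaults),
    then derive a per-gate error rate as 10**-nines."""
    if nines is None:
        nines = TWO_YEAR_ESTIMATE_NINES if looking_ahead else CURRENT_STATE_OF_ART_NINES
    return NoiseSettings(nines=nines, gate_error_rate=10.0 ** -nines)

print(noise_settings())                     # current default
print(noise_settings(looking_ahead=True))   # alternate two-year default
print(noise_settings(nines=4))              # explicit user choice
```

The detailed noise-model parameters would be derived from `gate_error_rate` behind the scenes, so the average user never has to touch them.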

For more on nines of qubit fidelity, see my paper:

Viewing effective nines of qubit fidelity

Regardless of the use case or setting of parameters for the noise model, it would be extremely useful for the simulator to display the effective nines of qubit fidelity that result from that noise model.

In many cases the qubit fidelity will be a fixed constant or easily calculable from other noise model parameters, but in some cases simulating a small benchmark test circuit might be needed to empirically observe the effective qubit fidelity.

In any case, it would be highly advisable to get users into the mindset of thinking of noise and errors in terms of nines of qubit fidelity.
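When effective fidelity can’t be read directly off the noise-model parameters, a small benchmark run can estimate it. The following is a minimal sketch, assuming a toy circuit of an even number of X gates applied to |0⟩ (so the ideal result is always 0), with a hard-coded per-gate bit-flip probability standing in for the configured noise model. The per-gate error is approximated as the circuit error divided by the depth, which holds only when errors are rare. Both function names are hypothetical.

```python
import math
import random

def run_benchmark(gate_error: float, depth: int, shots: int, seed: int) -> float:
    """Toy benchmark: apply `depth` X gates to |0>, each followed by a bit flip
    with probability `gate_error`; return the fraction of wrong results."""
    assert depth % 2 == 0, "even depth keeps the ideal result at 0"
    rng = random.Random(seed)
    wrong = 0
    for _ in range(shots):
        bit = 0
        for _ in range(depth):
            bit ^= 1                       # ideal X gate
            if rng.random() < gate_error:
                bit ^= 1                   # injected bit-flip error
        wrong += bit
    return wrong / shots

def effective_nines(circuit_error: float, depth: int) -> float:
    """Approximate per-gate error as circuit error / depth (valid when errors are rare)."""
    if circuit_error == 0:
        return math.inf
    return -math.log10(circuit_error / depth)

err = run_benchmark(gate_error=1e-3, depth=10, shots=100_000, seed=42)
print(f"circuit error {err:.4f}, effective nines ~ {effective_nines(err, 10):.2f}")
```

With a configured gate error of 10^−3, the estimate should land close to 3 nines, which is a useful sanity check that the noise model does what its parameters claim.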

Inventory of known quantum computers

The model proposed by this paper presumes that there will be a library or inventory of profiles for target quantum computers as well as for noise models and other parameters and properties for all known existing, ideal, and proposed quantum computers.

Parameters and properties for use cases and access patterns

Various parameters or properties may need to be specified for particular use cases or access patterns.

The hope is that most such parameters and properties will have intelligent default values so that an average user will not feel the need to specify many parameters or properties at all.

Precision in simulation

Precision comes into play in simulation in five ways:

- Precision of entries in unitary matrices. For execution of quantum logic gates.

- Precision of quantum probability amplitudes. For the quantum state of qubits during simulation.

- Precision of phase. A subset of the quantum state of qubits. For the quantum state of qubits during simulation.

- Precision of readout of quantum probability amplitudes. For the quantum state of qubits during simulation as displayed or returned for debugging and analysis of qubit state.

- Precision of readout of phase. A subset of the quantum state of qubits. For the quantum state of qubits during simulation as displayed or returned for debugging and analysis of qubit state.

In theory, each use case should have its own profile for precision in each of these five cases:

- A perfect simulation should have the maximum possible precision.

- A theoretical real ideal simulation should have a precision which closely matches the theoretical ideal, as defined by quantum mechanics.

- A simulation of a real machine should match the effective precision of the actual hardware, firmware, and software.

- A near-perfect simulation should use a precision which matches the best estimate of a real machine which would achieve the nines of qubit fidelity selected for the simulation.

A naive simulator might simply use double precision floating point or some other convenient or default precision, but accurate simulation should be more particular as to precision so that it is indeed a close match to the precision of the intended target quantum computer.

There may be some theoretical limit to precision, such as some Planck-level unit. There may be some theoretical quantum uncertainty for precision, based on quantum mechanics. There may also be some theoretical limit which may vary depending on the particular qubit technology. There may also be a practical limit based on the particular implementation of the particular qubit technology.

In any case, there should be some degree of control over precision of the operation of the simulator, driven by the profile of the target quantum computer.
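To illustrate why the choice of precision matters, here is a minimal sketch, assuming a hypothetical target that stores each real and imaginary part of an amplitude as a fixed-point number with a given count of fractional bits. It quantizes a uniform three-qubit superposition and reports how far the total probability drifts from 1. The fixed-point representation is an assumption for illustration; real hardware may constrain precision quite differently.

```python
import math

def quantize(x: float, fractional_bits: int) -> float:
    """Round x to the nearest multiple of 2**-fractional_bits (hypothetical fixed-point target)."""
    scale = 2 ** fractional_bits
    return round(x * scale) / scale

def norm_error(amplitudes, fractional_bits: int) -> float:
    """How far the total probability drifts from 1 after quantizing each real/imag part."""
    total = 0.0
    for a in amplitudes:
        re = quantize(a.real, fractional_bits)
        im = quantize(a.imag, fractional_bits)
        total += re * re + im * im
    return abs(total - 1.0)

# Uniform superposition over 3 qubits: every amplitude is 1/sqrt(8).
state = [complex(1 / math.sqrt(8), 0)] * 8
for bits in (4, 8, 16, 52):
    print(f"{bits:2d} fractional bits: norm error {norm_error(state, bits):.2e}")
```

A profile-driven simulator would pick `fractional_bits` (or an equivalent setting) from the target quantum computer rather than silently using whatever the host language defaults to.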

Precision in unitary matrices

The precision of real numbers in entries in unitary matrices is an interesting challenge.

There are likely theoretical limits, derived from quantum mechanics. These should be clearly documented. Especially if they may vary between different qubit technologies.

The entries in unitary matrices are complex numbers, which consist of real and imaginary parts, each part possibly having a separate precision.

Granularity of phase and probability amplitude

Each quantum computer architecture, each qubit technology, each qubit implementation, and quantum mechanics itself may constrain the degree of granularity of both phase angles and probability amplitudes. As such, this granularity needs to be reflected in the configuration of classical quantum simulators.

The simulators should be configurable to detect, optionally report, and optionally abort if quantum logic gates violate granularity limits of the target quantum computer. Configuration settings should optionally allow very tiny variations, such as rounding errors, to be ignored, but too-small rotations should be detected as possible algorithm bugs, since they ask the quantum computer to do something which it is incapable of doing.
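A minimal sketch of such a check, assuming a hypothetical target whose smallest supported rotation step is π/64 (a placeholder, not a real device limit). A rotation passes if it is a whole number of steps, within a rounding tolerance. Otherwise it is either reported as a warning or, when `abort=True`, rejected outright. Rotations smaller than one step are caught automatically, because they round to zero steps. The function and exception names are hypothetical.

```python
import math

class GranularityViolation(Exception):
    """Raised when a gate asks for finer rotation than the target supports."""

def check_rotation(angle: float, min_step: float, tolerance: float = 1e-9,
                   abort: bool = False, report=print) -> bool:
    """Return True if `angle` (radians) is a whole number of the target's smallest
    supported rotation `min_step`, ignoring rounding error up to `tolerance`."""
    steps = angle / min_step
    residual = abs(steps - round(steps)) * min_step   # leftover rotation in radians
    if residual <= tolerance:
        return True
    message = (f"rotation {angle:.6g} rad is not a multiple of {min_step:.6g} rad "
               f"(residual {residual:.3g} rad)")
    if abort:
        raise GranularityViolation(message)
    report("warning: " + message)
    return False

# Hypothetical target that can only rotate in steps of pi/64.
step = math.pi / 64
check_rotation(math.pi / 4, step)      # accepted: exactly 16 steps
check_rotation(math.pi / 256, step)    # reported: finer than the target allows
```

In the perfect simulation use case, `min_step` would simply be absent unless the user deliberately adds a limit to validate circuits against a particular target.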

The perfect simulation use case is an exception since it has no limits per se, although the user may start with that base and then add limits, which can then be used to validate input circuits.

Quantum computer hardware vendors should clearly and precisely document any granularity limits for phase and probability amplitude.

For more on granularity of phase and probability amplitude, see my paper:

- Beware of Quantum Algorithms Dependent on Fine Granularity of Phase

- https://jackkrupansky.medium.com/beware-of-quantum-algorithms-dependent-on-fine-granularity-of-phase-525bde2642d8

Limitations of simulation of quantum circuits

Simulation of quantum computers is not a perfect panacea. There are various limitations, such as:

- It is believed that simulation of circuits using more than about 50 qubits will not be practical since the number of potential quantum states grows exponentially as the number of qubits grows, so 50 qubits would imply 2⁵⁰ quantum states — roughly one quadrillion states.

- Performance of simulation of larger circuits can be problematic, both in time and the amount of storage required.

- At present, 40 qubits may be the practical limit for simulation, and maybe less.

- Finely tuning noise models to accurately model real machines is the ideal, the goal, but accuracy is not guaranteed and could potentially be problematic.
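The exponential growth is easiest to see as memory. Here is a minimal sketch, assuming a full statevector simulation that stores each of the 2^n amplitudes as a double-precision complex number (16 bytes). Other simulation methods, including the alternative modes mentioned later, trade generality for lower cost.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full statevector: 2**n complex amplitudes.
    16 bytes assumes double-precision complex (two 8-byte floats)."""
    return (2 ** num_qubits) * bytes_per_amplitude

def human(nbytes: int) -> str:
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n in (30, 40, 50):
    print(f"{n} qubits: {2 ** n:,} amplitudes, {human(statevector_bytes(n))}")
```

Each additional qubit doubles the requirement: 30 qubits fit in a workstation’s 16 GiB, 40 qubits need 16 TiB, and 50 qubits need 16 PiB, which is why the practical limit sits somewhere in that range.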

Simulation details

The full, detailed feature set for simulating quantum circuits is beyond the scope of this informal paper. From the perspective of this paper, quantum circuit simulation is an opaque black box: a circuit goes in and a result comes out. The various use cases and access patterns do affect what happens inside the box, but most of those details are beyond the scope of this informal paper.

Debugging features

The full, detailed feature set for debugging quantum circuits is beyond the scope of this informal paper. From the perspective of this paper, the point is simply that rich debugging features are needed. There will be two categories of features:

- The full richness of debugging on classical computers. Not all classical debugging features will be relevant to quantum computing, but certainly a nontrivial fraction.

- A full set of quantum-specific debugging features. Taking into account the nature of quantum state with complex probability amplitudes which can span any number of qubits when qubits become entangled, so that the state can no longer be factored into a product of individual qubit states.

Use of perfect simulation to test for and debug logic errors

Perfect simulation is also good for testing a wide range of input values to confirm expected results and detect any logic errors, again without the distraction of noise. Of course such testing should also be performed with a realistic noise and error model, but first do the testing with noise-free perfect simulation to shake out most of the logic errors before introducing noise into the mix.

Even if the simulator is configured to closely match the noise and error profile of a real quantum computer (or even any hypothetical noisy quantum computer), the user faces the problem that they cannot determine whether unacceptable results are due to noise and errors or flaws in the logic of their algorithms. This distinction can be resolved by running that same algorithm using the perfect simulation use case where noise and errors have no role, so that any unacceptable results would thus be due to flaws in the logic of the algorithm.

It would still be advisable for users to run with a use case and noise and error model which matches the quantum computer on which the user intends their algorithm to run. It’s only when results appear unacceptable that perfect simulation may be needed.

It may still be useful to use the simulator configured to match a target quantum computer, rather than a real quantum computer, for various reasons, such as:

- To facilitate debugging.

- To facilitate analysis of intermediate quantum state.

- For more controlled execution.

- For more rapid execution (at least for smaller circuits.)

Rerun a quantum circuit with the exact same noise and errors to test a bug fix

Some quantum circuit failures may be due to logic bugs that only show up for particular combinations of noise and errors. Such bugs can be extremely difficult to detect and track down. And even when a logic fix is found, it can be just as difficult to verify the fix, since the original failure depended on some particular combination of noise and errors.

The trick is to have an access pattern for each of the major use cases to set the random number seed to exactly reproduce a noise scenario to test a bug fix. This also depends on each simulation run for a quantum circuit returning the random number seed which was used for that simulation run.

A circuit may work reasonably well for most cases, but occasionally fail based on random noise or errors.

One might have a fix or add logic to help track down the reason for the circuit not being resilient to that particular noise or error condition, but simply rerunning the simulation will not normally guarantee that the same exact pattern of noise and errors will occur.

But if the random number seed of the failed test case simulation is captured and then used to set the random number seed for a re-test, the exact noise and error scenario can be reliably replicated as often as needed, with no need for exhaustive trial and error testing.

Whether this feature should be a discrete access pattern or simply a parameter for a quantum circuit execution access pattern (normal or debugging) is left unspecified — either would work.
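A minimal sketch of the seed-capture idea, assuming a toy noisy run in which each qubit’s ideal result is 0 and noise flips it with some probability. The `simulate_noisy_run` function is hypothetical. What matters is that it always returns the seed it actually used, even when the caller didn’t supply one, so a failing run can be replayed exactly.

```python
import random
import secrets
from typing import List, Optional, Tuple

def simulate_noisy_run(num_qubits: int, error_rate: float,
                       seed: Optional[int] = None) -> Tuple[List[int], int]:
    """Toy noisy run: each qubit's ideal result is 0, flipped with probability
    `error_rate`. Returns (result bits, seed actually used)."""
    if seed is None:
        seed = secrets.randbits(64)        # fresh seed, but recorded and returned
    rng = random.Random(seed)
    bits = [1 if rng.random() < error_rate else 0 for _ in range(num_qubits)]
    return bits, seed

# A run fails; capture its seed, apply the fix, and replay the identical noise pattern.
first, used_seed = simulate_noisy_run(8, error_rate=0.2)
replay, _ = simulate_noisy_run(8, error_rate=0.2, seed=used_seed)
assert replay == first
print(used_seed, first)
```

Whether the seed is a parameter of the normal execution access pattern or its own access pattern, the key requirement is the same: every run must report its seed.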

Checking of unitary matrix entries

Many quantum logic gates are very easily translated into very simple unitary matrix values, but some gates are more sophisticated, such as those relying on angles which are not integer multiples of 90 degrees, or on multiples or fractions of pi. Algorithms which rely on fine granularity of angles, such as quantum Fourier transform (QFT) and quantum phase estimation (QPE), can be very problematic on noisy NISQ quantum computers.

Validation of reasonable unitary matrix entry values certainly depends largely on the entry values themselves, but also on the range of possible noise. It’s one thing for noise and errors to occasionally cause problems, but it’s another thing if the matrix entry values are virtually guaranteed to fail to give acceptable results based on the chosen (or implied by the use case) noise model. It would be better to cleanly report the problematic gates rather than leave the user to struggle to figure out why they are getting unacceptable results.

So, it would be helpful to have an access pattern which validates each unitary matrix before the circuit is simulated.

Some checks cannot be made on the raw unitary matrix entry values themselves since the entries are computed outside of the simulator and before the simulation based on high level parameters, such as rotation angles. It would be better to validate the angles themselves before they are used to compute the matrix entry values. That way, the user can be given very direct and specific guidance as to what is causing the problem, such as the fact that a given target quantum computer or noise model does not adequately support the specified parameter values, such as fine-grained rotation angles.

It’s possible to validate the raw, final unitary matrix entry values, such as detecting that they rely on a degree of precision which is not supported by the chosen target quantum computer or noise model, but at that stage the simulator won’t be able to report what high-level parameters were used to compute the matrix entry values.

It might generally be desirable to set two threshold values for matrix entries, one threshold of precision for advisory reporting of suspect entry values but continue execution, and the other an abort threshold which stops the simulation if excessive precision is attempted.

One difficulty to be addressed is that quite a few unitary matrix entry values will rely on irrational real numbers, such as the square root of 2 or a multiple or fraction of pi, which implicitly require infinite precision, so that a simplistic check for excessive precision would always indicate a problematic situation. Some sort of symbolic representation of matrix entries would be helpful, so that square roots and pi would be calculated within the simulator, to the precision specified for the target quantum computer, rather than before the unitary matrix is sent to the simulator.
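A minimal sketch of the symbolic approach for one gate, Rz(θ) = diag(e^{−iθ/2}, e^{+iθ/2}). It assumes the angle arrives as an exact fraction of π (Python’s `fractions.Fraction`), so the precision checks run on the exact denominator rather than on a rounded float. The irrational cosine and sine values are evaluated only inside the simulator and then rounded to a hypothetical fixed-point precision of the target. The advisory and abort thresholds mirror the two-threshold idea above. The function name and threshold values are hypothetical.

```python
import math
from fractions import Fraction

class PrecisionAbort(Exception):
    """Raised when an angle needs more precision than the abort threshold allows."""

def rz_matrix(angle_over_pi: Fraction, fractional_bits: int,
              advisory_denominator: int = 64, abort_denominator: int = 1024):
    """Build Rz(theta), theta = angle_over_pi * pi, from an exact symbolic angle.

    Checks run on the exact fraction; cos/sin are evaluated only here and then
    rounded to the target's (hypothetical) fixed-point precision.
    """
    denom = angle_over_pi.denominator
    if denom > abort_denominator:
        raise PrecisionAbort(f"angle pi*{angle_over_pi} is finer than pi/{abort_denominator}")
    if denom > advisory_denominator:
        print(f"advisory: angle pi*{angle_over_pi} is finer than pi/{advisory_denominator}")

    scale = 2 ** fractional_bits

    def q(x: float) -> float:                      # round to the target's precision
        return round(x * scale) / scale

    half = float(angle_over_pi) * math.pi / 2      # Rz = diag(e^{-i theta/2}, e^{+i theta/2})
    c, s = q(math.cos(half)), q(math.sin(half))
    return [[complex(c, -s), 0j], [0j, complex(c, s)]]

print(rz_matrix(Fraction(1, 2), fractional_bits=16))   # Rz(pi/2): S up to a global phase
```

Because the check sees π/2048 as exactly that, rather than as a long float, the error message can name the offending high-level parameter instead of an opaque matrix entry.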

Need for blob storage for quantum states

Even a moderate-sized algorithm could generate a vast number of quantum states, far too many to directly return to an application which wishes to analyze all of the gory details of a simulation run.

This calls for some form of so-called blob (binary large object) storage, where the simulator can store the intermediate quantum states and return an identifier which would allow the application or user to access the detailed quantum states at some later time based on that identifier for the simulation run.
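A minimal sketch of the interface, assuming an in-memory dictionary as a stand-in for real blob storage (a production system would write to disk or an object store). The `QuantumStateBlobStore` class is hypothetical. The simulator hands over the intermediate states and gets back an opaque identifier, and the application fetches the details later only if it needs them.

```python
import json
import uuid

class QuantumStateBlobStore:
    """Hypothetical in-memory stand-in for blob storage of intermediate quantum states."""

    def __init__(self):
        self._blobs = {}

    def put(self, states) -> str:
        """Serialize a list of statevectors and return an opaque run identifier."""
        run_id = str(uuid.uuid4())
        payload = [[[a.real, a.imag] for a in state] for state in states]
        self._blobs[run_id] = json.dumps(payload).encode("utf-8")
        return run_id

    def get(self, run_id: str):
        """Fetch and deserialize the states stored for a previous run."""
        payload = json.loads(self._blobs[run_id].decode("utf-8"))
        return [[complex(re, im) for re, im in state] for state in payload]

store = QuantumStateBlobStore()
run_id = store.put([[1 + 0j, 0j], [0.7071 + 0j, 0.7071 + 0j]])   # state after each gate
print(run_id, store.get(run_id)[1])
```

Only the short `run_id` travels back with the simulation result; the potentially enormous state history stays in storage.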

What to do about shot counts for large simulations

Another open question is what to do about shot counts (circuit repetitions) for simulation of larger quantum circuits where the simulation could be very expensive and generate a vast number of quantum states.

Circuit repetitions are needed for two distinct purposes:

- To compensate for the noisiness and errors of current NISQ hardware.

- To accommodate the inherent probabilistic nature of quantum computing.

At present, shot counts need to be excessively high due to excessive noisiness. These excessive shot counts will ultimately be reduced as qubit fidelity improves.

But even as qubit fidelity improves, probabilistic algorithms will still require circuit repetitions. For them, dramatically reducing the shot count is not a reasonable option.

Ultimately, higher performance simulators will help address this issue.

The only solution or workaround for now is to either run at a higher qubit fidelity (less noise and fewer errors) so that the shot count can be dramatically reduced, or to artificially reduce the shot count and accept that the simulation will not be as accurate as it otherwise would be.

Another possibility is to allow the user to specify the exact same shot count as they would use for execution on a real quantum computer, possibly many thousands or even millions, but then have a global option to specify the percentage of the full shot count to be used during a simulation run, such as 50%, 10%, 5%, 2%, 1%, or even 0.001%. That way, the actual number of simulated circuit repetitions can be reduced while the explicitly intended repetitions are preserved, and users can easily experiment with what that percentage should be.
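A minimal sketch of that global percentage option, using a hypothetical `simulated_shots` function. The circuit keeps its intended shot count, and only the number of repetitions actually simulated is scaled. The result is rounded up so that it never drops to zero shots.

```python
import math

def simulated_shots(intended_shots: int, percent: float) -> int:
    """Shots actually simulated: a global percentage of the user's intended count,
    rounded up and never less than one shot."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    return max(1, math.ceil(intended_shots * percent / 100))

intended = 1_000_000   # the count the user would request on real hardware
for pct in (50, 10, 5, 2, 1, 0.001):
    print(f"{pct}% of {intended:,} intended shots -> {simulated_shots(intended, pct):,} simulated")
```

Keeping the intended count in the circuit means the same code can later run on real hardware, or at 100% in simulation, without being edited.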

For more on shot counts and circuit repetitions, read my paper:

- Shots and Circuit Repetitions: Developing the Expectation Value for Results from a Quantum Computer

- https://jackkrupansky.medium.com/shots-and-circuit-repetitions-developing-the-expectation-value-for-results-from-a-quantum-computer-3d1f8eed398

Resources required for simulation of deep quantum circuits

Most quantum circuits today are relatively shallow (short), but soon enough, as qubit fidelity increases, people are going to want to use much deeper quantum circuits, which will require a dramatic increase in classical resources needed to perform a simulation of a deep circuit.

Vendors and researchers in the technology of classical quantum simulators will need to place a lot of emphasis on increasing raw storage capacity, making more clever use of existing storage capacity, and coming up with new and novel approaches to managing the vast volume of intermediate quantum states generated by deeper quantum circuits.

Is there a practical limit to the depth of quantum circuits?

There are two distinct questions regarding potential limits to the depth of quantum circuits:

- Physical limits of real quantum computers. Such as coherence time. There may also be limits for the capacity of the storage used by software or classical hardware which is preparing the quantum logic gates of a quantum circuit for execution.

- Resource limits for classical quantum simulators. Limits of capacity of storage for intermediate quantum states, as well as human patience for how long a simulation might take in wall clock time.

From the perspective of this paper, the interest is in the latter, whether a quantum circuit might have so many qubits and so many gates that the number of quantum states grows so large that it either exceeds the classical storage capacity of the simulator or takes so long to run that the user is unable or unwilling to wait that long.

Even if the simulation might be theoretically possible and even ultimately practical given sufficient resources, time, and patience, practical limits on human endurance may render a particular simulation impractical.

What might the practical limit to quantum circuit depth be? Unknown. And that’s beyond the scope of this informal paper. Some possibilities:

- Dozens of gates. It’s got to be more than that. But, that could be the limit for some or many current simulators, at least for circuits with more than a handful of qubits.

- Hundreds of gates. Hopefully at least that, but the practical limit could be in that range.

- Thousands of gates. That could well be beyond the range of many simulators.

- Millions of gates. No reasonable expectation that this would be possible.

Also, it’s worth noting that there may be a separate limit for total circuit size as opposed to maximum circuit depth per se.

All such limits or costs for circuit depth and maximum circuit size should be clearly documented for each classical quantum simulator.

Will simulation of Shor’s factoring algorithm be practical?

The general implementation of Shor’s factoring algorithm for semiprime integers will require four times the number of bits in the integer to be factored plus another three ancilla qubits, or 4n + 3 qubits for an n-bit integer. So, factoring even a 10-bit integer (values from 0 to 1,023) will require 4 × 10 + 3 = 43 qubits. That’s just past the number of qubits which can practically be simulated with current technology.

But the real killer is that Shor’s factoring algorithm requires very deep circuits: roughly n × (n − 1) / 2 quantum logic gates for an n-bit integer, or 10 × 9 / 2 = 45 gates just to factor a 10-bit integer.
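The two rules of thumb above are easy to tabulate. This is a minimal sketch that simply applies the paper’s own formulas (4n + 3 qubits and n(n − 1)/2 gates), not an independent resource analysis of Shor’s algorithm. It adds the memory of a full statevector, under the assumption of 16 bytes per double-precision complex amplitude.

```python
def shor_qubits(n_bits: int) -> int:
    """Qubit count from the rule of thumb above: 4n + 3."""
    return 4 * n_bits + 3

def shor_gate_estimate(n_bits: int) -> int:
    """Gate estimate from the rule of thumb above: n(n - 1) / 2."""
    return n_bits * (n_bits - 1) // 2

for n in (6, 8, 10, 12, 16):
    q = shor_qubits(n)
    tib = 2 ** q * 16 / 2 ** 40    # full statevector at 16 bytes per amplitude, in TiB
    print(f"{n:2d}-bit integer: {q} qubits, ~{shor_gate_estimate(n)} gates, "
          f"{tib:.3g} TiB statevector")
```

The qubit count, not the gate count, is what makes the statevector explode: 43 qubits for a 10-bit integer already means 128 TiB of amplitudes.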

As noted in the preceding section, additional research is needed to support simulation of deeper circuits.

Even simulation of factoring of six, seven, or eight-bit integers may be a real challenge.

Simulation of factoring even a 16-bit integer may be beyond the capabilities of even advanced classical quantum simulators that we might reasonably expect even a few years from now.

Granted, there are derivative algorithms which use fewer qubits, but circuit depth may continue to be a problem. And even if an algorithm could manage to use only n qubits to factor an n-bit integer, and circuit depth were not a problem, simulation would still be limited to a maximum of 50 or so qubits, and factoring a 50-bit integer would not be considered very impressive, since the whole interest in Shor’s factoring algorithm is cracking 2048- and 4096-bit public encryption keys.

Still, it could be useful to at least demonstrate a simulation of Shor’s factoring algorithm for some integer size more than four to six bits. Seven or eight bits should be possible. Nine bits should also be possible. One would hope that factoring ten bits should be possible. I think it should be, but it may take improvements in simulator capacity and performance.

Factoring eleven or twelve-bit integers should be possible with greater improvements.

Simulating Shor’s algorithm beyond twelve bits may be beyond our reach, at least in the next couple of years.

The hope would be that attempting to factor a 12-bit integer will highlight either the potential or the pitfalls of scaling Shor’s factoring algorithm to more than a handful of bits.

Alternative simulation modes

Although not directly related to the topic of this paper, it is worth noting that there are a number of different modes of classical quantum simulation, mostly intended to compensate for the fact that a full simulation consumes excessive resources. This section is not intended to enumerate or describe all such alternative modes of simulation, but simply to note some of the more common ones as examples. This paper focuses on full simulation, which is expensive but comprehensive.

I would not call these distinct use cases per se, but simply a property or parameter of each use case. All (or most) access patterns would still apply regardless of the alternative simulation mode chosen.

- Full wavefunction simulator. This is full simulation: the normal, common, default case.

- Hybrid Schrödinger-Feynman simulator. From Google. Built for parallel execution on a cluster of machines. It produces amplitudes for user-specified output bitstrings. “It simulates separate disjoint sets of qubit using a full wave vector simulator, and then uses Feynman paths to sum over gates that span the sets.”

- Clifford. From IBM. Up to 5,000 qubits.

- Matrix Product State. From IBM. Up to 100 qubits.

- Extended Clifford. From IBM. Up to 63 qubits.

- General, context-aware. From IBM. Up to 32 qubits.

- Schrödinger wavefunction. From IBM. Up to 32 qubits.

- Pulse control simulation. From IBM. Aer PulseSimulator.
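To give a rough sense of why a Clifford (stabilizer) mode can handle thousands of qubits while full simulation stops in the low 40s, the sketch below compares the memory for a stabilizer tableau with that for a full state vector. It assumes an Aaronson-Gottesman style tableau of 2n rows by 2n + 1 bits, and a state vector of 2ⁿ complex amplitudes at 16 bytes each (double precision). The function names are hypothetical, and actual simulators, including IBM’s, may use different layouts.

```python
def tableau_bytes(n: int) -> int:
    """Approximate bytes for a stabilizer tableau: 2n rows of 2n + 1 bits (x, z, phase)."""
    bits = 2 * n * (2 * n + 1)
    return (bits + 7) // 8  # round up to whole bytes


def statevector_bytes(n: int) -> int:
    """Bytes for a full state vector: 2**n complex amplitudes of 16 bytes each."""
    return 16 * 2**n


if __name__ == "__main__":
    for n in (32, 50, 100, 5000):
        # 16 * 2**n == 2**(n + 4), printed as a power of two to stay readable.
        print(f"{n:5d} qubits: tableau {tableau_bytes(n):>12,} bytes, "
              f"state vector 2**{n + 4} bytes")
```

The tableau grows quadratically with the number of qubits while the state vector grows exponentially. The trade-off is that the stabilizer method only supports Clifford gates.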

Pulse control simulation

I haven’t gotten deep enough into pulse control or simulation of pulse control to understand how it may fit in with the major use cases and access patterns for classical quantum simulators.

It may need to be a separate major use case, or maybe simply another access pattern for the various major use cases.

Or, it may simply be an alternative simulation mode as discussed in the preceding section. This is my expectation.

Major caveat: Simulation is limited to roughly 50 qubits

At present, 50 qubits is believed to be a relatively hard upper bound on how many qubits can be fully simulated using current classical computing technology, since a full state vector for n qubits holds 2ⁿ complex amplitudes. Granted, this limit could grow as classical computing technology evolves in the coming years, but that’s where we are today.

And that’s the theoretical hard upper bound; current simulation technology is significantly more limited:

- Google is claiming 40 qubits as their simulation upper bound, or even only 38 qubits using their cloud computing engine. https://github.com/quantumlib/qsim

- Intel is claiming 42 qubits as their upper bound. https://arxiv.org/abs/2001.10554

- IBM is advertising 32 qubits as their upper bound for full simulation. https://www.ibm.com/quantum-computing/simulator/

- AtoS Quantum Learning Machine is advertising simulation up to 41 qubits. https://atos.net/en/solutions/quantum-learning-machine

So 32 to 42 qubits is the current state of the art for the largest algorithm that can practically be simulated on a classical quantum simulator.
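The main reason these limits are so hard to push is memory: a full state vector for n qubits holds 2ⁿ complex amplitudes. A minimal sketch of that arithmetic, assuming 16 bytes per amplitude (double-precision complex) and ignoring any working storage a real simulator needs; the helper names are hypothetical:

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector of n qubits (2**n complex amplitudes)."""
    return bytes_per_amplitude * 2**n_qubits


def human(nbytes: int) -> str:
    """Format a byte count using binary units (KiB, MiB, GiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:,.0f} {unit}"
        value /= 1024


if __name__ == "__main__":
    # Qubit counts quoted above, plus the roughly 50-qubit ceiling.
    for n in (32, 38, 40, 41, 42, 50):
        print(f"{n} qubits: {human(statevector_bytes(n))}")
```

With these assumptions, 32 qubits needs 64 GiB, 42 qubits needs 64 TiB, and 50 qubits needs 16 PiB. Single-precision simulators halve these figures (`bytes_per_amplitude=8`).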

Personally, I expect these numbers to rise as classical computing technology continues to evolve, as simulators become more clever and more optimized, and as classical quantum simulators simply get more attention. This is especially likely as quantum algorithms become significantly more complex and harder to debug and test on current real quantum computers, and as people seek to develop algorithms for the quantum computers expected to become available in two to five or even seven years.

Advanced debugging capabilities become much more essential as quantum algorithms grow more complex and as more non-elite algorithm designers and application developers join the field. Such debugging can only be accomplished via simulation, since intermediate quantum states are not observable on real quantum computers.

Need for scalable algorithms

So, what happens when algorithms begin using more than 50 (or 40) qubits?

My answer is that people need to focus on designing automatically scalable algorithms, which can be tested with 8, 16, 24, 32, 40, and possibly even 48 qubits on classical quantum simulators, so that their performance and accuracy can be more safely extrapolated to higher qubit counts.
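As a toy illustration of what “automatically scalable” means, the sketch below generates a GHZ-state circuit for any qubit count n and checks its ideal output with a tiny pure-Python state-vector simulator. The GHZ circuit, the gate-tuple format, and the function names are all hypothetical choices for illustration; real tests at 24 to 48 qubits would need a production simulator rather than this one.

```python
import math


def ghz_circuit(n: int) -> list:
    """Build a GHZ circuit for n qubits: H on qubit 0, then a chain of CNOTs."""
    gates = [("h", 0)]
    gates += [("cx", q, q + 1) for q in range(n - 1)]
    return gates


def simulate(n: int, gates: list) -> list:
    """Apply gates to |0...0> and return the final amplitudes (qubit q is bit q)."""
    state = [0j] * (2**n)
    state[0] = 1 + 0j
    for gate in gates:
        if gate[0] == "h":
            bit = 1 << gate[1]
            for i in range(2**n):
                if not i & bit:  # visit each (i, i | bit) pair once
                    a, b = state[i], state[i | bit]
                    state[i] = (a + b) / math.sqrt(2)
                    state[i | bit] = (a - b) / math.sqrt(2)
        elif gate[0] == "cx":
            control, target = 1 << gate[1], 1 << gate[2]
            for i in range(2**n):
                if i & control and not i & target:
                    state[i], state[i | target] = state[i | target], state[i]
    return state


def check_ghz(n: int) -> bool:
    """Ideal GHZ output: probability 1/2 on all-zeros and 1/2 on all-ones."""
    probs = [abs(a) ** 2 for a in simulate(n, ghz_circuit(n))]
    expected = {0: 0.5, 2**n - 1: 0.5}
    return all(math.isclose(p, expected.get(i, 0.0), abs_tol=1e-9)
               for i, p in enumerate(probs))


if __name__ == "__main__":
    # The same generator is exercised at several sizes; extrapolation rests on
    # the assumption that behavior at small n predicts behavior at larger n.
    for n in (2, 4, 8, 12):
        print(n, check_ghz(n))
```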

Need for automated algorithm analysis tools

Also, we need automated algorithm analysis tools to detect coding patterns that may not be scalable, such as dependence on fine granularity of phase and probability amplitude, so that scaling problems can be detected automatically using 24 to 40 qubits before 50 or more qubits are even attempted.

This would likely be an access pattern rather than a separate use case since it would apply to all use cases. And it would likely depend on the noise and error model for the use case.
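A very rough sketch of one such check: scan a circuit’s rotation angles and flag any that fall below a granularity threshold. The threshold value, the function names, and the use of the quantum Fourier transform as the example circuit are all assumptions for illustration; a real analysis tool would also need to consider probability amplitudes and the noise model, as noted above.

```python
import math


def qft_rotation_angles(n: int) -> list:
    """Controlled-phase angles (radians) in a textbook n-qubit QFT: 2*pi / 2**k."""
    return [2 * math.pi / 2**k
            for target in range(n)
            for k in range(2, n - target + 1)]


def flag_fine_angles(angles: list, threshold: float) -> list:
    """Return the nonzero angles smaller in magnitude than `threshold`."""
    return [a for a in angles if 0 < abs(a) < threshold]


if __name__ == "__main__":
    THRESHOLD = 1e-4  # hypothetical granularity limit, in radians
    for n in (8, 16, 24, 32, 40):
        angles = qft_rotation_angles(n)
        flagged = flag_fine_angles(angles, THRESHOLD)
        print(f"n={n}: smallest angle {min(angles):.2e} rad, "
              f"{len(flagged)} of {len(angles)} rotations below threshold")
```

The point is the trend: the smallest rotation in an n-qubit QFT is 2π/2ⁿ, so a check like this can reveal, at 24 to 40 qubits, a dependence on phase precision that would only get worse at 50 or more.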

Need to push for simulation of 45 to 55 qubits

As previously noted, current classical quantum simulators support simulation of 32 to 42 qubits at most. That may be fine today, but soon enough we will see quantum circuits in the range of 45 to 50 or more qubits, so we need a more determined push to expand both the capacity and performance of classical quantum simulators.

Simply adding more hardware will likely achieve only relatively limited gains, since each additional qubit doubles the memory required for full simulation.

What is needed are a variety of advanced and clever uses of existing hardware, or new and novel hardware that is in closer alignment with the needs of simulation of quantum computing.

Much more research is needed.

Would simulation of 60 to 65 qubits be useful if it could be achieved?

Superficially, the more qubits you can simulate, the better, but it is also a question of diminishing returns: if we get to a place where algorithms are in general scalable, then maybe we won’t need to simulate more than about 40 to 48 qubits. Maybe is the operative word. Nobody knows for sure.

My working assumption is that the leap from 40 or 50 qubits to 60 or 65 qubits, although extremely expensive, could be very rewarding.

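How expensive? Each additional qubit doubles the size of the state vector, so ten more qubits means a factor of 2¹⁰ = 1,024, roughly a thousandfold increase in resources required. Another ten qubits would be another factor of about one thousand, or a total factor of about one million (2²⁰ = 1,048,576).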
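The same arithmetic as a minimal sketch: under full state-vector simulation each added qubit doubles the resources, so the growth factor between two qubit counts is 2 raised to their difference. The function name is hypothetical.

```python
def growth_factor(from_qubits: int, to_qubits: int) -> int:
    """Resource multiplier for full state-vector simulation: 2 ** (to - from)."""
    return 2 ** (to_qubits - from_qubits)


if __name__ == "__main__":
    for start, end in ((40, 50), (50, 60), (40, 60), (50, 65)):
        print(f"{start} -> {end} qubits: x{growth_factor(start, end):,}")
```

Going from 50 to 65 qubits, the upper end of the range discussed here, is a factor of 32,768.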

Sure, this would be expensive. Think of an entire large data center just for a single simulator. Think of an entire football field filled with countless rows of racks of classical processors.

But if it enables us to gather insight which we could not gather otherwise, it could be worth it.

My real hope is that if we can indeed get into a mode of inherently scalable algorithms, plus analytic tools to confirm scalability of logic, then maybe 40 to 50 qubits could be sufficient to prove the scalability of an algorithm, and then a simulator for 40 to 50 qubits would be all that we need.

But that’s simply my hope, not a slam-dunk reality, so I’d like to keep the door open for a massive-scale simulator for 60 to 65 qubits.

Potential to estimate Quantum Volume without the need to run on a real quantum computer

Normally, IBM’s Quantum Volume metric of performance and capacity is calculated by running random quantum circuits on a real quantum computer and comparing the results to an ideal simulation of the same circuits. But the runs on the real quantum computer could themselves be replaced by simulation runs, provided that a noise model can be developed which accurately models the real qubits.

This would not necessarily be a big benefit for existing quantum computers, but it would be a big help in estimating the performance and capacity of proposed new quantum computers.

But even that is predicated on having reasonably accurate estimates of qubit fidelity for proposed quantum computers. Still, that is a very reasonable goal to strive towards.

Note that this approach only works for a QV of at most about 2⁵⁰ (circuits of at most about 50 qubits), since simulation is limited to roughly 50 qubits. But that’s not an extra constraint, since the ideal simulation of the random quantum circuits, for comparison with the actual results, is already required by the traditional method of running the circuits on a real quantum computer. Besides, as per the original IBM paper, Quantum Volume is only defined for up to about 50 qubits because of this simulation requirement.
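The heart of the comparison is IBM’s heavy-output test: a bitstring is “heavy” if its ideal probability exceeds the median of the ideal output distribution, and a width passes if the measured heavy-output probability is above 2/3. The minimal sketch below computes this for a single circuit. The function names and toy numbers are hypothetical, and the real protocol averages over many random circuits and requires a statistical confidence bound, which is omitted here. The measured counts could come from a real device or, as proposed above, from a noisy simulator.

```python
import statistics


def heavy_outputs(ideal_probs: dict) -> set:
    """Bitstrings whose ideal probability is above the median of the distribution."""
    median = statistics.median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > median}


def heavy_output_probability(ideal_probs: dict, counts: dict) -> float:
    """Fraction of measured shots that landed on heavy outputs."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots


def quantum_volume(passing_widths: list) -> int:
    """QV = 2**n for the largest width n whose width-n, depth-n circuits pass."""
    return 2 ** max(passing_widths)


if __name__ == "__main__":
    # Toy 2-qubit example: ideal probabilities from a perfect simulation,
    # counts from a (real or noisily simulated) run of the same circuit.
    ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
    counts = {"00": 40, "01": 30, "10": 20, "11": 10}
    hop = heavy_output_probability(ideal, counts)
    print(f"heavy-output probability {hop:.2f}, passes: {hop > 2 / 3}")
```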

Simulation is an evolving, moving target, subject to change — this is simply an initial snapshot

This paper is intended to be forward-looking, but quantum computing technology, hardware, architectures, and algorithms are constantly evolving so that any number of aspects of this paper might become invalid, outdated, or obsolete at any time. This paper is simply an initial snapshot in time — early August 2021. I fully expect it to need to be updated within a few years as the technology evolves — if not sooner.

Summary and conclusions

- Simulation needs to closely match noise and error profiles of both existing real quantum computers and proposed quantum computers.

- Need to support debugging of quantum algorithms. Especially as they become more complex and not so easy to simulate in your own head. And debugging cannot be performed on a real quantum computer since intermediate quantum states are not observable.

- Need to fully observe all details of quantum state during quantum circuit execution. Including measured qubits just before they are measured.

- Need to support full testing of quantum algorithms.

- Need to support analysis of quantum circuit execution which is not possible when executing the circuit on a real quantum computer.

- Need to focus on where the “puck” will be in 2–4 years rather than rely on current hardware which will be hopelessly obsolete by then if not much sooner.

- Need to focus algorithm designers on hardware of the future rather than be distracted by current hardware which is incapable of solving real, production-scale practical problems or delivering significant quantum advantage anyway.

- Significant further research is needed to push the envelope for maximizing performance and minimizing resources needed to simulate quantum circuits since 38–50 qubits (or even less) is approximately the limit for simulation today.

- Simulation is an evolving, moving target, subject to change — this is simply an initial snapshot.

For more of my writing: List of My Papers on Quantum Computing.