Quantum Computing Advances We Need to See Over the Coming 12 to 18 to 24 Months to Stay on Track

Current quantum computers are simply not up to the task of supporting any production-scale practical real-world applications. This informal paper identifies and discusses the various critical areas in which improvements are desperately needed over the next two years for quantum computers to become up to that task. That may still not be enough for widespread quantum applications, but it is a necessary intermediate milestone. We may still not have achieved The ENIAC Moment for quantum computing in two years, but the advances discussed in this paper should leave us positioned to be on track to have that major milestone in reach, at least once all of the listed advances have been achieved, whether that occurs in two, three, or five years.

Topics discussed in this paper:

- In a nutshell

- On track for what?

- Preparing for The ENIAC Moment

- Will we have achieved The ENIAC Moment in two years?

- How close might we be to achieving The ENIAC Moment in two years?

- I suspect that we’ll see The ENIAC Moment in roughly three years

- To be clear, The ENIAC Moment is neither assured nor required nor assumed in two years

- The ENIAC Moment is a demonstration, not necessarily ready for production deployment

- Hardware advances needed

- Yes, we need more qubits, but…

- How many qubits do we need? 160 to 256 for some, 64 to 80 for most, 48 as a minimum

- Trapped-ion quantum computers need more qubits

- Neutral-atom quantum computers need to be delivered

- Algorithms need to be automatically scalable

- Rich set of sample quantum algorithms

- The seven main quantum application categories

- Rich set of sample quantum applications

- Take the guesswork out of modeling shot count (circuit repetitions)

- Higher standards for documenting algorithms

- Near-perfect qubits are required

- Perfect logical qubits and quantum error correction (QEC) are not needed in this timeframe

- Architectural improvements needed to enhance transmon qubit connectivity

- Transmon qubit proponents need to announce a connectivity strategy and roadmap

- RIP: Ode to SWAP networks

- Unclear how much improvement in coherence time is needed in this time period

- Quantum advantage requires dramatic improvement

- Still in pre-commercialization

- Not yet time for commercialization

- Even in two years we may still be deep in pre-commercialization

- Avoid premature commercialization

- Ongoing research

- Need a replacement for Quantum Volume for higher qubit counts

- Need application category-specific benchmarks

- We need some real application using 100 or even 80 qubits

- Some potential for algorithms up to 160 qubits

- We’re still in stage 0 of the path to commercialization and widespread adoption of quantum computing

- A critical mass of these advances is needed

- My Christmas and New Year wish list

- Is a Quantum Winter likely in two years? No, but…

- Critical technical gating factors which could presage a Quantum Winter in two years

- A roadmap for the next two years?

- Where might we be in one year?

- RIP: Ode to NISQ

- On track to… end of the NISQ era and the advent of the Post-NISQ era

- RIP: Noisy qubits

- Details for other advances

- And what advances are needed beyond two years?

- My original proposal for this topic

- Summary and conclusions

In a nutshell

General comments:

- Current technology is too limited. Current quantum computers are simply not up to the task of supporting any production-scale practical real-world applications.

- The ENIAC Moment is not in sight. No hardware, algorithm, or application capable of The ENIAC Moment for quantum computing at this time. Coin flip whether it will be achievable within two years.

- Still deep in the pre-commercialization stage. Overall, we’re still deep in the pre-commercialization stage of quantum computing. Nowhere close to being ready for commercialization. Much research is needed.

- We’re still in stage 0 of the path to commercialization of quantum computing. Achievement of the technical goals outlined in this paper will leave us poised to begin stage 1, the final path to The ENIAC Moment. The work outlined here lays the foundation for stage 1 to follow.

- A critical mass of these advances is needed. But exactly what that critical mass is and exactly what path will be needed to get there will be a discovery process rather than some detailed plan known in advance.

- Which advance is the most important, critical, and urgent — the top priority? There are so many vying for achieving critical mass.

- Achievement of these technical goals will leave the field poised to achieve The ENIAC Moment.

- Achievement of these technical goals will mark the end of the NISQ era.

- Achievement of these technical goals will mark the advent of the Post-NISQ era.

- No Quantum Winter. I’m not predicting a Quantum Winter in two years and think it’s unlikely, but it’s not out of the question if a substantial fraction (critical mass) of the advances from this paper are not achieved.

- Beyond two years? A combination of fresh research, extrapolation of the next two years, and topics I’ve written about in my previous papers. But this paper focuses on the next two years.

The major areas needing advances:

- Hardware. And firmware.

- Support software. And tools.

- Algorithms. And algorithm support.

- Applications. And application support.

- Research in general. In every area.

Hardware advances needed:

- Qubit fidelity.

- Qubit connectivity.

- Qubit coherence time and circuit depth.

- Qubit phase granularity.

- Qubit measurement.

- Quantum advantage.

- Near-perfect qubits are required.

- Trapped-ion quantum computers need more qubits.

- Neutral-atom quantum computers need to support 32 to 48 qubits.

- Support quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum. Most of the hardware advances are needed to support QFT and QPE.

Support software advances needed:

- A replacement for the Quantum Volume metric is needed. For circuits using more than 32–40 qubits and certainly for 50 qubits and beyond.

- Need application category-specific benchmarks. Generic benchmarks aren’t very helpful to real users.

- 44-qubit classical quantum simulators for reasonably deep quantum circuits.

Algorithm advances needed:

- Quantum algorithms using 32 to 40 qubits are needed. Need to be very common, typical.

- Some real algorithm using 100 or even 80 qubits. Something that really pushes the limits.

- Some potential for algorithms up to 160 qubits.

- Reasonably nontrivial use of quantum Fourier transform (QFT) and quantum phase estimation (QPE).

- Algorithms need to be automatically scalable. Generative coding and automated analysis to detect scaling problems.

- Higher-level algorithmic building blocks. At least a decent start, although much research is needed.

- Rich set of sample quantum algorithms. A better starting point for new users.

- Modeling circuit repetitions. Take the guesswork out of shot count (circuit repetitions).

- Higher standards for documenting algorithms. Especially discussion of scaling and quantum advantage.

Application advances needed:

- Some real applications using 100 or even 80 qubits. Something that really pushes the limits.

- At least a few applications which are candidates or at least decent stepping stones towards The ENIAC Moment.

- Applications using quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum.

- At least a few stabs at application frameworks.

- Rich set of sample quantum applications. A better starting point for new users.

Research in general needed:

- Higher-level programming models.

- Much higher-level and richer algorithmic building blocks.

- Rich libraries.

- Application frameworks.

- Foundations for configurable packaged quantum solutions.

- Quantum-native programming languages.

- More innovative hardware architectures.

- Quantum error correction (QEC).

On track for what?

The goal for the next two years is to evolve quantum computing closer to being able to support production-scale practical real-world quantum applications. How close we can get in that timeframe is a matter of debate — we’ll just have to see what reality delivers on the promises.

Whether we are able to achieve The ENIAC Moment in two years is unclear at this juncture. The goal is to have laid the groundwork and be poised for such an achievement, even if it does take another year or two or three. The point is to be on track to achieving that larger objective.

Until we reach that goal, all we will have will be:

- Experiments.

- Demonstrations.

- Prototypes.

- Proofs of concept.

- Toy algorithms.

- Toy applications.

To be sure, we will have a variety of tools and some specialized niche applications and services, such as random number generation, but not so much in the sense of full-blown production applications.

Preparing for The ENIAC Moment

Ultimately the goal for the next two years is to lay all the necessary technical groundwork needed to achieve The ENIAC Moment around or shortly after the next two years. This would be the first moment when a real quantum computer is finally able to support a production-scale practical real-world quantum application. Before then, all we will have are experiments, demonstrations, prototypes, and proofs of concept. Not much more than toy algorithms and toy applications.

The focus of this paper is the long list of technical advances required before The ENIAC Moment is even possible.

For more on The ENIAC Moment, see my paper:

- When Will Quantum Computing Have Its ENIAC Moment?

- https://jackkrupansky.medium.com/when-will-quantum-computing-have-its-eniac-moment-8769c6ba450d#b99f

Will we have achieved The ENIAC Moment in two years?

To be clear, even if we are on track to achieving The ENIAC Moment, it may still not quite be within reach in two years. It’s unclear — maybe we will have achieved The ENIAC Moment, or maybe not.

How close might we be to achieving The ENIAC Moment in two years?

It’s so hard to say how close we might be to achieving The ENIAC Moment in two years. The possibilities:

- Indeed we’ve achieved it.

- A mere matter of a few months away.

- Another six months.

- Another year.

- Another two years.

- Another three years?

Might it take more than another three years, a total of five years from today? Well, predicting the future is always fraught with peril, but I would say that if we haven’t achieved The ENIAC Moment five years from now then we would definitely be facing or deep within the prospect of a significant Quantum Winter, with growing disenchantment over a new technology that is having great difficulty delivering on its promises.

I suspect that we’ll see The ENIAC Moment in roughly three years

I’ll place a stake in the ground and suggest that we can expect The ENIAC Moment two to four years from now. Call it three years to be more specific.

To be clear, The ENIAC Moment is neither assured nor required nor assumed in two years

It sure would be great to have The ENIAC Moment in two years.

It sure would be great to be close to being on the verge of The ENIAC Moment in two years.

But I’d settle for making the kind of progress outlined in this paper — substantial progress even if still more is needed.

The ENIAC Moment is a demonstration, not necessarily ready for production deployment

The ENIAC Moment is defined as achieving production-scale, but that may still be simply a demonstration of production-scale, not necessarily actual production deployment or even ready for production deployment.

It might take another year or two or even three before the application capability demonstrated by The ENIAC Moment can be formalized and passed through a rigorous product engineering process to produce a true product which is actually ready for deployment in an operational setting. This would be the transition from pre-commercialization to commercialization.

The ENIAC Moment may still be in the lab, still a mere laboratory curiosity, not quite ready for real-world deployment.

For more on laboratory curiosities, see my paper:

- When Will Quantum Computing Advance Beyond Mere Laboratory Curiosity?

- https://jackkrupansky.medium.com/when-will-quantum-computing-advance-beyond-mere-laboratory-curiosity-2e1b88329136

And for more on commercialization, see my paper:

- Model for Pre-commercialization Required Before Quantum Computing Is Ready for Commercialization

- https://jackkrupansky.medium.com/model-for-pre-commercialization-required-before-quantum-computing-is-ready-for-commercialization-689651c7398a

For the larger context of getting to commercialization, see my paper:

- Prescription for Advancing Quantum Computing Much More Rapidly: Hold Off on Commercialization but Double Down on Pre-commercialization

- https://jackkrupansky.medium.com/prescription-for-advancing-quantum-computing-much-more-rapidly-hold-off-on-commercialization-but-28d1128166a

Hardware advances needed

- Qubit fidelity. Requires dramatic improvement. Gate execution errors too high. See near-perfect qubits.

- Qubit connectivity. Requires dramatic improvement. Well beyond nearest-neighbor. May require significant architectural improvements, at least for transmon qubits.

- Qubit coherence time and circuit depth. Requires dramatic improvement.

- Qubit phase granularity. Requires dramatic improvement. Need much finer granularity of phase — and probability amplitude. In order to support large quantum Fourier transforms (QFT) and quantum phase estimation (QPE).

- Qubit measurement. Requires dramatic improvement. Error rate is too high.

- Quantum advantage. Requires dramatic improvement. Need to hit minimal quantum advantage first — 1,000X a classical solution, and then evolve towards significant quantum advantage — 1,000,000X a classical solution. True, dramatic quantum advantage of one quadrillion X is still off over the horizon.

- Near-perfect qubits are required. We need to move beyond noisy NISQ qubits ASAP. 3.5 to four nines of qubit fidelity are needed, maybe three nines for some applications.

- But perfect logical qubits enabled by full quantum error correction (QEC) are not needed in this timeframe. They should remain a focus for research, theory, prototyping, and experimentation, but not serious use for realistic applications in this timeframe.

- More qubits are not the top priority. We already have 65 and 127-qubit machines, with a couple of 100-qubit machines due imminently (neutral atoms), but we can’t use all of those qubits effectively for the reasons listed above (qubit fidelity, connectivity, phase granularity, etc.).

- 160 to 256 qubits would be useful for some applications. Not needed for most applications, but some could exploit them. Or at least 110 to 125 qubits for more apps.

- 64 to 80 qubits would be a sweet spot for many applications. Plenty of opportunity for significant or even dramatic quantum advantage.

- 48 qubits as the standard minimum for all applications. Achieving any significant quantum advantage on fewer qubits is not practical.

- Trapped-ion quantum computers need more qubits. 32 to 48 qubits in two years, if not 64 to 72 or even 80.

- Neutral-atom quantum computers need to support 32 to 48 qubits. 100 qubits have been promised, but something needs to be delivered.

- Support quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum. Most of the hardware advances are needed to support QFT and QPE.

Yes, we need more qubits, but…

There’s absolutely no question that we need more qubits. That’s a slam dunk. But…

- We don’t have algorithms ready to use those qubits. Or even the qubits that we already have. No 27-qubit, 65-qubit, or 127-qubit algorithms.

- Mediocre qubit fidelity prevents full exploitation of more qubits. Or even the qubits that we already have.

- Mediocre qubit connectivity prevents exploitation of more qubits. Or even the qubits that we already have.

- Limited coherence time and limited circuit depth prevents exploitation of more qubits. Or even the qubits that we already have.

But other than that, more qubits would be great!

A more positive way to put it is:

- We definitely need more qubits.

- But not as the top priority. Or even the second or third priority.

- Quantum error correction (QEC) will indeed require many more qubits, but that’s a longer-term priority. Three to five years, not one to two years.

As an example of the conundrum we currently face, the brand new, just announced IBM Eagle with 127 qubits is hardly better than the 27-qubit Falcon. Maybe it has some utility for some specialized algorithms (none known yet!), but not for most algorithms, at present.

See my comments on IBM’s 127-qubit Eagle quantum processor:

- Preliminary Thoughts on the IBM 127-qubit Eagle Quantum Computer

- https://jackkrupansky.medium.com/preliminary-thoughts-on-the-ibm-127-qubit-eagle-quantum-computer-e3b1ea7695a3

How many qubits do we need? 160 to 256 for some, 64 to 80 for most, 48 as a minimum

I surmise that 160 to 256 qubits would be more than sufficient as a high-end goal and expectation for the two-year forecast period. Or at least 110 to 125 qubits for some more advanced applications.

64 to 80 qubits are probably plenty for many applications, even to achieve some degree of quantum advantage, two years from now.

My personal expectation is that 48 qubits should be the common minimum two years from now. That should be sufficient to achieve at least some minimal degree of quantum advantage.

48 qubits also focuses attention on the fact that anybody developing algorithms or applications for fewer than 48 qubits is not really focused on achieving significant quantum advantage, which should be the whole goal and purpose for quantum computing.

Trapped-ion quantum computers need more qubits

Trapped-ion quantum computers have a distinct advantage over current superconducting transmon qubit quantum computers since they have full any-to-any connectivity, but current machines have too few qubits. Support for at least 32 to 48 qubits is needed in two years, if not 64 to 72 or even 80 qubits.

A trapped-ion quantum computer with only 16 to 20 or 24 qubits will be considered irrelevant two years from now. Honeywell/Quantinuum recently announced achieving a quantum volume of 2048, which indicates 11 qubits (2048 = 2^11). Previously they promised a tenfold improvement in quantum volume each year, which implies adding an average of 3.32 qubits per year (the logarithm base 2 of 10 is 3.32, since 10 = 2^3.32). That implies another 6.64 qubits in two years, for a total of roughly 18 qubits, well short of the 32-qubit minimum posited by this paper.
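The arithmetic above can be checked with a few lines of Python. This is only a sketch of the paper's own reasoning, which reads a quantum volume of 2^n as "n qubits"; the function names are made up for illustration.

```python
import math


def qubits_from_quantum_volume(quantum_volume: int) -> float:
    """Read a quantum volume of 2**n as n qubits, as the text does."""
    return math.log2(quantum_volume)


def projected_qubits(quantum_volume: int, growth_per_year: float, years: float) -> float:
    """Project the implied qubit count if quantum volume grows by a fixed factor each year."""
    return math.log2(quantum_volume) + years * math.log2(growth_per_year)


current = qubits_from_quantum_volume(2048)
added_per_year = math.log2(10)
in_two_years = projected_qubits(2048, growth_per_year=10, years=2)

print(f"now: {current:.0f} qubits")
print(f"added per year: {added_per_year:.2f} qubits")
print(f"in two years: {in_two_years:.2f} qubits")
```

Rounding the two-year figure up gives the 18 qubits quoted above.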

IonQ Computing may be in better shape, but it’s hard to tell for sure. There is insufficient transparency and lack of clarity of publicly available technical specifications and documentation.

In any case, progress is clearly needed on the qubit count front for trapped-ion quantum computers.

The flip side is that while transmon qubit machines have plenty of qubits, their fidelity is too low relative to what is common for trapped-ion qubits.

Neutral-atom quantum computers need to be delivered

Neutral-atom quantum computers show great promise, but they need to be delivered, not just promised.

100 qubits have been promised, but something needs to be delivered.

All that is really needed in the two-year timeframe is support for 32 to 48 qubits. Sure, 64 to 72 or even 80 qubits (or the promised 100 qubits) would be nice, but a year from now just 32 to 48 qubits with high qubit fidelity, long coherence time, and full any-to-any connectivity would satisfy most near-term application needs.

The main problem with neutral-atom machines is that there is too little information available to judge what capabilities they actually deliver, or at least what they will deliver when they do become available.

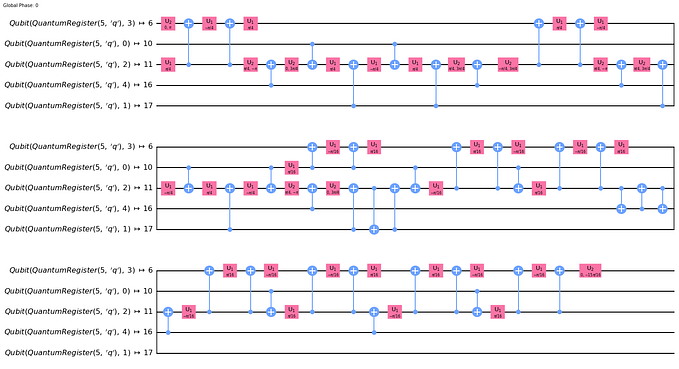

Algorithms need to be automatically scalable

It is urgently important over the next two years that we get to a place where all new algorithms are automatically scalable.

We need to be able to design and test algorithms using modest-sized input and be able to scale those algorithms to much larger input without any need to manually and tediously hand-craft the quantum circuits.

Generative coding is needed. Classical code will generate quantum circuits based on the actual input data.
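As a rough illustration of generative coding, here is a minimal sketch in which classical Python code generates the gate list for a quantum Fourier transform of any requested width. The tuple-based gate format and the name `generate_qft` are assumptions for this example, not any particular vendor's API, and qubit ordering conventions vary between toolkits.

```python
import math
from typing import List, Tuple


def generate_qft(num_qubits: int) -> List[Tuple]:
    """Generate a textbook QFT as a list of gate tuples.

    Gate formats (hypothetical, for illustration only):
      ("h", qubit)
      ("cp", control, target, angle_in_radians)
      ("swap", qubit_a, qubit_b)
    """
    gates: List[Tuple] = []
    for target in range(num_qubits):
        gates.append(("h", target))
        # Controlled phase rotations shrink by half for each step of distance.
        for control in range(target + 1, num_qubits):
            angle = math.pi / (2 ** (control - target))
            gates.append(("cp", control, target, angle))
    # Reverse the qubit order at the end.
    for i in range(num_qubits // 2):
        gates.append(("swap", i, num_qubits - 1 - i))
    return gates


for width in (4, 20, 40):
    circuit = generate_qft(width)
    smallest = min((g[3] for g in circuit if g[0] == "cp"), default=None)
    print(f"{width} qubits: {len(circuit)} gates, smallest rotation {smallest} rad")
```

The same generator serves 4 qubits or 40 without any hand-crafting. It also makes a scaling issue visible: the smallest rotation angle halves with every added qubit, which is where fine phase granularity becomes a concern.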

And since we can’t simulate larger quantum circuits, for more than roughly 50 qubits, we need to rely on automated analysis to detect scaling problems, such as specific coding patterns which won’t reliably scale to much larger qubit counts and circuit sizes.

And I highly recommend testing via simulation for qubit counts up to 40 qubits or at least 32 qubits, but certainly in the 20 to 30-qubit range.
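To give a sense of why simulation tops out where it does, the sketch below estimates the memory needed to hold a full state vector. It assumes a plain state-vector simulator storing one double-precision complex amplitude (16 bytes) per basis state; other simulation techniques have different costs, so treat the numbers as a rough guide only.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for 2**n complex amplitudes (assumes 16 bytes per amplitude)."""
    return (2 ** num_qubits) * bytes_per_amplitude


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


for n in (20, 30, 32, 40, 44, 50):
    print(f"{n} qubits: {human_readable(statevector_bytes(n))}")
```

Each added qubit doubles the memory, which is why the 20 to 30-qubit range is comfortable, 32 to 40 qubits takes serious hardware, and roughly 50 qubits is out of reach for this style of simulation.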

For more on scaling of algorithms, see my paper:

- Staged Model for Scaling of Quantum Algorithms

- https://jackkrupansky.medium.com/staged-model-for-scaling-of-quantum-algorithms-d1070056907f

Rich set of sample quantum algorithms

A better starting point of sample quantum algorithms is needed for new users.

At least two or three for each of the seven main quantum application categories.

At least one which is very simple but still illustrates the needs of the application category.

At least one of which has some degree of complexity and still illustrates the needs of the application category.

The seven main quantum application categories

For reference, the seven main quantum application categories are:

- Simulating physics.

- Simulating chemistry.

- Material design.

- Drug design.

- Business process optimization.

- Finance. Including portfolio optimization.

- Machine learning.

And there will also be niche applications which don’t fit into any of those seven general quantum application categories. Such as random number generation.

For more on quantum applications and categories, see my paper:

- What Applications Are Suitable for a Quantum Computer?

- https://jackkrupansky.medium.com/what-applications-are-suitable-for-a-quantum-computer-5584ef62c38a

Rich set of sample quantum applications

A better starting point of sample quantum applications is needed for new users.

At least two or three for each of the seven main quantum application categories.

At least one which is very simple but still illustrates the needs of the application category.

At least one of which has some degree of complexity and still illustrates the needs of the application category.

Take the guesswork out of modeling shot count (circuit repetitions)

Due to the combination of errors and the inherent probabilistic nature of quantum computing, it is necessary to execute any given quantum circuit some number of times to develop a statistical expectation value for the results of a quantum computation. This is called the shot count or circuit repetitions. So, how many shots are needed? It’s anybody’s guess. Really. Seriously. It’s pure guesswork. That needs to change.

At a minimum, people need rules of thumb or approximate formulas to deduce the shot count for a given quantum circuit.

Ideally, it should all be automated based on the quantum algorithm, the quantum circuit, the input data and parameters, the application, and some sense of the application requirements.

Research is required.

And in the short-term, maybe some clever heuristics can address the issue as an acceptable approximation, short of the ideal, but… good enough.
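One candidate rule of thumb, offered only as a starting point, is the standard binomial sampling estimate from classical statistics. The sketch below assumes an ideal, noise-free machine and asks how many shots are needed to estimate a single outcome probability to within a chosen margin at a chosen confidence level. It does not model hardware errors, so real shot counts would likely need to be higher. The function name and parameters are made up for illustration.

```python
import math
from statistics import NormalDist


def shots_for_precision(margin: float,
                        confidence: float = 0.95,
                        expected_probability: float = 0.5) -> int:
    """Estimate shots needed to pin down one outcome probability.

    Uses the normal approximation to the binomial distribution:
        shots = z**2 * p * (1 - p) / margin**2
    p = 0.5 is the worst case. Hardware noise is NOT modeled.
    """
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    variance = expected_probability * (1 - expected_probability)
    return math.ceil(z * z * variance / (margin * margin))


for margin in (0.1, 0.05, 0.01):
    print(f"margin {margin}: about {shots_for_precision(margin)} shots")
```

The key takeaway is the inverse-square relationship: cutting the acceptable margin by a factor of ten raises the shot count by roughly a factor of one hundred.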

For more on the issues with shot counts, see my paper:

- Shots and Circuit Repetitions: Developing the Expectation Value for Results from a Quantum Computer

- https://jackkrupansky.medium.com/shots-and-circuit-repetitions-developing-the-expectation-value-for-results-from-a-quantum-computer-3d1f8eed398

Higher standards for documenting algorithms

We need higher standards for documenting algorithms, especially for academic publication. Including:

- Discussion of scaling.

- Discussion of calculating shot count (circuit repetitions). A more analytic approach rather than guesswork and trial and error. Including estimation beyond the input size tested by the paper itself.

- Discussion of quantum advantage.

- Discussion of specific hardware requirements to achieve acceptable results for problems which cannot be adequately solved today.

- Place all code, test data, and results in a GitHub repository. In a standardized form and organization.

- Commenting conventions for code. Both quantum and classical.

Near-perfect qubits are required

Noisy NISQ qubits suck — they make it very difficult to construct sophisticated and complex quantum circuits. They consume too much of our attention and energy. We desperately need near-perfect qubits. They don’t need to be absolutely perfect, just close enough that most algorithms won’t usually notice any errors. Perfect logical qubits enabled by quantum error correction (QEC) will not be needed during the next two years — as long as near-perfect qubits are available.

One error in 10,000 operations (an error rate of 0.01%, a reliability of 99.99%, or four nines of qubit fidelity) should be good enough for most algorithms and most applications.

That’s not really that close to perfect, but is close enough for most use cases.

I’m not suggesting that four nines should be the absolute standard, but it is a reasonable target for the next two years.

3.5 nines may be fine for many applications.

And three nines may be sufficient for a fair number of applications, at least in the near-term.

But anything below two nines (99%) or even 2.5 nines (99.5%) is likely to remain problematic as an overall goal or reality.
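To make the nines concrete, the sketch below converts nines to fidelity and then estimates the chance that a circuit runs with no error at all. It follows the convention used above, where a half nine means half the error (2.5 nines is 99.5%), and it assumes errors are independent and identical for every gate, which is a simplification. The function names are illustrative only.

```python
def fidelity_from_nines(whole_nines: int, half: bool = False) -> float:
    """Convert nines to fidelity; a half nine halves the remaining error."""
    error = 10.0 ** -whole_nines
    if half:
        error /= 2
    return 1.0 - error


def error_free_probability(fidelity: float, gate_count: int) -> float:
    """Chance that every gate succeeds, assuming independent errors."""
    return fidelity ** gate_count


levels = {
    "2 nines": fidelity_from_nines(2),
    "2.5 nines": fidelity_from_nines(2, half=True),
    "3 nines": fidelity_from_nines(3),
    "3.5 nines": fidelity_from_nines(3, half=True),
    "4 nines": fidelity_from_nines(4),
}

for label, fidelity in levels.items():
    for gates in (100, 1000):
        chance = error_free_probability(fidelity, gates)
        print(f"{label}, {gates} gates: {chance:.3f}")
```

Under these assumptions, a 1,000-gate circuit at four nines runs cleanly about nine times out of ten, while at two nines it almost never does.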

In short, we need to move beyond noisy NISQ qubits ASAP. Not all the way to perfect logical qubits enabled by quantum error correction (QEC) — near-perfect qubits should be sufficient.

For more on near-perfect qubits, see my paper:

- What Is a Near-perfect Qubit?

- https://jackkrupansky.medium.com/what-is-a-near-perfect-qubit-4b1ce65c7908

For more on nines of qubit fidelity, see my paper:

Perfect logical qubits and quantum error correction (QEC) are not needed in this timeframe

As already mentioned in the preceding section, noisy NISQ qubits will not be sufficient for the types of sophisticated and complex quantum algorithms which we will want to be producing in a year or two. But that doesn’t mean that we will need the full perfection of perfect logical qubits enabled by quantum error correction (QEC) — near-perfect qubits should be sufficient.

But just because we won’t require perfect logical qubits enabled by quantum error correction (QEC) in this timeframe doesn’t mean we won’t need them in the immediately succeeding timeframe, in three to four or five years. We probably will need them then.

This implies that we need to focus significant resources on research over the next two or three years so that three to four years from now we are positioned to actually engineer products based on perfect logical qubits enabled by quantum error correction (QEC).

For more detail on perfect logical qubits, quantum error correction (QEC), and fault-tolerant quantum computing in general, see my paper:

- Preliminary Thoughts on Fault-Tolerant Quantum Computing, Quantum Error Correction, and Logical Qubits

- https://jackkrupansky.medium.com/preliminary-thoughts-on-fault-tolerant-quantum-computing-quantum-error-correction-and-logical-1f9e3f122e71

Architectural improvements needed to enhance transmon qubit connectivity

Although trapped-ion qubits and neutral-atom qubits may have inherent advantages for qubit connectivity, supporting full any-to-any connectivity, superconducting transmon qubits are another story, and may require significant architectural improvements to achieve fast, efficient, and reliable two-qubit quantum logic gate execution.

At best, transmon qubits have only nearest-neighbor connectivity. Otherwise, expensive and error-prone SWAP networks are needed to move the quantum states of two qubits closer to each other for gate execution. See more detail in the section on SWAP networks below.

I personally don’t see any way out of this conundrum other than a significant improvement in architecture, such as a quantum state bus, shuttling, or some other more modular architecture, to get the quantum states of two qubits adjacent to each other so that two-qubit quantum logic gates can be executed rapidly, efficiently, and reliably.

Exactly what specific architectural change is needed is beyond my knowledge and beyond the scope of this informal paper, but something is needed, otherwise transmon qubits will quickly fade into irrelevance, overtaken by trapped-ion qubits or some other qubit technology.

Transmon qubit proponents need to announce a connectivity strategy and roadmap

As noted in the preceding section, architectural improvements are needed to enhance superconducting transmon qubit connectivity. Clearly additional research is needed, and I’m not confident that it can be completed over the next two years. But what the proponents of transmon qubits (both researchers and vendors) can and should do within the next two years is to devise and announce a strategy for how they expect to address and resolve the connectivity deficit, as well as a credible roadmap for achieving something much closer to full connectivity.

I would hope and want and expect to see at least prototypes of transmon qubits with enhanced connectivity, say, by the 18-month mark of the two-year forecast period. Whether that is actually feasible remains to be seen. If it in fact takes longer, so be it, but people need to fully appreciate that the clock is ticking and ticking very loudly.

But I would really expect at least a strategy and roadmap to be announced no later than the one-year mark. The details or level of detail are not so important as the commitment to resolution of the issue.

Failure to commit to and deliver a solution to this issue could likely result in a Quantum Winter, at least for transmon qubits.

And failure to commit to and deliver a solution to this issue will effectively cede the future of quantum computing to trapped-ion, neutral-atom, and other approaches to qubit technology.

Transmon qubits have had their season in the sun with minimal connectivity, but the connectivity chickens are poised to come home to roost in the not too distant future.

I give them six months to a year, maybe two, but that will likely be the end of the line for transmon qubits as they exist today with limited connectivity.

RIP: Ode to SWAP networks

Qubit connectivity is a critical technical requirement for sophisticated quantum algorithms. Currently, qubit connectivity is generally mediocre at best. Trapped-ion qubits and neutral-atom qubits are the exceptions.

Generally, nearest-neighbor connectivity is the norm, particularly for superconducting transmon qubits. Google Sycamore provides nearest-neighbor connectivity for all qubits. IBM and Rigetti provide even more limited qubit connectivity with rectangular arrangements of qubits having connectivity only to two adjacent qubits, and three connections where the rectangular arrangements intersect. That’s it.

Sure, nearest-neighbor connectivity works fairly well for very small quantum circuits, but is very problematic for larger circuits. I surmise that this is part of the reason why there are so few 32 to 40-qubit algorithms.

Trapped-ion qubits (and probably neutral-atom qubits as well) don’t have this limitation. They support full any-to-any qubit connectivity. No limitations.

All is not lost for transmon qubits with only nearest-neighbor connectivity. There’s a clever hack to simulate greater connectivity by using so-called SWAP networks, also called routing, where adjacent pairs of qubits can be swapped — their quantum states are exchanged — so that the quantum state of one qubit can be incrementally moved until it is in fact a nearest-neighbor of a target qubit. That’s clever, but not so effective for larger numbers of noisy qubits — the qubit error rate accumulates with each SWAP. A small number of SWAPs may be fine (depending on the application), but a large number of SWAPs can be quite problematic.
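The accumulation of error can be sketched with a simple model. The assumptions here are mine, not measurements: qubits sit on a single line, each SWAP is built from three CNOT gates (the standard decomposition), every CNOT has the same fidelity, and errors are independent. The function names are illustrative.

```python
def swaps_needed(position_a: int, position_b: int) -> int:
    """SWAPs required to make two qubits on a line adjacent."""
    distance = abs(position_a - position_b)
    return max(distance - 1, 0)


def routing_fidelity(two_qubit_fidelity: float,
                     swap_count: int,
                     cnots_per_swap: int = 3) -> float:
    """Fidelity left after routing, assuming independent CNOT errors."""
    return two_qubit_fidelity ** (swap_count * cnots_per_swap)


swaps = swaps_needed(0, 10)
for fidelity in (0.99, 0.999, 0.9999):
    remaining = routing_fidelity(fidelity, swaps)
    print(f"CNOT fidelity {fidelity}: {swaps} SWAPs leave {remaining:.4f}")
```

In this model, bringing together two qubits that sit ten positions apart costs nine SWAPs, or 27 CNOTs, before the intended gate even runs. At two nines that alone loses roughly a quarter of the fidelity, and the cost is paid again for every distant pair in the circuit.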

The advent of near-perfect qubits with a dramatically-reduced error rate will help, but only to some degree.

SWAP networks are a temporary, stopgap measure. They’ve been somewhat effective so far, but we’ve only been working with smaller quantum circuits and qubit counts so far.

I surmise that getting past 25-qubit algorithms will generally require getting past SWAP networks as well.

So, SWAP networks RIP — Rest In Peace.

Unclear how much improvement in coherence time is needed in this time period

Qubit coherence time definitely needs improvement, although trapped-ion and neutral-atom qubits may not have as big a problem as transmon qubits. It affects both qubit fidelity and maximum circuit depth. Today we get by with very shallow quantum circuits, primarily limited by qubit fidelity and limited qubit connectivity, so that coherence time itself is not the key limiting factor for deep quantum circuits. But how much of an improvement in coherence time is needed over the next two years? It’s unknown and likely to vary from algorithm to algorithm and from application to application.

Sometime over the next two years qubit fidelity and qubit connectivity will become enhanced to the point where coherence time becomes the dominant limiting factor for more sophisticated, complex, and deeper quantum circuits.

Even with enhanced qubit fidelity and connectivity, coherence time alone is not the key limiting factor. Gate execution time also directly impacts maximum circuit depth. Faster gate execution would mean greater circuit depth for the same coherence time. Multiply circuit depth by average gate execution time to get minimum coherence time, or divide coherence time by average gate execution time to get maximum circuit depth.
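The two rules of thumb in the preceding paragraph can be sketched as a pair of helper functions. The names, the microsecond units, and the example numbers are assumptions chosen for illustration. Real hardware has different execution times for different gates, so the average gate time is itself an approximation.

```python
def min_coherence_time_us(circuit_depth: int, avg_gate_time_us: float) -> float:
    """Minimum coherence time (microseconds) needed for a given circuit depth."""
    return circuit_depth * avg_gate_time_us


def max_circuit_depth(coherence_time_us: float, avg_gate_time_us: float) -> int:
    """Maximum circuit depth that fits within a given coherence time."""
    if avg_gate_time_us <= 0:
        raise ValueError("average gate time must be positive")
    # Only whole gates count, so round down.
    return int(coherence_time_us // avg_gate_time_us)


# Hypothetical machine: 0.5 microsecond average gate time.
print(min_coherence_time_us(250, 0.5))  # coherence needed for 250 gates
print(max_circuit_depth(100.0, 0.5))    # depth available in 100 microseconds
```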

So, how deep will quantum circuits need to be over the next two years? Some possibilities:

- A few dozen gates. A slam-dunk minimum.

- 50 gates.

- 75 gates.

- 100 gates.

- 175 gates.

- 250 gates.

- 500 gates.

- 750 gates.

- 1,000 gates.

- 1,500 gates.

- 2,500 gates.

- 5,000 gates.

- 10,000 gates.

- More?

Some additional points to consider:

- Total circuit size vs. maximum circuit depth. Does it matter or not?

- How might algorithms evolve over the next two years? The long slow march to production-scale. Advent of use of quantum Fourier transform (QFT) and quantum phase estimation (QPE) for nontrivial input sizes.

- Requirements for larger quantum Fourier transforms (QFT). For transform sizes in the range of 8 to 32 or even 40 bits. What will their key limiting factor(s) be? Will qubit fidelity and connectivity be bigger issues than coherence time and maximum circuit depth?

In any case, I’ll just plant my stake in the ground and suggest a minimum of 250 gates as the maximum circuit depth two years from now. I could easily be persuaded that 1,000 gates would be a better minimum for maximum circuit depth. Again, multiply circuit depth by average gate execution time to get minimum coherence time, or divide coherence time by average gate execution time to get maximum circuit depth.

Quantum advantage requires dramatic improvement

At present there is no notable quantum advantage for quantum solutions over classical solutions. We need to see some significant hardware advances in qubit fidelity and connectivity before we can begin to measure quantum advantage.

First we need to hit minimal quantum advantage — on the order of 1,000X a classical solution.

Then we need to gradually evolve towards substantial or significant quantum advantage — on the order of 1,000,000X a classical solution. How far towards that goal we can get in the next year or two is anybody’s guess at this stage.

Beyond the next two years we need to see evolution beyond that up to even full dramatic quantum advantage on the order of one quadrillion X compared to classical solutions. Whether that takes three, four, five or even seven years is an open question.
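To get a feel for these three tiers, it can help to ask how many qubits each multiplier corresponds to. This is a sketch under one loud assumption: that the advantage comes from quantum parallelism over 2^k states using k qubits. The tier labels and the function name are illustrative.

```python
# Advantage multipliers taken from the three tiers described above.
ADVANTAGE_TIERS = {
    "minimal": 1_000,
    "substantial or significant": 1_000_000,
    "dramatic": 1_000_000_000_000_000,  # one quadrillion
}


def qubits_for_advantage(multiplier: int) -> int:
    """Smallest k such that 2**k >= multiplier (assumes 2**k parallelism)."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return (multiplier - 1).bit_length()


for tier, multiplier in ADVANTAGE_TIERS.items():
    print(f"{tier}: about {qubits_for_advantage(multiplier)} qubits")
```

Under that assumption the tiers line up with roughly 10, 20, and 50 qubits used in a single quantum parallel computation.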

For more on minimal quantum advantage and substantial or significant quantum advantage, which together I call fractional quantum advantage, see my paper:

- Fractional Quantum Advantage — Stepping Stones to Dramatic Quantum Advantage

- https://jackkrupansky.medium.com/fractional-quantum-advantage-stepping-stones-to-dramatic-quantum-advantage-6c8014700c61

For more on dramatic quantum advantage, see my paper:

- What Is Dramatic Quantum Advantage?

- https://jackkrupansky.medium.com/what-is-dramatic-quantum-advantage-e21b5ffce48c

Still in pre-commercialization

Overall, the technology of quantum computing is still not even close to being ready for commercialization. I refer to it as pre-commercialization. This means:

- Many technical questions remain unanswered.

- Much research is needed.

- Experimentation is the norm.

- Prototyping is common.

- Premature commercialization is a very real risk. The technology just isn’t ready. Avoid commercialization until the technology is ready. But premature attempts to commercialize quantum computing will be common.

Further, not only are we presently in pre-commercialization, but we will likely still be in pre-commercialization at the end of the two-year period.

Sure, maybe, we might be close to finishing pre-commercialization in two years, but we may still need another year or two or even three before enough of the obstacles have been overcome that true commercialization can begin in earnest.

For more on pre-commercialization, commercialization, and premature commercialization, see my paper:

- Model for Pre-commercialization Required Before Quantum Computing Is Ready for Commercialization

- https://jackkrupansky.medium.com/model-for-pre-commercialization-required-before-quantum-computing-is-ready-for-commercialization-689651c7398a

For the larger context of getting to commercialization, see my paper:

- Prescription for Advancing Quantum Computing Much More Rapidly: Hold Off on Commercialization but Double Down on Pre-commercialization

- https://jackkrupansky.medium.com/prescription-for-advancing-quantum-computing-much-more-rapidly-hold-off-on-commercialization-but-28d1128166a

Not yet time for commercialization

Just to reemphasize the point from the previous section that the technology of quantum computing is still not even close to being ready for commercialization — there is much left to do for pre-commercialization. See the citations from the preceding section.

Since it is not yet time for commercialization, any attempts to do so run the risk of premature commercialization as cited in the preceding section.

Even in two years we may still be deep in pre-commercialization

Not only are we presently in pre-commercialization, but there is a fair chance that we will still be in pre-commercialization at the end of the two-year period.

Sure, maybe, we might be close to finishing pre-commercialization in two years, but we may still need another year or two or even three before enough of the obstacles have been overcome that true commercialization can begin in earnest.

Avoid premature commercialization

Just to reemphasize the point from three sections ago, that premature commercialization is a very real risk:

- The technology just isn’t ready.

- Avoid commercialization until the technology is ready.

- But premature attempts to commercialize quantum computing will be common.

- Premature commercialization can lead to disenchantment over broken or unfulfilled promises.

- Disenchantment due to premature commercialization is the surest path to a Quantum Winter.

Ongoing research

Many of the advances over the next two years will have to be based on research results which are already published or will be published over the next six to 12 months. The main focus of research over the next 12 to 18 months will lay the groundwork for the advances which will occur in the stage beyond the next two years. Such areas include:

- Higher-level programming models.

- Much higher-level and richer algorithmic building blocks.

- Rich libraries.

- Application frameworks.

- Foundations for configurable packaged quantum solutions.

- Quantum-native programming languages.

- Identify and develop more advanced qubit technologies. Which are more inherently isolated. More readily and reliably connectable. Finer granularity of phase and probability amplitude.

- More innovative hardware architectures. Such as modular and bus architectures for qubit connectivity.

- Quantum error correction (QEC). To enable perfect logical qubits.

- Application category-specific benchmarks.

- Some real application using 100 or even 80 qubits. Something that really pushes the limits. We do need these within two years, but it’s a stretch goal, so it may take another year or two after that.

- Modeling circuit repetitions. Take the guesswork out of shot count (circuit repetitions).

- And more.

For a more complete elaboration of research topics see my paper:

- Essential and Urgent Research Areas for Quantum Computing

- https://jackkrupansky.medium.com/essential-and-urgent-research-areas-for-quantum-computing-302172b12176

Need a replacement for Quantum Volume for higher qubit counts

We need a replacement for Quantum Volume as the metric for quantum computation quality for higher qubit counts. Quantum Volume only works for quantum computers with fewer than about 50 qubits, maybe (probably) even fewer. This is because calculating Quantum Volume involves simulation of a quantum circuit whose width (qubit count) and circuit depth (layers) are equal, but simulation only works for fewer than about 50 qubits since 2^n quantum states are needed for n qubits, which is an incredible amount of storage once n approaches 50: 2⁵⁰ is roughly one quadrillion quantum states.

50 qubits is the theoretical or practical limit for simulation of quantum circuits, but practical considerations (storage requirements) may in fact limit Quantum Volume simulations to 32–40 qubits or even less.
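The storage problem can be made concrete with a small sketch. It assumes a full state-vector simulation that stores one double-precision complex amplitude (16 bytes) per quantum state. Other simulation techniques have different costs, and the helper names here are illustrative.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per quantum state


def state_vector_bytes(qubits: int) -> int:
    """Memory needed to hold all 2**n amplitudes for n qubits."""
    return (2 ** qubits) * BYTES_PER_AMPLITUDE


def human_readable(n_bytes: int) -> str:
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1024


for n in (32, 40, 44, 48, 50):
    print(n, human_readable(state_vector_bytes(n)))
```

Under this assumption 32 qubits need 64 GiB, 40 qubits need 16 TiB, and 50 qubits need 16 PiB, which is why 32 to 40 qubits is the more practical ceiling.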

Exactly what the replacement metric for quantum computation quality should be is beyond the scope of this paper.

For more detail on this issue, see my paper:

- Why Is IBM’s Notion of Quantum Volume Only Valid up to About 50 Qubits?

- https://jackkrupansky.medium.com/why-is-ibms-notion-of-quantum-volume-only-valid-up-to-about-50-qubits-7a780453e32c

Need application category-specific benchmarks

Generic benchmarks, such as Quantum Volume, are interesting, but only marginally useful. They don’t tell people what they really need to know, which is how quantum computers will perform for the types of applications in which they are interested. They simply aren’t realistic.

To wit, we need application-specific benchmarks. Or, technically, application category-specific benchmarks.

Each quantum application category needs its own benchmark or collection of benchmarks. There will tend to be some overlap, but it will be the differences that matter.

The real bottom line is that these benchmarks must be realistic and reflect typical application usage.

How much of this will occur within the next two years remains to be seen, but some amount or some preliminary benchmarks should be possible.

We need some real application using 100 or even 80 qubits

Although I do think the main focus should be on algorithms using 32 to 40 qubits, I also think that we do need to see at least one algorithm which seeks to exploit 100 or at least 80 qubits. Something that really pushes the limits. Something that highlights both what can be reached as well as what still can’t be reached.

And this does need to be a realistic, production-scale practical real-world application, not some contrived computer science experiment.

Whether this goal can be met within two years is open to debate. It does look problematic, but it remains at least a remote possibility, which makes it a viable stretch goal.

Even if it can’t be done in two years, work on the research front will be well worth the effort and will lay the groundwork for meeting the goal within another year.

Indeed, if we can’t meet this goal in three years, then you know that we are in very bad shape.

Some potential for algorithms up to 160 qubits

Although the main focus over the next two years will be 32 to 40-qubit algorithms, with some applications using 100 or at least 80 qubits, there is some realistic potential to see some practical algorithms which utilize up to 160 qubits.

Maybe it’s more realistic to posit algorithms using 120 to 140 qubits. But possibly stretching to 160 as well.

Or at least 110 to 125 qubits.

The real point is that all new algorithms should be scalable, testable and simulatable using a smaller number of qubits, and then testable at a larger number of real qubits.

A typical progression might be:

- 16 qubits.

- 24 qubits.

- 32 qubits.

- 40 qubits.

- 56 qubits.

- 64 qubits.

- 72 qubits.

- 80 qubits.

- 96 qubits.

- 112 qubits.

- 128 qubits.

- 136 qubits.

- 144 qubits.

- 156 qubits.

- 160 qubits.

Of course, we should expect quantum algorithms to be able to scale well beyond that, but this would be a great start. A great proof of concept.
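A minimal sketch of how such a progression might be split between classical simulation and real hardware follows. The 40-qubit simulator ceiling is an assumed placeholder rather than a hard limit, and `plan_runs` is a hypothetical helper, not part of any existing toolkit.

```python
# Qubit counts from the progression listed above.
PROGRESSION = [16, 24, 32, 40, 56, 64, 72, 80, 96, 112, 128, 136, 144, 156, 160]


def plan_runs(sizes, simulator_limit=40):
    """Pair each qubit count with the places it can be tested.

    Sizes at or below simulator_limit are run on both a simulator and
    hardware so results can be cross-checked. Larger sizes can only be
    run on real hardware.
    """
    plan = []
    for qubits in sizes:
        targets = ["hardware"]
        if qubits <= simulator_limit:
            targets.insert(0, "simulator")
        plan.append((qubits, targets))
    return plan


for qubits, targets in plan_runs(PROGRESSION):
    print(f"{qubits:>3} qubits: {', '.join(targets)}")
```

The point of the split is that confidence in the larger, hardware-only runs has to come from how the algorithm scaled across the smaller sizes where simulation could still check the results.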

We’re still in stage 0 of the path to commercialization and widespread adoption of quantum computing

Achievement of the technical goals outlined in this paper will leave us poised to begin stage 1, the final path to The ENIAC Moment, the first stage of the path to commercialization and the widespread adoption of quantum computing. The work outlined here lays the foundation for stage 1 to follow.

I see widespread adoption of quantum computing as coming in three stages:

- A few hand-crafted applications (The ENIAC Moment). Limited to super-elite technical teams.

- A few configurable packaged quantum solutions. Focus super-elite technical teams on generalized, flexible, configurable applications which can then be configured and deployed by non-elite technical teams. Each such solution can be acquired and deployed by a fairly wide audience of users and organizations without any quantum expertise required.

- Higher-level programming model (The FORTRAN Moment). Which can be used by more normal, average, non-elite technical teams to develop custom quantum applications. Also predicated on perfect logical qubits based on full, automatic, and transparent quantum error correction (QEC).

For more detail on this three-stage model for widespread adoption of quantum computing, see my paper:

- Three Stages of Adoption for Quantum Computing: The ENIAC Moment, Configurable Packaged Quantum Solutions, and The FORTRAN Moment

- https://jackkrupansky.medium.com/three-stages-of-adoption-for-quantum-computing-the-eniac-moment-configurable-packaged-quantum-9dd8d8a7cbfd

A critical mass of these advances is needed

Technically, all of the advances listed in this paper will be needed within two years for quantum computing to remain on track for supporting production-scale practical real-world quantum applications, but likely we can get to at least some of those applications with less than 100% of the listed advances, and maybe with less than 100% of each advance. In any case, a critical mass of these advances will be needed to enable such production-scale quantum applications.

But what would that critical mass be? What would be that path to get to that critical mass? Great questions, but I’m afraid the answers cannot be known definitively at this time or at any time in the foreseeable future.

Rather than being a specific detailed list with a detailed plan for getting there, the critical mass will instead emerge from a discovery process: we’ll learn as we go.

Researchers and practitioners will conceptualize, design, and implement the specific technologies that they need as they go.

The simple answer to the question of what the critical mass will be is that we’ll know it when we get there, once we see it right in front of us — once researchers and practitioners have all of the critical technologies that they need to actually implement one or more production-scale practical real-world quantum applications, which I have been calling The ENIAC Moment.

Which advance is the most important, critical, and urgent — the top priority?

There are so many technical advances vying for achieving critical mass that it’s virtually impossible to say that any single one is the most important, the most critical, the most urgent, and should be the top priority.

I can tell you some of the top candidates, but not in any priority or importance order:

- Higher qubit fidelity is needed.

- Greater qubit connectivity is needed.

- Greater qubit coherence time and circuit depth are needed.

- Finer granularity of qubit phase is needed.

- Higher fidelity qubit measurement is needed.

- Quantum advantage needs to be reached.

- Near-perfect qubits are required.

- Algorithms need to be automatically scalable. Generative coding and automated analysis to detect scaling problems.

- Support quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum. Most of the hardware advances are needed to support QFT and QPE.

Really, seriously, they’re all super-important — and required.

In my Christmas 2021 and New Year 2022 wish list for quantum advances I did actually identify a single top priority:

- Hardware capable of supporting quantum Fourier transform (QFT) and quantum phase estimation (QPE). Hopefully for at least 20 to 30 qubits, but even 16 or 12 or 10 or even 8 qubits would be better than nothing. Support for 40 to 50 if not 64 qubits would be my more ideal wish, but just feels way too impractical at this stage. QFT and QPE are the only viable path to quantum parallelism and ultimately to dramatic quantum advantage.

But then I noted that this was a clever cheat since it depends on a list of other advances:

- Qubit fidelity. Low error rate.

- Qubit connectivity. Much better than only nearest neighbor. Sorry, SWAP networks don’t cut it.

- Gate fidelity. Low error rate. Especially 2-qubit gates.

- Measurement fidelity. Low error rate.

- Coherence time and circuit depth. Can’t do much with current hardware.

- Fine granularity of phase. At least a million to a billion gradations — to support 20 to 30 qubits in a single QFT or QPE.
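The link between qubit count and phase gradations can be sketched as follows. It assumes the textbook quantum Fourier transform, in which an n-qubit transform uses controlled phase rotations as small as 2π/2^n, so that 2^n distinct phase values need to be resolvable. The function names are illustrative, and approximate QFT variants that drop the smallest rotations would relax this requirement.

```python
import math


def phase_gradations(qubits: int) -> int:
    """Distinct phase values implied by an n-qubit textbook QFT."""
    return 2 ** qubits


def smallest_rotation(qubits: int) -> float:
    """Smallest controlled phase rotation in radians: 2*pi / 2**n."""
    return 2 * math.pi / phase_gradations(qubits)


for n in (8, 16, 20, 30):
    print(n, phase_gradations(n), smallest_rotation(n))
```

For 20 qubits that comes to about a million gradations, and for 30 qubits about a billion, which matches the range quoted in the list above.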

In the end, I did settle on a single top priority or preference:

- Fine granularity of phase. At least a million to a billion gradations — to support 20 to 30 qubits in a single QFT or QPE.

But even there, that’s a cheat since it also depends on overall improvements in qubit fidelity.

So, in the very end, I did settle on a single top priority:

- Qubit fidelity of 3.5 nines. Approaching near-perfect qubits. I really want to see four nines (99.99%) within two years, but even IBM is saying that they won’t be able to deliver that until 2024. Trapped-ions may do better.

Ultimately I did not find that to be a satisfying process, but it is what it is.

My current preference is to focus on the two-year horizon and not worry so much about where to start — it’s the end that matters, and the journey.

My Christmas and New Year wish list

For reference, here’s my Christmas 2021 and New Year 2022 wish list of the quantum advances that I wanted to see in the coming year (2022), as of December 2021, which overlaps with the goals of this paper to a significant degree:

- My Quantum Computing Wish List for Christmas 2021 and New Year 2022

- https://jackkrupansky.medium.com/my-quantum-computing-wish-list-for-christmas-2021-and-new-year-2022-b24369278262

From that paper, here are my top ten Christmas 2021 and New Year 2022 wishes, not in any strict order per se:

- Qubit fidelity of 3.5 nines. Approaching near-perfect qubits. I really want to see four nines (99.99%) within two years, but even IBM is saying that they won’t be able to deliver that until 2024. Trapped-ions may do better.

- Hardware capable of supporting quantum Fourier transform (QFT) and quantum phase estimation (QPE). Hopefully for at least 20 to 30 qubits, but even 16 or 12 or 10 or even 8 qubits would be better than nothing. Support for 40 to 50 if not 64 qubits would be my more ideal wish, but just feels way too impractical at this stage. QFT and QPE are the only viable path to quantum parallelism and ultimately to dramatic quantum advantage.

- Fine granularity of phase. Any improvement or even an attempt to characterize phase granularity at all. My real wish is for at least a million to a billion gradations — to support 20 to 30 qubits in a single QFT or QPE, but I’m not holding my breath for this in 2022.

- At least a handful of automatically scalable 40-qubit algorithms. And plenty of 32-qubit algorithms. Focused on simulation rather than real hardware since qubit quality is too low. Hopefully using quantum Fourier transform (QFT) and quantum phase estimation (QPE).

- Simulation of 44 qubits. I really want to see 48-qubit simulation — and plans for research for 50 and 54-qubit simulation. Including support for deeper circuits, not just raw qubit count. And configurability to closely match real and expected quantum hardware over the next few to five to seven years.

- At least a few programming model improvements. Or at least some research projects initiated.

- At least a handful of robust algorithmic building blocks. Which are applicable to the majority of quantum algorithms.

- At least one new qubit technology. At least one a year until we find one that really does the job.

- At least some notable progress on research for quantum error correction (QEC) and logical qubits. Possibly a roadmap for logical qubit capacity. When can we see even a single logical qubit and then two logical qubits, and then five logical qubits and then eight logical qubits?

- A strong uptick in research spending. More new programs and projects as well as more spending for existing programs and projects.

Is a Quantum Winter likely in two years? No, but…

I do think that enough progress is being made on most fronts for quantum computing to achieve a critical mass of the advances enumerated in this paper: many or most of them, if not all. If all of this transpires, then a so-called Quantum Winter is rather unlikely in two years.

Why might a Quantum Winter transpire? It would commence primarily due to disenchantment over the failure of the technology to fulfill the many promises made for it, with quantum computing not solving the types of problems that it was claimed to solve. So, as long as the vast bulk of the promises (a critical mass) are fulfilled, there should be no Quantum Winter.

But, fulfilling all of those promises is by no means a slam dunk.

But, a smattering of unfulfilled promises amid a sea of fulfilled promises would not be likely to be enough to lead to the level of disenchantment that would trigger or fuel a Quantum Winter.

So, the question is what the threshold balance is between unfulfilled promises and fulfilled promises which constitutes a critical mass sufficient to dip the sector into a Quantum Winter. That’s a great unknown — we’ll know it only as it happens in real-time since it relies on human psychology and competing and conflicting human motivations.

If a Quantum Winter were to transpire, it would have some stages:

- Critical technical metrics not achieved. Such as low qubit fidelity and connectivity, or still no 32 to 40-qubit algorithms.

- Quantum Winter alert. Early warning signs. Beyond the technical metrics. Such as projects seeing their budgets and hiring frozen.

- Quantum Winter warning. Serious warning signs. Some high-profile projects failing.

- Quantum Winter. Difficulty getting funding for many projects. Funding and staffing cuts. Slow pace of announced breakthroughs.

- Severe Quantum Winter. Getting even worse. Onset of psychological depression. People leaving projects. People switching careers.

Critical technical gating factors which could presage a Quantum Winter in two years

Some of the most critical technical gating factors which could send the sector spiraling down into a Quantum Winter in two years include:

- Lack of near-perfect qubits.

- Still only nearest-neighbor connectivity for transmon qubits. Transmon qubits haven’t adapted architecturally.

- Lack of reasonably fine granularity of phase. Needed for quantum Fourier transform (QFT) and quantum phase estimation (QPE) to enable quantum advantage, such as for quantum computational chemistry in particular.

- Fewer than 32 qubits on trapped-ion machines.

- Dearth of 32 to 40-qubit algorithms.

- Failure to achieve even minimal quantum advantage.

- Rampant and restrictive IP (intellectual property) impeding progress. Intellectual property (especially patents) can either help or hinder adoption of quantum computing.

In short, I’m not predicting a Quantum Winter in two years and think it’s unlikely, but it’s not out of the question if a substantial fraction of the goals from this paper are not achieved, or at least do not give the appearance that achievement is imminent.

Ultimately, a Quantum Winter is about psychology — whether people, including those controlling budgets and investment, can viscerally feel that quantum computing is already actually achieving goals or at least palpably close to achieving them.

A roadmap for the next two years?

I’ve thought about it, but I decided that the field is so dynamic and so full of surprises and so filled with uneven progress that a methodical roadmap is just out of the question.

Some of the specific technical factors could have steady incremental progress over the two-year period, but periods of stagnation punctuated with occasional quantum leaps are just as likely.

Where might we be in one year?

For the same reasons that a roadmap of progress is impractical, predicting where we might be at the halfway point of one year is equally fraught with uncertainty.

In fact, part of the reason I focused on two years rather than one year was that uncertainty. Loosening the forecast horizon to two years leaves more room for uneven progress, and for the simple fact that some technical factors could indeed remain mired in swamps of slow or even negligible progress for quite some time before hitting breakthroughs which enable more rapid progress later in the forecast period.

From an alternative perspective, maybe one can expect or hope that half of the technical factors will make great progress and already be at or near their two-year goals by the one-year mark, allowing people to then focus more energy on the other half of the technical goals for the other half of the forecast period.

RIP: Ode to NISQ

It almost goes without saying, but achieving the technical goals from this paper would imply the end of the NISQ era. After all, the N in NISQ means noisy qubits, which is the antithesis of near-perfect qubits.

Technically, this paper is downplaying qubit count, suggesting that even 160 to 256 qubits would be a high-end that only some applications would need in the forecast period of two years. Even 256 qubits is well within the range of “50 to a few hundred”, which is how Preskill defined the IS of NISQ, so that a portion of NISQ would remain intact at the end of the two-year forecast period of this paper.

Actually, IBM is promising the 433-qubit Osprey later this year and the 1,121-qubit Condor a year later, which would exceed Preskill’s upper bound of “a few hundred” for intermediate scale, so at least those two machines would obsolete the IS of NISQ, although it is uncertain whether either or both machines would achieve near-perfect qubits as well. If not, then they would become what I call NLSQ devices — noisy large-scale quantum devices.

There is a minor semantic question of what the precise numeric criterion is for “a few hundred”: 300 or 399 or 499 or what? At a minimum, 433 is in the gray zone where it could be considered as either a few hundred or more than a few hundred as a fielder’s choice.

But the essential focus of NISQ really was on the noisiness of the qubits rather than the raw qubit count.

In my proposed new terms, NISQ would be replaced with NPISQ — for near-perfect intermediate-scale quantum devices.

For quantum computers with fewer than 50 qubits, say 48 qubits, I would suggest the term NPSSQ, for near-perfect small-scale quantum devices.

I also suggest an even finer distinction for under 50 qubits:

- NPTSQ. Near-perfect tiny/toy-scale quantum devices. One to 23 near-perfect qubits.

- NPMSQ. Near-perfect medium-scale quantum devices. 24 to 49 near-perfect qubits.

But if as suggested by this paper 48 qubits becomes the new minimum, NPTSQ would no longer be needed, so that NPSSQ and NPMSQ would be virtually identical.
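The terms above can be summarized as a small lookup. This is only a sketch: the upper bound for “a few hundred” is left as a parameter because the text treats it as ambiguous (499 is an arbitrary default), the finer NPTSQ and NPMSQ terms are returned in place of NPSSQ for under 50 qubits, and the function returns None for combinations this section does not name. The function name `classify` is illustrative.

```python
def classify(qubits: int, near_perfect: bool, few_hundred_max: int = 499):
    """Map a device to one of the terms used in this section, else None."""
    if qubits < 1:
        raise ValueError("qubits must be at least 1")
    if near_perfect:
        if qubits <= 23:
            return "NPTSQ"  # near-perfect tiny/toy-scale
        if qubits <= 49:
            return "NPMSQ"  # near-perfect medium-scale
        if qubits <= few_hundred_max:
            return "NPISQ"  # near-perfect intermediate-scale
        return None  # near-perfect large-scale: no term given in this section
    if qubits < 50:
        return None  # noisy and below intermediate scale: no term given here
    if qubits <= few_hundred_max:
        return "NISQ"  # noisy intermediate-scale
    return "NLSQ"  # noisy large-scale


print(classify(48, near_perfect=True))
print(classify(433, near_perfect=False))
print(classify(433, near_perfect=False, few_hundred_max=399))
print(classify(1121, near_perfect=False))
```

The two results for 433 noisy qubits show the gray zone directly: the label flips between NISQ and NLSQ depending on where “a few hundred” is taken to end.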

Who knows… maybe once we get past the noisiness of NISQ, we should simply drop the term entirely rather than use some replacement term which becomes redundant with the reality of quantum computers once the advances listed in this paper become the norm.

For more on my proposed terms, see my paper:

- Beyond NISQ — Terms for Quantum Computers Based on Noisy, Near-perfect, and Fault-tolerant Qubits

- https://jackkrupansky.medium.com/beyond-nisq-terms-for-quantum-computers-based-on-noisy-near-perfect-and-fault-tolerant-qubits-d02049fa4c93

On track to… end of the NISQ era and the advent of the Post-NISQ era

One could certainly interpret the achievement of the technical milestones of this paper — particularly near-perfect qubits — as marking the end of the NISQ era and the advent of the Post-NISQ era. No more noisy qubits!

Whether to also require a minimum of 400 or 500 qubits (or more — or less) to mark being beyond intermediate-scale is a matter of debate.

Personally I think moving beyond noisy qubits is both necessary and sufficient to end the NISQ era and begin the Post-NISQ era.

RIP: Noisy qubits

To me, personally, noisy qubits (low qubit fidelity) are the greatest impediment to doing anything truly useful with quantum computers. Once we achieve near-perfect qubits, noisy qubits will be a thing of the past.

In truth, weak qubit connectivity (nearest-neighbor) is just as important as low qubit fidelity — they’re both equally important technical hurdles to overcome. It’s just that noisy qubits garner more of the attention.

Details for other advances

My apologies for not providing detail on all of the advances listed in this paper. I did detail those that I consider most important or at least where I felt I had something to say. And some topics may be discussed in other informal papers which I have written.

But just because I didn’t include a separate section on some listed advance is not intended to indicate that it has or should have a lower priority.

As a general proposition, all of the advances should be listed and have some comments in my research paper:

- Essential and Urgent Research Areas for Quantum Computing

- https://jackkrupansky.medium.com/essential-and-urgent-research-areas-for-quantum-computing-302172b12176

And what advances are needed beyond two years?

Curiously, most of the writing I’ve done over the past several years on quantum computing has been about the long-term, more than two years in the future, but here in this paper I limit my focus to the next two years. Put simply, the advances needed beyond two years are beyond the scope of this current paper.

It’s worth noting that a lot of what will happen three, four, and five years from now will be a fairly straight-line extrapolation of the work over the next two years outlined in this paper. There will be plenty of exceptions to that rule, but it’s a fair start.

That said, I do mention some of the areas where advances are needed beyond the next two years in my sections on research. In short, if you want to get a sense of what needs to happen more than two years in the future, my research paper is the place to start:

- Essential and Urgent Research Areas for Quantum Computing

- https://jackkrupansky.medium.com/essential-and-urgent-research-areas-for-quantum-computing-302172b12176

Actually, research won’t be the best place to start for most people. Just check my list of writing and pick your topic of interest, focusing on the more recent papers. Much of what I write concerns what will happen more than two years from now:

- List of My Papers on Quantum Computing

- https://medium.com/@jackkrupansky/list-of-my-papers-on-quantum-computing-af1be336410e

My original proposal for this topic

For reference, here is the original proposal I had for this topic. It may have some value for some people wanting a more concise summary of this paper.

- Quantum computing advances we need to see over the coming 12 to 18 to 24 months to stay on track. Qubit fidelity — 3 to 4 nines — 2.5, 2.75, 3, 3.25, 3.5, 3.75 — moving towards near-perfect qubits. Qubit connectivity — Trapped ions or Bus architecture for transmon qubits. Fine phase granularity. Reasonably nontrivial use of quantum Fourier transform (QFT) and quantum phase estimation (QPE). To be viable and relevant, trapped ion machines need to grow to at least 32 to 48 qubits, if not 64 to 72 or even 80. Need to see lots of 32 to 40-qubit algorithms. Need alternative to Quantum Volume capacity and quality metric to support hardware and algorithms with more than 50 qubits. Need to see 44-qubit classical quantum simulators for reasonably deep quantum circuits. Is that about it? 64 to 128 qubits should be enough for this time period.
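
As a rough illustration of two of the numbers in that proposal, here is a minimal Python sketch of the arithmetic behind classical simulation and fine phase granularity. It rests on assumptions that are mine rather than anything stated above: a full state-vector simulator that stores one 16-byte complex amplitude per basis state, and the textbook QFT circuit, whose smallest controlled rotation on n qubits is 2π/2^n. The function names are hypothetical, chosen only for this example.

```python
import math

# Assumption: double-precision complex amplitudes (8 bytes real + 8 bytes imaginary).
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to hold a full state vector of 2**num_qubits amplitudes."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def smallest_qft_angle(num_qubits: int) -> float:
    """Smallest controlled-phase angle (radians) in a textbook QFT on num_qubits."""
    return 2 * math.pi / (2 ** num_qubits)


# Memory grows by a factor of two for every added qubit.
for n in (32, 40, 44, 50):
    tib = state_vector_bytes(n) / 2 ** 40
    print(f"{n} qubits: {tib:,.4f} TiB of amplitudes")

# The phase resolution the hardware must honor shrinks just as fast.
for n in (16, 20, 32):
    print(f"{n}-qubit QFT: smallest rotation {smallest_qft_angle(n):.3e} rad")
```

Under these assumptions a 44-qubit state vector needs 256 TiB and a 50-qubit one needs 16 PiB, which gives a sense of why 44 qubits is an ambitious simulator target and why a metric that depends on classical simulation becomes impractical around 50 qubits. Real simulators may use single precision, compression, or tensor-network methods, so treat these figures as an upper-bound estimate rather than a requirement.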

Summary and conclusions

- Current quantum computing technology is too limited. Current quantum computers are simply not up to the task of supporting any production-scale practical real-world applications.

- The ENIAC Moment is not in sight. No hardware, algorithm, or application is capable of achieving The ENIAC Moment for quantum computing at this time. It’s a coin flip whether it will be achievable within two years.

- We’re still deep in the pre-commercialization stage. Overall, we’re still deep in the pre-commercialization stage of quantum computing. Nowhere close to being ready for commercialization. Much research is needed.

- Advances are needed in all of the major areas. Hardware (and firmware), support software (and tools), algorithms (and algorithm support), applications (and application support), and research in general (in every area).

- Higher qubit fidelity is needed.

- Greater qubit connectivity is needed.

- Greater qubit coherence time and circuit depth are needed.

- Finer granularity of qubit phase is needed.

- Higher fidelity qubit measurement is needed.

- Quantum advantage needs to be reached.

- Near-perfect qubits are required.

- Trapped-ion quantum computers need more qubits.

- Neutral-atom quantum computers need to support 32 to 48 qubits.

- Support quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum. Most of the hardware advances are needed to support QFT and QPE.

- A replacement for the Quantum Volume metric is needed. For circuits using more than 32–40 qubits and certainly for 50 qubits and beyond.

- Need application category-specific benchmarks. Generic benchmarks aren’t very helpful to real users.

- Need 44-qubit classical quantum simulators for reasonably deep quantum circuits.

- Quantum algorithms using 32 to 40 qubits are needed. Need to be very common, typical.

- Need to see some real algorithm using 100, or at least 80, qubits. Something that really pushes the limits.

- Need to see some potential for algorithms up to 160 qubits.

- Need to see reasonably nontrivial use of quantum Fourier transform (QFT) and quantum phase estimation (QPE).

- Algorithms need to be automatically scalable. Generative coding and automated analysis to detect scaling problems.

- Higher-level algorithmic building blocks are needed. At least a decent start, although much research is needed.

- Need a rich set of sample quantum algorithms. A better starting point for new users.

- Need support for modeling circuit repetitions. Take the guesswork out of shot count (circuit repetitions).

- Need higher standards for documenting algorithms. Especially discussion of scaling and quantum advantage.

- Need to see some real applications using 100, or at least 80, qubits. Something that really pushes the limits.

- Need to see at least a few applications which are candidates or at least decent stepping stones towards The ENIAC Moment.

- Need to see applications using quantum Fourier transform (QFT) and quantum phase estimation (QPE) on 16 to 20 qubits. And more — 32 to 48, but 16 to 20 minimum.

- Need to see at least a few stabs at application frameworks.

- Need a rich set of sample quantum applications. A better starting point for new users.

- Research is needed in general, in all areas. Including higher-level programming models, much higher-level and richer algorithmic building blocks, rich libraries, application frameworks, foundations for configurable packaged quantum solutions, quantum-native programming languages, more innovative hardware architectures, and quantum error correction (QEC).

- We’re still in stage 0 of the path to commercialization of quantum computing. Achievement of the technical goals outlined in this paper will leave us poised to begin stage 1, the final path to The ENIAC Moment. The work outlined here lays the foundation for stage 1 to follow.

- A critical mass of these advances is needed. But exactly what that critical mass is and exactly what path will be needed to get there will be a discovery process rather than some detailed plan known in advance.

- Which advance is the most important, critical, and urgent — the top priority? There are so many vying for achieving critical mass.

- Achievement of these technical goals will leave the field poised to achieve The ENIAC Moment.

- Achievement of these technical goals will mark the end of the NISQ era.

- Achievement of these technical goals will mark the advent of the Post-NISQ era.

- Quantum Winter is unlikely. I’m not predicting a Quantum Winter in two years and think it’s unlikely, but it’s not out of the question if a substantial fraction (critical mass) of the advances from this paper are not achieved.

- Beyond two years? A combination of fresh research, extrapolation of the next two years, and topics I’ve written about in my previous papers. But this paper focuses on the next two years.

For more of my writing: List of My Papers on Quantum Computing.