Why I’m Rapidly Losing Faith in the Prospects for Quantum Error Correction

Full, automatic, and transparent quantum error correction (QEC) is no longer a slam-dunk panacea from my perspective. I feel that it would be truly foolhardy to bet heavily that quantum error correction is going to magically lead us to fault-tolerant quantum computing and perfect logical qubits. Not today, not next year, not in two years, and not in five years — or likely even ever.

Five years ago and even just two years ago I was quite enthusiastic and supportive of the eventual prospects for quantum error correction even if it had no near-term prospects. But two years ago as I was writing up my thoughts on fault-tolerant quantum computing, quantum error correction, and logical qubits my confidence in quantum error correction cracked and started a slow and very gradual crumble as I dug into the details and contemplated the consequences, ramifications, and issues involved.

Instead, I fairly quickly concluded that near-perfect physical qubits (four to five nines of qubit fidelity, maybe in the three nines range for some applications) were a much more ideal goal for both the near term and the medium term, if not the long term as well.

Even two years ago I still considered full quantum error correction as a more ideal solution for the longer term, but even then I was aware of most of the issues I summarize in this informal paper. In fact, only in the past year has my confidence in quantum error correction fully crumbled.

Now, it’s time for me to come clean and admit the ugly truth about quantum error correction.

In truth, I soft-pedaled the title of this informal paper — it more accurately should have been:

- Why I’ve Completely Given Up Hope in the Prospects for Quantum Error Correction

To be clear, I’m not suggesting that there are logic errors in any of the quantum error correction algorithms. My concern is primarily that, even if the algorithms do “work”, they won’t do the full job and correct for all possible types of errors.

In short, quantum error correction (QEC) as it is currently envisioned won’t lead to the full, perfect fault-tolerant quantum computing (FTQC) that is currently being promoted.

Generally, fault-tolerant quantum computing is essentially a synonym for implementing quantum error correction, subject to the caveat listed above, that quantum error correction is unlikely to deliver all that it promises, or at least all that its promoters promise.

All of that said, I concede that at any moment somebody might have a research breakthrough and come up with a startling new approach to quantum error correction which addresses some, many, most, or even all of my concerns. After all, they do say that hope does spring eternal. That said, we are where we are, which is what I need to focus on at the moment.

Note that quantum error correction (QEC) should not be confused with manual error mitigation or quantum error suppression, such as libraries which tweak and tune pulse sequences to minimize gate errors. Quantum error correction is automatic and transparent. It’s about logical qubits rather than physical qubits. And quantum error correction eliminates the limit of coherence time, enabling much more complex quantum algorithms.

Topics discussed in this informal paper:

- My thoughts on fault-tolerant quantum computing, quantum error correction, and logical qubits

- Rapidly losing faith? Lost all hope is more like it

- But never say never

- The nails in the coffin for quantum error correction

- In short, most urgently

- For now, use a simulator

- Near-perfect qubits

- Fault-tolerant quantum computing = quantum error correction

- Without full QEC, fault-tolerant quantum computing will consist of near-perfect qubits, greater coherence time, shorter gate execution time, error mitigation, error suppression, and circuit repetition

- Post-NISQ quantum computing — NPISQ and NPSSQ using near-perfect qubits

- Fine granularity of phase is likely problematic

- Lack of fine granularity of phase and probability amplitude may render large complicated quantum algorithms hopeless

- Quantum error mitigation vs. quantum error correction

- No, even quantum error correction doesn’t eliminate all or even most subtle gate errors

- IBM quantum error suppression and Q-CTRL Fire Opal for gate errors, but not for coherence time or algorithm complexity

- Quantum error suppression is really just compensating for flawed logic in the implementation of gates

- Call for more research

- Conclusion

My thoughts on fault-tolerant quantum computing, quantum error correction, and logical qubits

For my thoughts on fault-tolerant quantum computing, quantum error correction, and logical qubits, written almost two years ago (February, 2021), see my informal paper:

- Preliminary Thoughts on Fault-Tolerant Quantum Computing, Quantum Error Correction, and Logical Qubits

- https://jackkrupansky.medium.com/preliminary-thoughts-on-fault-tolerant-quantum-computing-quantum-error-correction-and-logical-1f9e3f122e71

Rapidly losing faith? Lost all hope is more like it

Okay, that’s putting it too mildly. I’m well beyond losing faith. I’ve finally arrived at fully giving up all hope for quantum error correction. Details to follow.

But never say never

That said, one of my philosophies is never say never, so I’ve worded the title so as to suggest that I haven’t absolutely given up all hope, and that there is at least a sliver of hope that somehow things will turn around. Maybe, just maybe, somebody will eventually come up with a fresh quantum error correction scheme which actually works and addresses all of the issues I raise here in this informal paper.

The nails in the coffin for quantum error correction

These are the issues — the insurmountable issues — that I’ve run into during my quantum journey towards quantum error correction, not in any particular order or priority:

- It’s taking too long. We need it now, or at least relatively soon, not far off in the future.

- We’re not making any palpable progress toward achieving it. Two to five years ago it was two to five years in the future. In two to five years it will also likely be two to five years in the future.

- 1,000 physical qubits to produce a single logical qubit is outright absurd. That’s what some people are now talking about, even though just a few years ago people were talking about dozens of physical qubits. Sure, maybe that’s the way the math works out (for d = 29 or 31) to achieve an extremely low error rate when starting with noisy physical qubits (98% to 99% fidelity), but this simply doesn’t seem like a practical path. Maybe one difficulty is that we really do need to start with a much lower error rate for physical qubits, such as my proposal for near-perfect qubits with four to five nines of qubit fidelity (or the three nines range for some applications), so that d could be much more manageable, hopefully fewer than 100 physical qubits per logical qubit.

- Why can’t we even demonstrate a stripped down quantum error correction today? Even if we do need hundreds to thousands of physical qubits to run real algorithms, we should be able to demonstrate at least a weak form of quantum error correction (say d = 3 to 5) for three to five qubits on classical quantum simulators and even with the physical qubits we can produce today. Granted, logical qubits using d = 3 might not be terribly useful, but would at least prove the concepts — and vendors could then lay out a roadmap for the various levels of d up to whatever level is expected to deliver true fault-tolerant quantum computing (maybe d = 29 or d = 31.)

- No good story for how qubit connectivity will be accomplished for non-adjacent logical qubits, or how we will achieve full any to any connectivity for logical qubits. No mention of any quantum state bus, dynamically-routable couplers, or whether incredibly tedious logical SWAP networks might be needed. IBM did briefly mention on-chip non-local couplers in a paper in September, but didn’t offer enough details to confirm that this would address quantum error correction within a logical qubit or connectivity between non-adjacent logical qubits, or both. A full story on qubit connectivity is very much needed, but has not been provided — by any vendor, although trapped-ion qubits do have full qubit connectivity, at least within modules.

- Nobody has a handle on how many physical qubits it will take to produce logical qubits for a practical quantum computer. Estimates have varied. 1,000 has been a popular estimate over the past two years, but how stable or certain is that, or how practical? It also depends on the initial error rate of physical qubits and the expected or acceptable residual error rate after correction, neither of which is stable, certain, or proven to be practical.

- Nobody has a handle on the residual error rate even with full QEC.

- Nobody has a handle on what error rate we need to achieve for physical qubits to support a practical quantum computer using logical qubits.

- No clarity (or even mention!) of error detection and correction for fine granularity of phase and probability amplitude. Needed for quantum Fourier transform (QFT), quantum phase estimation (QPE), quantum amplitude estimation (QAE), quantum order-finding, and Shor’s factoring algorithm.

- Nobody has a scheme to deal with the continuous analog values of probability amplitude and phase, especially for large, high-precision quantum Fourier transforms and quantum phase estimation. No vendor currently documents the granularity they currently achieve. No vendor makes a commitment to support even tens to hundreds of gradations, let alone thousands, millions, billions, and more… and supported by QEC.

- Oops… no ability to detect and correct continuous analog errors. QEC seems focused on coherence time and general noise for binary qubits, not continuous analog probability amplitude and phase. This seems to be the mortal Achilles heel that dooms the prospects for QEC addressing noisy qubits.

- Oops… no ability to detect and correct gate execution errors. QEC seems focused on maintaining the coherence and stability of idle qubits for extended periods of time, not focused on detecting and correcting errors in the execution of gates. If a qubit or entangled ensemble of qubits is in some arbitrary, unknown state, how can any error detection scheme possibly know or detect what state the qubit or entangled ensemble of qubits should be in after the execution of a gate which applies some arbitrary and potentially very fine-grained rotation about any of the X, Y, or Z axes? Answer: Obviously it can’t. But nobody talks about this! QEC maintains stability and coherence — it can’t possibly magically know what state the execution of an arbitrary gate should produce.

- No scheme for detecting and correcting errors for product states of ensembles of entangled qubits. Current schemes focus exclusively on maintaining the coherence and stability of individual, isolated qubits, not multi-qubit product states.

- No clarity as to correcting for measurement errors.

- QEC is just much too complex. KISS — Keep It Simple Stupid. We need something now. We needed something yesterday, last year, and several years ago. But raw complexity prevents that.

- Semantic absurdity — quantum error correction on NISQ is a contradiction in terms. The “IS” in NISQ stands for intermediate scale and is defined by Preskill as “a number of qubits ranging from 50 to a few hundred.” Even with d = 3, you would only have 48 logical qubits for a 433-qubit IBM Osprey quantum computer, or only 17 logical qubits with d = 5. Or only 28 logical qubits for a 256-qubit quantum computer with d = 3, or 10 logical qubits with d = 5. And, of course, you would have ZERO logical qubits if you have only hundreds of physical qubits but require 1,000 (d = 29 or 31) physical qubits per logical qubit. So, by definition anything resembling a practical quantum computer with a non-trivial number of physical qubits per logical qubit (d > 17) would not and could not be a NISQ device, and certainly not if 1,000 physical qubits are needed for even a single logical qubit. That said, I can see the value of some research and experimental work using smallish values of d, but such efforts would not be suitable for production-scale deployment.

- Further semantic absurdity — the whole thrust of quantum error correction has been to enable practical quantum computing using NISQ devices, so if corrected logical qubits are still fairly noisy or too few in count, these corrected qubits are effectively useless and quantum error correction was a wasted effort. At a minimum, the semantic link between quantum error correction and NISQ needs to be severed. Research is fine for a handful of logical qubits, but anything practical, with dozens to hundreds of high-quality logical qubits, would be beyond NISQ, the post-NISQ era.

- QEC has turned into a cottage industry, a real cash-cow for researchers, so there’s no incentive to finish the job, which would kill the cash cow. I’m all in favor of more research and increasing funding for research, but research in quantum error correction has gotten ridiculous, with no end in sight. It has literally become an endless process, or so it seems.

- Lack of clarity as to how much of QEC is a research problem and how much is just engineering. Maybe we’re spending too much effort focusing on engineering issues rather than doing enough fundamental research. Or vice versa, that if we focused on basing QEC on near-perfect qubits then we wouldn’t need so much additional research on QEC itself.
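
The logical-qubit counts in the NISQ bullet above are consistent with assuming roughly d² physical qubits per logical qubit. That cost model is inferred from the quoted numbers rather than stated outright, and other accounting conventions (for example, also counting measurement qubits) give larger figures. A minimal Python sketch of the arithmetic under that assumption, with hypothetical function names:

```python
def physical_per_logical(d: int) -> int:
    """Physical qubits per logical qubit, ASSUMING a cost of d * d.

    The d * d model is an assumption inferred from the counts quoted in
    the text; real codes and layouts may need more.
    """
    return d * d


def logical_qubit_count(physical_qubits: int, d: int) -> int:
    """Whole logical qubits that fit in a device of the given size."""
    return physical_qubits // physical_per_logical(d)


# Device sizes and distances mentioned in the text.
for physical in (433, 256):
    for d in (3, 5):
        count = logical_qubit_count(physical, d)
        print(f"{physical} physical qubits, d = {d}: {count} logical qubits")

# The distances associated with roughly 1,000 physical qubits each.
for d in (29, 31):
    print(f"d = {d}: {physical_per_logical(d)} physical qubits per logical qubit")
```

Under this model a device with only hundreds of physical qubits cannot hold even one logical qubit at d = 29 or 31, which is the point of the bullet.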

In short, most urgently

The most urgent difficulties which are deal killers for quantum error correction are:

- Inability to detect and correct fine-grained analog value rotation errors in gate execution.

- Inability to detect and correct errors in multi-qubit product states for entangled qubits.

- Lack of full any to any connectivity for logical qubits.

- Complexity and large number of physical qubits needed for a single logical qubit.

For now, use a simulator

I didn’t put a timeframe on that short list of the most urgent deal killers for quantum error correction since quantum algorithm designers can do a fair amount of work using classical quantum simulators while they wait for quantum error correction or better qubits, at least up to 32 to 40 to 50 qubits.
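
To see why the practical ceiling sits somewhere in that 32 to 50 qubit range, here is a rough sketch of the memory a full state-vector simulation would need. It assumes one double-precision complex number (16 bytes) per amplitude and ignores any working space. Other simulation methods can behave quite differently, and the function names are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per amplitude


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


for n in (32, 40, 50):
    print(f"{n} qubits: {human_readable(state_vector_bytes(n))}")
```

Each additional qubit doubles the requirement, so the jump from 32 to 50 qubits is a factor of 2^18.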

Near-perfect qubits

For more on near-perfect qubits (four to five nines of qubit fidelity, or maybe in the three nines range for some applications), see my informal paper:

- What Is a Near-perfect Qubit?

- https://jackkrupansky.medium.com/what-is-a-near-perfect-qubit-4b1ce65c7908
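
To put numbers on "nines", here is a rough sketch that converts a whole number of nines into a per-gate error rate and then estimates the chance that a circuit runs with no gate error at all. It assumes independent, equally likely gate errors, which is a simplification, and the function names are hypothetical. Fractional nines are left out because converting them depends on a convention not spelled out here.

```python
def error_rate_from_nines(nines: int) -> float:
    """Per-gate error rate for a whole number of nines (3 nines = 99.9%)."""
    return 10.0 ** (-nines)


def error_free_probability(nines: int, gate_count: int) -> float:
    """Chance that none of gate_count gates fails.

    ASSUMES independent gate errors, each with the same probability.
    """
    return (1.0 - error_rate_from_nines(nines)) ** gate_count


for nines in (2, 3, 4, 5):
    for gates in (100, 1_000, 10_000):
        p = error_free_probability(nines, gates)
        print(f"{nines} nines, {gates:>6} gates: {p:.4f} chance of no gate error")
```

Under these assumptions, two nines leave almost no chance of a clean 1,000-gate run, while four nines keep it at roughly nine in ten.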

Fault-tolerant quantum computing = quantum error correction

Fault-tolerant quantum computing is essentially a synonym for implementing quantum error correction. In theory.

Without full QEC, fault-tolerant quantum computing will consist of near-perfect qubits, greater coherence time, shorter gate execution time, error mitigation, error suppression, and circuit repetition

So many hopes have been pinned on the grand promise of the eventual prospects of full quantum error correction. Without QEC, our only real hope for fault-tolerant quantum computing is a combination of:

- Near-perfect qubits. Four to five nines of qubit fidelity should be enough for many algorithms, and 3.0 to 3.75 nines may be enough for some applications.

- Greater coherence time. That was a key benefit from quantum error correction — stability for an indefinite period of time. The need is to enable larger and more complex quantum circuits.

- Shorter gate execution time. Given physical limits to increasing coherence time, shorter gate execution time enables larger and more complex quantum circuits.

- Error mitigation. Partially manual, hopefully a significant amount of automation, to correct more common errors.

- Error suppression. In lieu of fixing the current simplistic implementation of gates at the pulse level.

- Circuit repetition. Execute each circuit enough times that statistical analysis can deduce from the distribution of the results what the true, expected value is likely to be. I like to call this poor man’s quantum error correction — although it doesn’t help with indefinite coherence time.
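
The circuit repetition item above can be sketched in a few lines. This is a toy model, not a real quantum backend: `noisy_run` is a hypothetical stand-in for one shot of a noisy circuit, and the sketch assumes the errors are spread out enough that the correct bitstring is still the single most frequent outcome.

```python
import random
from collections import Counter


def noisy_run(correct: str, p_correct: float, rng: random.Random) -> str:
    """Hypothetical stand-in for one shot of a noisy circuit.

    Returns the correct bitstring with probability p_correct, otherwise
    some other bitstring of the same length chosen uniformly at random.
    """
    if rng.random() < p_correct:
        return correct
    n = len(correct)
    while True:
        candidate = format(rng.getrandbits(n), f"0{n}b")
        if candidate != correct:
            return candidate


def most_likely_result(correct: str, p_correct: float, shots: int, seed: int = 0):
    """Repeat the circuit and return (most frequent result, its fraction)."""
    rng = random.Random(seed)
    counts = Counter(noisy_run(correct, p_correct, rng) for _ in range(shots))
    result, hits = counts.most_common(1)[0]
    return result, hits / shots


result, fraction = most_likely_result("1011", p_correct=0.6, shots=1000)
print(f"most frequent result: {result} ({fraction:.1%} of shots)")
```

If the noise is biased toward one particular wrong answer, picking the most frequent result can fail, which is one reason this is only a poor man’s substitute.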

Post-NISQ quantum computing — NPISQ and NPSSQ using near-perfect qubits

Technically, post-NISQ quantum computing was supposed to be fault-tolerant quantum computing, but even if quantum error correction does eventually become a practical reality, I see non-corrected quantum computing based on near-perfect qubits — with full any-to-any qubit connectivity — as a valuable and very beneficial stepping stone between NISQ and QEC.

I use the terms NPISQ and NPSSQ for this stepping stone:

- NPISQ. Near-perfect intermediate-scale quantum device. 50 to a few hundred near-perfect qubits.

- NPSSQ. Near-perfect small-scale quantum device. Fewer than 50 near-perfect qubits.

For more on those and other variations, see my informal paper:

- Beyond NISQ — Terms for Quantum Computers Based on Noisy, Near-perfect, and Fault-tolerant Qubits

- https://jackkrupansky.medium.com/beyond-nisq-terms-for-quantum-computers-based-on-noisy-near-perfect-and-fault-tolerant-qubits-d02049fa4c93

As an example, I have proposed an NPSSQ quantum computer with 48 fully connected near-perfect qubits:

- 48 Fully-connected Near-perfect Qubits As the Sweet Spot Goal for Near-term Quantum Computing

- https://jackkrupansky.medium.com/48-fully-connected-near-perfect-qubits-as-the-sweet-spot-goal-for-near-term-quantum-computing-7d29e330f625

Such a quantum computer may not handle all apps, but should be able to deliver a significant quantum advantage for a fairly wide range of applications.

That could be an excellent starting point for the low-end of practical quantum computers.

Fine granularity of phase is likely problematic

For more on the issues surrounding fine granularity of phase, and of probability amplitude, see my informal paper:

- Beware of Quantum Algorithms Dependent on Fine Granularity of Phase

- https://jackkrupansky.medium.com/beware-of-quantum-algorithms-dependent-on-fine-granularity-of-phase-525bde2642d8
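
To make the granularity concern concrete: the textbook quantum Fourier transform on n qubits uses controlled phase rotations of angle 2π/2^k for k = 2 through n, so the smallest rotation shrinks exponentially with n. A small Python sketch of how quickly that happens (function names are hypothetical, and approximate QFT variants that drop the smallest rotations are not modeled):

```python
import math


def qft_rotation_angles(num_qubits: int):
    """Distinct controlled-phase angles (radians) in a textbook QFT.

    The angles are 2*pi / 2**k for k = 2 .. num_qubits.
    """
    return [2 * math.pi / 2 ** k for k in range(2, num_qubits + 1)]


def smallest_qft_angle(num_qubits: int) -> float:
    """Smallest controlled-phase angle (radians) in an n-qubit QFT."""
    if num_qubits < 2:
        raise ValueError("a QFT needs at least 2 qubits to have a controlled rotation")
    return 2 * math.pi / 2 ** num_qubits


for n in (10, 20, 30, 50):
    angle = smallest_qft_angle(n)
    print(f"{n}-qubit QFT: smallest rotation = {angle:.3e} radians "
          f"(one part in 2**{n} of a full turn)")
```

Whether real hardware can resolve rotations this small is exactly the open question raised in the paper linked above.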

Lack of fine granularity of phase and probability amplitude may render large complicated quantum algorithms hopeless

For more on how a lack of fine granularity of phase and probability amplitude could severely curtail the full promise of quantum computing, see my informal paper:

- Is Lack of Fine Granularity of Phase and Probability Amplitude the Fatal Achilles Heel Which Dooms Quantum Computing to Severely Limited Utility?

- https://jackkrupansky.medium.com/is-lack-of-fine-granularity-of-phase-and-probability-amplitude-the-fatal-achilles-heel-which-dooms-90be4b48b141

Quantum error mitigation vs. quantum error correction

Quantum error mitigation and quantum error correction may sound as if they are the same, close, or synonyms, but there is more than a nuance of difference.

Quantum error mitigation is a manual process for compensating for some common types of errors, but doesn’t increase coherence time, so it doesn’t increase the size of the quantum algorithms which can be handled. Various libraries and tools may make it easier than a purely manual process, but it’s neither automatic nor transparent. And, the quantum algorithm designer is still working with physical qubits — no logical qubits.

Quantum error correction, on the other hand, is fully automatic, fully transparent, corrects a wider range of errors, and does increase coherence time, allowing much more complex quantum algorithms. And, the quantum algorithm designer gets to work with logical qubits rather than physical qubits.

No, even quantum error correction doesn’t eliminate all or even most subtle gate errors

Quantum error correction can eliminate concern with coherence time and noise, enable larger and more complex quantum algorithms, automate all forms of quantum error mitigation, and even correct some gate errors to a limited degree, but even it does not fully eliminate all or even most gate errors.

There isn’t even a theoretical method to guess or calculate what the result of executing a gate should be (other than full classical simulation), so any error in the execution of the gate will go largely undetected unless it is egregious enough that the error detection coding scheme can detect it, but very subtle errors may well go undetected.

Very small rotations of probability amplitude or phase can be especially problematic.

The only real solution is better qubit technology.

This was in fact a problem with early transistors. It took monumental effort in process improvements and purity of silicon and the other materials used to construct transistors to get transistors to the virtually flawless level of performance that we all experience today.

We may have to wait a similar number of years or even a decade or longer before qubit technology has a similar level of improvement.

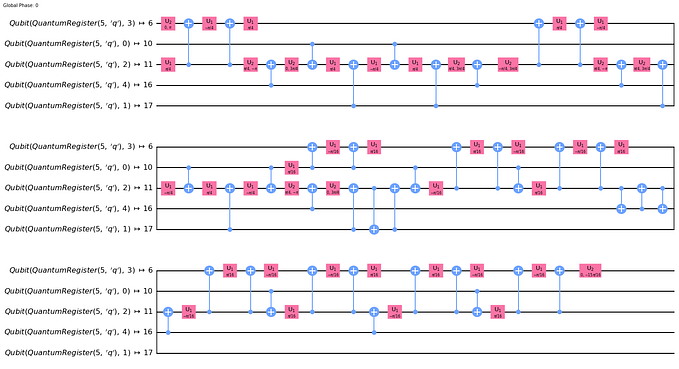

IBM quantum error suppression and Q-CTRL Fire Opal for gate errors, but not for coherence time or algorithm complexity

Besides error mitigation and error correction, there is a third, newer approach — quantum error suppression. It’s rather complicated, but the net effect is to fix the problems with qubits and gate execution at the pulse level, but without actually fixing the qubits per se.

Two approaches to quantum error suppression are:

- IBM quantum error suppression

- Q-CTRL Fire Opal

I won’t attempt to go into the details here since they are rather complicated and not really related to quantum error correction anyway. In essence, they are better than simple error mitigation but not as beneficial as full, true quantum error correction, especially in terms of coherence time. They can in fact reduce gate errors, though not eliminate them.

For more details on IBM’s approach:

- What’s the difference between error suppression, error mitigation, and error correction?

- https://research.ibm.com/blog/quantum-error-suppression-mitigation-correction

For more details on Q-CTRL’s approach:

- Software tools for quantum control: Improving quantum computer performance through noise and error suppression

- https://arxiv.org/abs/2001.04060

Quantum error suppression is really just compensating for flawed logic in the implementation of gates

The ugly, undisclosed truth about quantum error suppression, whether from IBM, Q-CTRL, or some other vendor, is that all that it is really doing is correcting flawed logic in the implementation of quantum logic gates. If IBM and other hardware vendors would just fix their flawed logic, then this separate process of quantum error suppression would not be needed.

That said, this fix only eliminates some fraction of the possible gate errors. There is no fix on the horizon for the rest of the gate errors, especially rotation errors for very small angles.

Worse, even the best quantum error correction will do nothing to address any remaining gate errors.

Call for more research

Despite everything I’ve said in this informal paper, I still hold out hope for some sort of dramatic improvement (as happened for classical transistors), but much research will be needed.

The great hope is that dramatic improvements in basic qubit technology will eliminate virtually all of the band-aid approaches discussed in this paper. But even that will require a lot more research.

Conclusion

I do dearly wish that quantum error correction (QEC) was indeed the ultimate panacea for all quantum errors and that it was a slam-dunk certainty in the relatively near-term, but it just ain’t so. Not now, not real soon, not in the medium term, and not likely in even the longer term or even ever.

Seriously, though, I always try to never say never. There is always the prospect for some magical breakthrough. True, but just don’t get your hopes up.

Best to refrain from betting too much — or anything — on false hopes for full quantum error correction coming to save your large and complex algorithms in three to five to seven years, or even ever.

For now, and indefinitely, place your bets on near-perfect qubits.

And until we have real hardware which fully supports near-perfect qubits — and full any to any qubit connectivity, stick to simulation of your quantum algorithms.

And, ultimately, a lot more research in basic qubit technologies is really the only way out. That’s what we needed to do for classical transistors, so there’s no reason to expect that we can avoid needing to do that for basic qubit technologies. And near-perfect qubits are an early milestone on any such research path — even if full quantum error correction were to become a reality.

But the real conclusion here is not me telling or advising others what they should do, but simply me laying out my reasoning and rationale for no longer being a true believer in the promise and prospects for quantum error correction.

For more of my writing: List of My Papers on Quantum Computing.